Over the past year the PyImageSearch blog has had a lot of popular blog posts. Using k-means clustering to find the dominant colors in an image was (and still is) hugely popular. One of my personal favorites, building a kick-ass mobile document scanner, has been the most popular PyImageSearch article for months. And the first (big) tutorial I ever wrote, Hobbits and Histograms, an article on building a simple image search engine, still gets a lot of hits today.

But by far, the most popular post on the PyImageSearch blog is my tutorial on installing OpenCV and Python on your Raspberry Pi 2 and B+. It’s really, really awesome to see the love you and the PyImageSearch readers have for the Raspberry Pi community — and I plan to continue writing more articles about OpenCV + the Raspberry Pi in the future.

Anyway, after I published the Raspberry Pi + OpenCV installation tutorial, many of the comments asked that I continue on and discuss how to access the Raspberry Pi camera using Python and OpenCV.

In this tutorial we’ll be using picamera, which provides a pure Python interface to the camera module. And best of all, I’ll be showing you how to use picamera to capture images in OpenCV format.

Read on to find out how…

IMPORTANT: Be sure to follow one of my Raspberry Pi OpenCV installation guides prior to following the steps in this tutorial.

OpenCV and Python versions:

This example will run on Python 2.7/Python 3.4+ and OpenCV 2.4.X/OpenCV 3.0+.

Step 1: What do I need?

To get started, you’ll need a Raspberry Pi camera board module.

I got my 5MP Raspberry Pi camera board module from Amazon for under $30, with shipping. It’s hard to believe that the camera board module is almost as expensive as the Raspberry Pi itself — but it just goes to show how much hardware has progressed over the past 5 years. I also picked up a camera housing to keep the camera safe, because why not?

Assuming you already have your camera module, you’ll need to install it. Installation is very simple and instead of creating my own tutorial on installing the camera board, I’ll just refer you to the official Raspberry Pi camera installation guide:

Assuming your camera board is properly installed and set up, it should look something like this:

Step 2: Enable your camera module.

Now that you have your Raspberry Pi camera module installed, you need to enable it. Open up a terminal and execute the following command:

$ sudo raspi-config

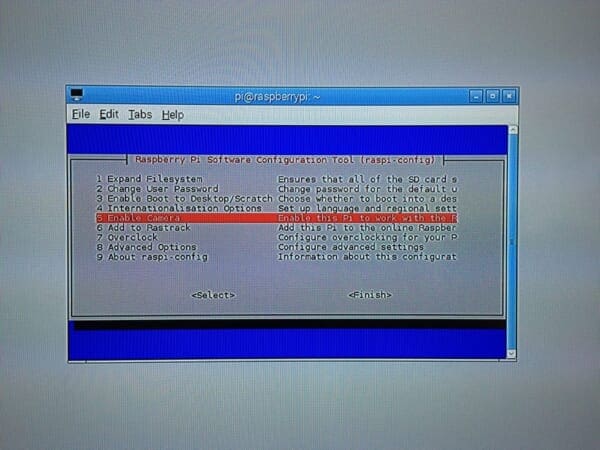

This will bring up a screen that looks like this:

Use your arrow keys to scroll down to Option 5: Enable camera, hit your enter key to enable the camera, and then arrow down to the Finish button and hit enter again. Lastly, you’ll need to reboot your Raspberry Pi for the configuration to take effect.
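
After rebooting, one way to confirm that the setting took hold is the `vcgencmd get_camera` command, which on Raspbian releases using the legacy camera stack prints a line such as `supported=1 detected=1`. The sketch below is a minimal helper and not part of the original tutorial: the function names `parse_camera_status`, `camera_ready`, and `query_camera` are my own, and the exact output format is an assumption based on that legacy stack.

```python
import subprocess


def parse_camera_status(output):
    """Parse text such as 'supported=1 detected=1' into a dict of ints."""
    status = {}
    for token in output.split():
        key, sep, value = token.strip(",").partition("=")
        # skip tokens that are not simple key=integer pairs
        if sep and value.isdigit():
            status[key] = int(value)
    return status


def camera_ready(status):
    """Return True when the camera is both enabled and physically detected."""
    return status.get("supported") == 1 and status.get("detected") == 1


def query_camera():
    """Run vcgencmd on the Pi itself (assumes the tool is on the PATH)."""
    output = subprocess.check_output(["vcgencmd", "get_camera"])
    return parse_camera_status(output.decode("utf-8"))


# example with a literal string, so it runs even without a Pi attached
status = parse_camera_status("supported=1 detected=0")
print(camera_ready(status))  # False: enabled, but no camera detected
```

On the Pi itself, `camera_ready(query_camera())` performs the same check against the live system. A result of `False` usually points to either the raspi-config setting or the ribbon cable.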

Step 3: Test out the camera module.

Before we dive into the code, let’s run a quick sanity check to ensure that our Raspberry Pi camera is working properly.

Note: Trust me, you’ll want to run this sanity check before you start working with the code. It’s always good to ensure that your camera is working prior to diving into OpenCV code; otherwise you could easily waste time wondering why your code isn’t working when it’s simply the camera module itself that is causing you problems.

Anyway, to run my sanity check I connected my Raspberry Pi to my TV and positioned it such that it was pointing at my couch:

And from there, I opened up a terminal and executed the following command:

$ raspistill -o output.jpg

This command activates your Raspberry Pi camera module, displays a preview of the image, and then after a few seconds, snaps a picture and saves it to your current working directory as output.jpg.
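
If you would rather script this sanity check, the same command can be launched from Python. This is a minimal sketch and not something the tutorial requires: the helper names `build_raspistill_command` and `capture_test_photo` and the default values are my own choices, and it assumes `raspistill` is on the PATH. The `-o` (output file) and `-t` (delay in milliseconds before the photo is taken) options are standard `raspistill` flags.

```python
import os
import subprocess


def build_raspistill_command(output_path="output.jpg", delay_ms=2000):
    """Build the argument list for a single raspistill capture."""
    return ["raspistill", "-o", output_path, "-t", str(delay_ms)]


def capture_test_photo(output_path="output.jpg", delay_ms=2000):
    """Take one photo and report whether a non-empty file was written."""
    subprocess.check_call(build_raspistill_command(output_path, delay_ms))
    return os.path.isfile(output_path) and os.path.getsize(output_path) > 0
```

Calling `capture_test_photo()` on the Pi should return `True` once the file has been written. `subprocess.check_call` raises an exception if `raspistill` exits with an error, which is usually the first sign of a camera problem.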

Here’s an example of me taking a photo of my TV monitor (so I could document the process for this tutorial) as the Raspberry Pi snaps a photo of me:

And here’s what output.jpg looks like:

Clearly my Raspberry Pi camera module is working correctly! Now we can move on to some more exciting stuff.

Step 4: Installing picamera.

So at this point we know that our Raspberry Pi camera is working properly. But how do we interface with the Raspberry Pi camera module using Python?

The answer is the picamera module.

Remember from the previous tutorial how we utilized virtualenv and virtualenvwrapper to cleanly install and segment our Python packages from the system Python and packages?

Well, we’re going to do the same thing here.

Before installing picamera, be sure to activate our cv virtual environment:

$ workon cv

Note: If you are installing the picamera module system wide, you can skip the previous command. However, if you are following along from the previous tutorial, you’ll want to make sure you are in the cv virtual environment before continuing to the next command.

And from there, we can install picamera by utilizing pip:

$ pip install "picamera[array]"

IMPORTANT: Notice how I specified picamera[array] and not just picamera.

Why is this so important?

While the standard picamera module provides methods to interface with the camera, we need the (optional) array sub-module so that we can utilize OpenCV. Remember, when using Python bindings, OpenCV represents images as NumPy arrays — and the array sub-module allows us to obtain NumPy arrays from the Raspberry Pi camera module.
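
A quick way to confirm that both picamera and its array sub-module are visible from the active environment is to ask Python whether it can locate them. The sketch below is a minimal check under a few assumptions: it requires Python 3.4+ (for `importlib.util.find_spec`), and the helper name `missing_modules` is my own.

```python
import importlib.util


def missing_modules(names):
    """Return the subset of module names that cannot be found."""
    missing = []
    for name in names:
        try:
            found = importlib.util.find_spec(name) is not None
        except ImportError:
            # raised when a parent package (e.g. picamera) is absent
            found = False
        if not found:
            missing.append(name)
    return missing


# an empty list means everything the tutorial needs can be located
print(missing_modules(["picamera", "picamera.array", "numpy"]))
```

If `numpy` or `picamera.array` shows up in the printed list, re-running the `pip install "picamera[array]"` command inside the cv virtual environment is the first thing to try.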

Assuming that your install finished without error, you now have the picamera module (with NumPy array support) installed.

Step 5: Accessing a single image of your Raspberry Pi using Python and OpenCV.

Alright, now we can finally start writing some code!

Open up a new file, name it test_image.py, and insert the following code:

# import the necessary packages
from picamera.array import PiRGBArray
from picamera import PiCamera
import time
import cv2

# initialize the camera and grab a reference to the raw camera capture
camera = PiCamera()
rawCapture = PiRGBArray(camera)

# allow the camera to warmup
time.sleep(0.1)

# grab an image from the camera
camera.capture(rawCapture, format="bgr")
image = rawCapture.array

# display the image on screen and wait for a keypress
cv2.imshow("Image", image)
cv2.waitKey(0)

We’ll start by importing our necessary packages on Lines 2-5.

From there, we initialize our PiCamera object on Line 8 and grab a reference to the raw capture component on Line 9. This rawCapture object is especially useful since it (1) gives us direct access to the camera stream and (2) avoids the expensive compression to JPEG format, which we would then have to take and decode to OpenCV format anyway. I highly recommend that you use PiRGBArray whenever you need to access the Raspberry Pi camera — the performance gains are well worth it.

From there, we sleep for a tenth of a second on Line 12 — this allows the camera sensor to warm up.

Next, we capture the actual photo into the rawCapture object on Line 15, taking special care to request BGR format rather than RGB, and then read the resulting NumPy array on Line 16. OpenCV represents images as NumPy arrays in BGR order rather than RGB. This nuance is subtle, but very important to remember, as it can lead to some confusing bugs in your code down the line.
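
To make the channel-order point concrete, here is a small NumPy-only sketch (no camera needed). It assumes the usual height x width x channels layout that OpenCV uses, and the helper name `swap_red_blue` is my own. With OpenCV installed, `cv2.cvtColor(image, cv2.COLOR_BGR2RGB)` performs the same conversion.

```python
import numpy as np


def swap_red_blue(image):
    """Reverse the channel axis, turning BGR into RGB (or RGB into BGR)."""
    return image[:, :, ::-1]


# a tiny 1x2 "image": one pure-blue pixel and one pure-red pixel in BGR order
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)
rgb = swap_red_blue(bgr)
print(rgb[0, 0].tolist())  # [0, 0, 255]: blue is now the last channel
```

If an image ever shows up with blue and red swapped (skin tones looking blue is the classic symptom), a mismatch like this between the capture format and what the display code expects is a likely cause.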

Finally, we display our image on screen on Lines 19 and 20.

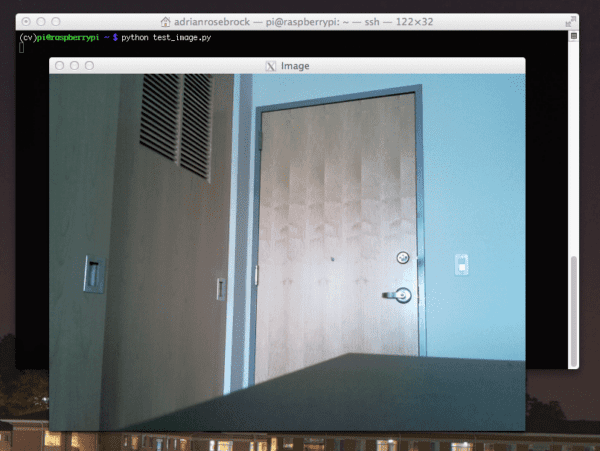

To execute this example, open up a terminal, navigate to your test_image.py file, and issue the following command:

$ python test_image.py

If all goes as expected you should have an image displayed on your screen:

Note: I decided to add this section of the blog post after I had finished up the rest of the article, so I did not have my camera setup facing the couch (I was actually playing with some custom home surveillance software I was working on). Sorry for any confusion, but rest assured, everything will work as advertised provided you have followed the instructions in the article!

Step 6: Accessing the video stream of your Raspberry Pi using Python and OpenCV.

Alright, so we’ve learned how to grab a single image from the Raspberry Pi camera. But what about a video stream?

You might guess that we are going to use the cv2.VideoCapture function here — but I actually recommend against this. Getting cv2.VideoCapture to play nice with your Raspberry Pi is not a nice experience (you’ll need to install extra drivers) and something you should generally avoid.

And besides, why would we use the cv2.VideoCapture function when we can easily access the raw video stream using the picamera module?

Let’s go ahead and take a look at how we can access the video stream. Open up a new file, name it test_video.py, and insert the following code:

# import the necessary packages
from picamera.array import PiRGBArray
from picamera import PiCamera
import time
import cv2

# initialize the camera and grab a reference to the raw camera capture
camera = PiCamera()
camera.resolution = (640, 480)
camera.framerate = 32
rawCapture = PiRGBArray(camera, size=(640, 480))

# allow the camera to warmup
time.sleep(0.1)

# capture frames from the camera
for frame in camera.capture_continuous(rawCapture, format="bgr", use_video_port=True):
    # grab the raw NumPy array representing the image so that
    # OpenCV can work with it directly
    image = frame.array

    # show the frame
    cv2.imshow("Frame", image)
    key = cv2.waitKey(1) & 0xFF

    # clear the stream in preparation for the next frame
    rawCapture.truncate(0)

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

This example starts off similarly to the previous one. We start off by importing our necessary packages on Lines 2-5.

And from there we construct our camera object on Line 8, which allows us to interface with the Raspberry Pi camera. However, we also take the time to set the resolution of our camera (640 x 480 pixels) on Line 9 and the frame rate (i.e. frames per second, or simply FPS) on Line 10. We then initialize our PiRGBArray object on Line 11, taking care to specify the same resolution as on Line 9.
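
To get a feel for what these two settings cost, it helps to estimate how much raw pixel data the loop has to move each second. The sketch below is only back-of-the-envelope arithmetic: the function name `raw_bytes_per_second` is my own, and it assumes 3 bytes per pixel (as in the "bgr" format) while ignoring any padding the camera may add.

```python
def raw_bytes_per_second(width, height, fps, channels=3):
    """Uncompressed data rate for 8-bit frames with the given geometry."""
    return width * height * channels * fps


full = raw_bytes_per_second(640, 480, 32)
half = raw_bytes_per_second(320, 240, 32)
print(full, half, full // half)  # 29491200 7372800 4
```

Halving both dimensions cuts the data volume to a quarter, which is why dropping to 320 x 240 is a common first step when the frame rate falls short.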

Accessing the actual video stream is handled on Line 17 by making a call to the capture_continuous method of our camera object.

This method returns an iterator that yields one frame from the video stream on each pass through the loop. The frame then has an array property, which corresponds to the frame in NumPy array format, so all the hard work is done for us on Lines 17 and 20!

We then take the frame of the video and display it on screen on Lines 23 and 24.

An important line to pay attention to is Line 27: You must clear the current frame before you move on to the next one!

If you fail to clear the frame, your Python script will throw an error — so be sure to pay close attention to this when implementing your own applications!
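
Why does skipping the clear step break things? The capture buffer accumulates bytes, so a second frame written without clearing no longer matches the size expected for a single frame. The sketch below illustrates the idea with a plain `io.BytesIO` stream standing in for `rawCapture`. It is an analogy under that assumption, not picamera’s actual implementation, and `FRAME_BYTES` and `write_frames` are hypothetical names.

```python
import io

FRAME_BYTES = 4 * 3 * 3  # hypothetical 4x3 frame with 3 bytes per pixel


def write_frames(clear_between_frames):
    """Write three fake frames and record the buffer size after each one."""
    stream = io.BytesIO()
    sizes = []
    for _ in range(3):
        stream.write(b"\x00" * FRAME_BYTES)
        sizes.append(len(stream.getvalue()))
        if clear_between_frames:
            # rewind and empty the buffer, ready for the next frame
            stream.seek(0)
            stream.truncate(0)
    return sizes


print(write_frames(True))   # [36, 36, 36]
print(write_frames(False))  # [36, 72, 108]
```

On a plain `BytesIO`, `truncate(0)` does not move the write position, hence the explicit `seek(0)` in this stand-in. In the tutorial code, the single `rawCapture.truncate(0)` call is what the picamera documentation uses to reset the array between captures.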

Finally, if the user presses the q key, we break from the loop and exit the program.

To execute our script, just open a terminal (making sure you are in the cv virtual environment, of course) and issue the following command:

$ python test_video.py

Below follows an example of me executing the above command:

As you can see, the Raspberry Pi camera’s video stream is being read by OpenCV and then displayed on screen! Furthermore, the Raspberry Pi camera shows no lag when accessing frames at 32 FPS. Granted, we are not doing any processing on the individual frames, but as I’ll show in future blog posts, the Pi 2 can easily keep up 24-32 FPS even when processing each frame.
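
If you want to check the frame rate on your own hardware rather than judging it by eye, a small counter around the loop is enough. This is a minimal sketch, not the method used to produce the numbers above: the class name `FrameRateMeter` and its methods are my own, and the `clock` parameter exists only so the timing source can be swapped out.

```python
import time


class FrameRateMeter:
    """Count processed frames and report the average frames per second."""

    def __init__(self, clock=time.time):
        self._clock = clock
        self._start = None
        self._frames = 0

    def start(self):
        """Record the starting time and reset the frame count."""
        self._start = self._clock()
        self._frames = 0
        return self

    def update(self):
        """Call once per frame, e.g. right after cv2.imshow."""
        self._frames += 1

    def fps(self):
        """Average frames per second since start() was called."""
        elapsed = self._clock() - self._start
        # avoid dividing by zero if fps() is called immediately after start()
        return self._frames / elapsed if elapsed > 0 else 0.0
```

In test_video.py you would create the meter with `meter = FrameRateMeter().start()` just before the `for` loop, call `meter.update()` once per iteration, and print `meter.fps()` after the loop exits. Keep in mind that this measures the whole loop, including the time spent drawing the window, so results over SSH or VNC will be lower than on a directly attached monitor.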

Summary

This article extended our previous tutorial on installing OpenCV and Python on your Raspberry Pi 2 and B+ and covered how to access the Raspberry Pi camera module using Python and OpenCV.

We reviewed two methods to access the camera. The first method allowed us to access a single photo. And the second method allowed us to access the raw video stream from the Raspberry Pi camera module.

In reality, there are many ways to access the Raspberry Pi camera module, as the picamera documentation details. However, the methods detailed in this blog post are used because (1) they are easily compatible with OpenCV and (2) they are quite speedy. There is certainly more than one way to skin this cat, but if you intend on using OpenCV + Python, I would suggest using the code in this article as “boilerplate” for your own applications.

In future blog posts we’ll take these examples and use them to build computer vision systems to detect motion in videos and recognize faces in images.

Be sure to sign up for the PyImageSearch Newsletter to receive updates when new Raspberry Pi and computer vision posts go live. You definitely don’t want to miss them!

Hello Adrian,

Thank you for this new demonstration, which works very well!

I wait for the following episode with impatience, to understand how to capture a detecting motion and tracking a person.

Thank you very much to share with us your experiment.

Christian

Awesome Adrian!

Thanks a lot for this! (and for how cleanly everything is explained).

I’ll make sure to stay tuned.

Fabio Guarini

Hello Adrian,

Thanks for the documentation. But I have a question to Frame rate. In the example above we have a frame rate of 32, but I get only 10 Images per second. Would it be faster to use c/C++ instead?

Dieter

Are you using the Raspberry Pi B/B+ or the Pi 2? You can easily get 32 FPS on the Pi 2. Using C/C++ will almost certainly be faster, but I would first check to ensure that your camera is working properly. It could be possible that the frames are not being read from the camera fast enough.

Hello Adrian:

I thank you for opening the door for many of us beginners to run OpenCV on raspberry.

I just ran “test_video.py” example above, but I am getting about 10 frames per second instead of 32. I am using raspberry Pi 2.

Could I be missing something obvious?

Very best regards,

Razmik Karabed

You can try increasing the FPS by using threading. Also, reducing the frame dimensions from 640 x 480 to 320 x 240 will also dramatically increase your frame rate.

is picamera capable for live video streaming to do face recognition

Face recognition algorithms don’t “care” where the video stream is from, as long as you can read the frames from a stream. Inside the PyImageSearch Gurus course I demonstrate how to do facial recognition using the Raspberry Pi picamera module.

for raspberry pi 3 people should know that “Enable/disable connection to the Raspberry Pi Camera”. is the options you want to click on after you have done sudo raspi-config. Then you will have to enable the camera from there. Different then raspberry pi 2 =]

How did you get X11 to work with OpenCV? Are you using the same `cv2.imshow(“image”, image)` call? or something special? I can’t seem to get it to work with my windows system.

Are you using X11 forwarding when ssh’ing into your Pi? I’m not sure about windows systems, but the command on Unix systems is ssh -X pi@ipaddr. Your code will not have to change at all and you’ll still be able to use cv2.imshow.

I finally got it to work. It was a combination of things I believe. I’ve come to realize that the RasPi sometimes gets into a bad state and needs to be rebooted. Also, I don’t think that I was capturing the `waitkey` correctly and the script was ending too soon.

Another question though. I’ve noticed that using OpenCV doesn’t always get me the native resolution of the camera. Do you know how to get this? This is true when using the built-in Surface Pro 2 camera and my current web cam. Both should be 720p, but I’m getting something in the range of 480. Do you know how to get this to the native resolution?

I’m simply using the `cam = cv2.VideoCapture()` to obtain the device and `(grabbed, image) = cam.read()` to grab the image.

When accessing the camera through the Raspberry Pi, I actually prefer to use the picamera module rather than cv2.VideoCapture. It gives you much more flexibility, including obtaining native resolution. Please see the rest of this blog post for more information on manually setting the resolution of the camera.

`PiCamera` isn’t limited to the native camera? Meaning that I can use that module with any webcam?

It depends. If it’s USB based you might be out of luck. But if your “native” camera can be plugged into the slot in the Pi, then you’re in business (I’m not familiar with the cameras you mentioned previously).

Ok that makes sense. I found this as well. I’ll check it out and see if it works:

http://stackoverflow.com/a/20120262/447015

Thank you very much! This blog is very helpful ! I ‘m looking forward to your new blogs!

Hi Adrian!!

First of all, THANKS A BUNCH MAN!! I understood almost all the proccesses of this tutorial and the previous one, and i had no errors.

But i have a question, I am using a GoPiGo Robot from Dexter industries, and i would like to make the robot follow an object. Using this code, i’ve seen that the capturing of the images goes a little bit slow, so in real time i don’t know if i could get the same faster in order to do the tracking.

Do you have an idea how it could be faster?

And, Do you have any post related with the tracking objects?

Thanks again Adrian!

Hi Dave, I’m not familiar with the GoPiGo, but if the image capturing is slow then you probably want to reduce the FPS and the resolution. I also cover tracking objects inside my book, Practical Python and OpenCV.

Hello Adrian:

First, thanks for the wonderful blogs.

I have a similar problem like Dave. but I’ve been using the Pi camera module. I used your method (test_image.py) to capture the image before processing it, I figured out that the major timing approximately 1.3 sec (for Pi 2) is spending on taking an image. I used the time() function to calculate the time. Also, when I tried to remove the line 12 (time.sleep(0.1)), the quality of a taken image wasn’t good enough for my application. Would you describe more details how to reduce the FPS and resolution in order to decrease the timing for taking an image?

Thank you so much!

Hey John, the resolution of the camera is controlled by Lines 9 and 11. Lower the resolution to 320 x 240, and you should see a substantial pickup. Secondly, I’m not sure if you noticed or not, but I just did a series of blog posts on how to increase the FPS of your Pi using Python and OpenCV. Click here to read more.

Hello Adrian,

Amazing tutorial! Everything works great, except that I’m able to get only 2-3 fps with the code above. I am using the model B+. I have recorded videos with >30 fps using raspivid, so I know that the camera module works just fine. What is it that I am missing?

Thanks,

Max

Hi Max, that is definitely pretty strange, I’m not sure why you would only be getting 2-3 FPS. Maybe try reducing the image resolution and see if that helps?

Adrian,

thank you for these tutorials. i have succesfully completed the first tutorial about the installations, and steps 1-4 of this tutorial. The code in step 5 however, returns: “gtk warning ** cannot open display: :0.0”.

i am have the rpi b+ connected to my television with an hdmi cable. have you, or anyone else, seen and fixed this problem before? when i google this error i only get the suggestion to export the display variable (as i did) but that does not seem to work.

Thank you!

Hey Vincent, are you running the command from the X GUI? It sounds like you’re simply executing the command from the command line (which you absolutely should do), but you need to have your GUI launched. Run startx to load the X interface, then open up a terminal and execute the script.

Adrian,

that fixed it. thank you!

No problem!

Hi,

I understand that is impossible to run a py program with uv4l driver without a GUI ?

I have the same problem ok Gtk-warning ….. My script works fine under X interface.

Thanks

How are you accessing your Raspberry Pi? Via SSH? VNC?

Hi, my problem is same as Vincent, and after I run startx, I got server error, then how to fix it? Anyone can help me? Thank you very much!!!

Hi Adrian,

First of all, a wonderful post. I followed your previous post to install OpenCV and Python in Raspbian without any problem. I’ve just gone through this post and tried both test_image.py and test_video.py without ‘real’ problem as well.

The only issue I have is it seems that I’m not quite getting 32 FPS for my RPi2 at 640×480. I compared the video with raspivid -d and feel that at 640×480, it wasn’t as smooth. On the other hand, if I change the resolution to 480×320, they seems comparable. So, I’m wondering how to get a more precise FPS info for realtime video. Any suggestions?

Thanks

Are you running any other applications on the Pi while trying to capture the video? You should definitely be able to get 20+ FPS at 640 x 480 without a real issue. Also make sure that the camera is connected properly. It’s rare, but I’ve seen situations where the camera connection is a bit loose and while the Pi can see that the camera is there, the actual frame rate drops.

Hi Adrian,

No, just running X and a terminal. The connection to the camera should be fine as I simply change the resolution to 480×320 and it works. Also running raspivid -d without problem. Anyway, in order to quantify it, I need a way to measure the frame rate. May be record it and see from there.

Hi Adrian,

i was using exactly your code with the same peripherals; the only difference is that I am using Raspberry Pi and not Raspberry Pi 2.

As there are more users having problems with the framerate:

Might it be possible that the normal Raspberry Pi is that much worse when it comes to framerate than the new Raspberry Pi 2?

I have a program running where I do some blob detection and get maybe 2-3 frames with a resolution of 640&480.

Also with your code I do not get much more than maybe 5 fps @640&480.

Absolutely. The original Raspberry Pi is much, much slower than the Pi 2. I would recommend upgrading to the Pi 2 if you can, it’s definitely worth it.

Wow – that was an unexpected quick reply – thanks for that!

Since I already have the hardware would it make sense to re-write the code to C in order to receive at least 15 frames?

What would you say?

You’ll likely get some performance gains by dropping down into C, but in reality the previous Pi’s only had one core so there’s only so much performance that you can really gain. If you really want to obtain faster performance, pick up a Pi 2.

Nice blog entry, as always, Adrian!

I was also seeing slow and sluggish frame rate when I was forwarding the video back to my PC via SSH. The frame rate was much better when I setup a VNC server on the Pi2, connected to it from the PC, and then started test_video.py on the VNC’s desktop.

I’m prettty sure I wouldn’t have any issues if I just hooked a monitor to the Pi, but I like the monitor-less configuration better.

FYI, link for VNC setup on Pi that I used: http://elinux.org/RPi_VNC_Server

Absolutely, VNC will increase lag dramatically. The Pi 2 is actually running at a pretty high framerate, but the problem arises when you try to stream the results back to your VNC client.

Hi,

I’ve managed to put your example working. I just need now to flip the image (to then convert the image to HSV and track the brightest spot on the image) and I can’t do that with cv.flip() and cv2.flip() functions.

Can you give me any clue?

Hey Pedro, what do you mean by “flipping” the image? Do you mean flipping the image horizontally or vertically? Or are you trying to convert directly to the HSV color space? If you want to find the brightest spot in an image, you’ll also want to take a look at this post.

Thanks for the reply Adrian.

I want to rotate the image 180º.

About that post, I’ll try that method using the camera to do the processing in live stream.

If you want to rotate the image 180 degrees, you’ll need the cv2.rotate function. I cover the very basic image processing functions in this post as well as in my book, Practical Python and OpenCV.

Thank you very much Adrian.

I found on that post what I needed! And I’ve also managed to found the way to make it spot a laser!

Thank you for all the help, that’s all I needed (I hope!)!

Very nice! What was your approach to spot the laser?

As I found on some articles, the laser spot must be the brightest point on the screen.

But this isn’t so linear to apply on the raspberry pi camera. I have to be careful with the ambient brightness. If the ambient light is too bright, it detects false positives.

Now I’m with another problem: processing speed. My program can only process a frame every 150ms. This is much slow for my application 🙁 I need now to find another faster solution and when I find the resolution for my problems I post them here!

Thank you once again!

Very nice solution Pedro, congrats! And a quick way to obtain faster FPS is to simply downscale the image. Less data to process == faster runtime.

Hello Adrian,

Thanks for this awesome tutorial!!! Everything went smoothly!!!! Now, I just need to figure out how to read letters and numbers from images, taken by the pi camera….do you have a tutorial on reading text from images???

Regards,

M

Hi Marcellus, I actually cover the basics of recognizing digits inside my book, Practical Python and OpenCV + Case Studies. There is a chapter dedicated entirely to recognizing digits that you could absolutely use and modify to your needs.

Thanks!

Hey Adrian!

When i do:

sudo python myfile.py i get this error

ImportError:No module named cv2

and when i do python myfile.py it works correctly. But i need to use opencv with sudo, due to i need to communicate with another extension wich requires super user permission. Is there some way i can do that ?

Thanks again!

Hey Dave, I’ve actually never done this before, but here’s my best guess: If you want to use OpenCV inside a virtual environment for the root user, then you’ll need to switch over to the root user account and repeat steps 7 and 10 for root. I’m not sure if the sudo command will be able to access the root virtual environment once it’s created, you may actually have to switch over to the root account to run the script and ensure it accesses the virtual environment.

Sorry about my ignorance Adrian, but what do you mean by “steps 7 an 10 for root?

Thanks a bunch!

You’ll need to launch a root shell:

$ sudo /bin/bash

and then create your virtual environment and sym-link OpenCV as the root user, which should be in the directory /root.

Thanks Adrian! I’ll try it.

I had the same issue.

You can fix it by changing wich python you use.

like this: sudo /home/pi/.virtualenvs/opencv/bin/python

and change opencv with your environment. This did the trick for me

Hello Adrian!

First of all I’d like to say that you are one of the greatest computer-vision tutors I have seen 🙂

I have a question related to this article: If I want to capture two consecutive frames from a video stream (at every iteration), what is the correct method? I would like to compute some differences between frames for detecting motion.

Best regards!

Thanks for the kind compliment Andrew! 😀

If you want to compare the difference between two frames, you will need two variables: the previous frame and the current frame. Right after Line 20 I would check to see if the previous frame has been initialized or not. If not, initialize it as the current frame. Otherwise, you’ll have the current and previous frame together and you’ll be able to compute the differences between them.

I do have some pretty epic plans to cover motion detection with the Raspberry Pi, so definitely stay tuned!
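
The previous/current bookkeeping described in this reply can be sketched as a small generator. This is only an illustration of the idea, not code from the tutorial: the name `frame_pairs` is hypothetical, and the strings below stand in for real frames.

```python
def frame_pairs(frames):
    """Yield (previous, current) tuples from any iterable of frames."""
    previous = None
    for current in frames:
        if previous is None:
            # first frame: nothing to compare against yet
            previous = current
            continue
        yield previous, current
        previous = current


print(list(frame_pairs(["f0", "f1", "f2"])))  # [('f0', 'f1'), ('f1', 'f2')]
```

With real camera frames, it is probably safest to store a copy of each array (for example `frame.array.copy()`) before clearing the stream, so the previous frame cannot change underneath you. A call such as `cv2.absdiff(previous, current)` is one way to compute the difference between the two.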

Hi Adrian, I’m new with raspberry so I have some questions. After finish your tutorial I can’t import cv2, but if i work out of virtualenv I can import cv2. Do you know what happens?

Please help me.

Hi Pablito, this tutorial actually built on my previous tutorial on installing OpenCV on your Raspberry Pi. Take a look at Step 10 where the OpenCV library is sym-linked into the virtual environment.

You do not have to use virtual environments if you do not want, it’s just good practice.

Hello Adrian! Firstly, excellent tutorial. I enjoyed the use of the virtual environment in the first tutorial and this one did a beautiful job of following on. I have got my RaspiPiCam working using the code you have provided and can take still and motion video. However, I am now lost at the next step in the OpenCV testing process.

When I go to the available OpenCV Samples under the “/opencv-2.4.10/samples/python2” folder and attempt to run them, they do not recognize the RaspiPiCam stream. In particular, they do not like statements like: “try: video_src = video_src[0]” (as found in facedetect.py).

I believe that there is a method to get OpenCV to directly play video from a RaspiPiCam using Python (as found here: http://raspberrypi.stackexchange.com/questions/17068/using-opencv-with-raspicam-and-python), but I can’t get it to work and was wondering if you had a more direct / elegant solution.

I am trying to avoid re-writing all of the existing OpenCV samples simply so that they work with my RaspiPiCam instead of an actual USB cam. Thanks!!

Hi Stephen, I definitely appreciate not wanting to rewrite all of the OpenCV examples as that can be quite time consuming and tedious. If you want to work directly with the Raspberry Pi camera module, you can try installing the uv4l drivers. However, they can also be a pain to install. And more importantly, those drivers are not kernel level drivers — they will run as users threads. This means that they will be a bit slow.

In general, I think you have two options:

1. Update the OpenCV examples to use the Raspberry Pi code that I have detailed above.

2. Purchase a USB camera, like the Logitech C210. All you need to do is plug the camera into the Pi and it should be automatically recognized. And from there you won’t have to change any code in the OpenCV examples.

I know that’s probably not the answer you were hoping for, but there isn’t exactly a clean and elegant solution to this particular problem.

I thought that this might be the case. Thank you very much for the quick response and your thoughts!

No problem, glad to help! Let me know which route you end up going.

Step 5, running headless with putty, fails for me with the message “Gtk-WARNING **: cannot open display”, at line 19. I got it to work using the RasPi desktop over VNC, but only after I had rerun my profile in that environment. Enabling X11 forwarding in the putty configuration did not work in my case.

Hi Joe — I’m sorry to hear that X11 forwarding did not work, that’s very strange. I don’t have a Windows system, and thus no access to Putty, so I can’t give it a shot to replicate the error. But whenever I ssh into my Pi with X11 forwarding from my command line, I can tell you that my command looks like this:

$ ssh pi@my_ip_address

I hope some fellow Windows users on the blog can help out!

Hi Adrian Rosebrock

I am working on a project in which I have to scan QR codes and barcodes using Python. Is there a tutorial for doing that?

thx

Best regards

Hey, thanks for the comment. I don’t have any tutorials related to scanning the actual barcode, but I do have a tutorial on detecting barcodes in images which you may find useful.

Hi Adrian,

I’m having a problem with step 5. I get an error when running ‘python test_image.py’:

—

OpenCV Error: Unspecified error (The function is not implemented. Rebuild the library with Windows, GTK+ 2.x or Carbon support. If you are on Ubuntu or Debian, install libgtk2.0-dev and pkg-config, then re-run cmake or configure script) in cvShowImage, file /home/pi/opencv-2.4.10/modules/highgui/src/window.cpp, line 501

Traceback (most recent call last):

File “test_image.py”, line 19, in

cv2.imshow(“Image”, image)

cv2.error: /home/pi/opencv-2.4.10/modules/highgui/src/window.cpp:501: error: (-2) The function is not implemented. Rebuild the library with Windows, GTK+ 2.x or Carbon support. If you are on Ubuntu or Debian, install libgtk2.0-dev and pkg-config, then re-run cmake or configure script in function cvShowImage

—

All the installation steps worked, so I don’t think this is a problem with that. I think this is an issue with X11 forwarding. I use this command to log in to the pi: ‘ssh -X pi@piaddr’ I’m using a mac and RPi B+. I tried running ‘startx &’ and ‘/etc/X11/Xsession’ but neither worked. How do you get images to display over your ssh connection? Is there any other setup required?

Thanks,

Rafi

Hey Rafi, it looks like you didn’t perform Step 3 of the Raspberry Pi + OpenCV install tutorial. Step 3 involves installing libgtk2.0-dev. Go back to Step 3, install libgtk2.0-dev, and then re-compile and install OpenCV and this should take care of the problem.

I have the same problem.

And I installed libgtk2.0-dev before build and make. So this doesn’t fix the problem.

Try installing libgtk-3-dev as well. Make sure you delete your “build” directory, re-create it, and re-run CMake + make. This has fixed the issue any time I or other readers have encountered your error. In fact, the error posted is the hallmark of OpenCV not being able to build GUI support. Unfortunately, without physical access to your Pi I’m not sure what the particular cause is, but I would double-check the GUI libraries on your machine.
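
If you want to check whether your OpenCV build actually picked up GTK before recompiling, the build report can help. Below is a rough sketch, not an official diagnostic: cv2.getBuildInformation() is a real OpenCV function, but gui_section, has_gtk, and report are names made up for this example, and the parsing assumes the report contains a “GUI:” heading followed by indented lines such as “GTK+ 2.x: YES”, which may differ between OpenCV versions.

```python
def gui_section(build_info):
    """Return the lines listed under the 'GUI:' heading of a build report.

    Assumption: the section ends at the first blank line after the heading.
    """
    section = []
    in_gui = False
    for line in build_info.splitlines():
        stripped = line.strip()
        if stripped.startswith("GUI:"):
            in_gui = True
            continue
        if in_gui:
            if not stripped:  # a blank line closes the section
                break
            section.append(stripped)
    return section


def has_gtk(build_info):
    """True if a GUI line mentions GTK and is marked YES."""
    return any("GTK" in line and "YES" in line
               for line in gui_section(build_info))


def report():
    """Print the GUI section of the installed OpenCV build (needs cv2)."""
    import cv2  # imported lazily so the helpers above work without OpenCV
    info = cv2.getBuildInformation()
    print("\n".join(gui_section(info)))
    print("GTK support: %s" % has_gtk(info))
```

Calling report() inside the cv environment prints the GUI lines. If GTK shows up as NO, the GTK development packages were most likely not found when CMake ran.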

Hello guys, may I ask something? Is it possible for the camera to recognize an object and then convert it into speech? We have a project design that is related to this post. Our project is for blind people, entitled “Audio navigator for blind impaired”. The concept is a wearable device for a blind person; we plan to use the Pi camera to detect motion and objects so that the wearer is aware of what is happening in their environment. For example, when the camera detects a traffic light, it will automatically recognize that it is a traffic light and then the color of the signal (green, orange, or red), which can tell a blind person when he/she can cross a certain road to avoid an accident. We hope this invention will help make their lives easier. Please, can anyone give us ideas? Honestly, my knowledge of making this is very limited, which is why I decided to approach this site. I am only a student. Please forgive my grammar. Thank you, God bless you all.

Converting an image to text (and then to speech) is a pretty challenging project and is still under active research. Both Stanford and Google are currently researching methods for automatic image captioning which captions images via text strings. From there, those text strings can be passed on to speech algorithms. But it’s still an incredibly challenging problem and very much in its infancy.

Very interesting; thanks for this RPi-OpenCV tutorial! I have started doing something similar as you can see here: https://www.raspberrypi.org/forums/viewtopic.php?f=43&t=114550

although I am using OpenCV 3.0.0 instead of 2.4 as I gather you are using. As long as you’re compiling the library yourself, it is just as easy to install 3.0 and it was actually faster to compile than the Pi2 timings you listed for your install.

Doing foreground extraction and blob detection I see only 8 fps at 320×240 resolution on a RPi2, but I have not tried to optimize my algorithms as yet, and that includes reading the h264 input file instead of taking a live feed.

Very nice, thanks for sharing! I’ll actually have OpenCV 3.0 install instructions for the Raspberry Pi 2 online within the next 2 weeks (pretty excited to get them pushed online). Your project sounds great so far, congrats! And 8 FPS for background subtraction using the MOG methods sounds about right.

Hello Adrian,

First off, I want to thank you for these awesome tutorials.

My problem is that when I write test_image.py, and run it I get an error “picamera.exc.PiCameraMMALError: Camera component couldn’t be enabled: Out of resources (other than memory)”. I was wondering if that is an error due to the RPi or with my camera, or with something else?

Hey Neilesh — I have honestly never encountered that error before. That definitely sounds like an error related to your Raspberry Pi or the camera, not OpenCV.

Experienced the same error when I mistakenly used python3 to create the file (after setting up python2 environment)

Hi Movaid, I would suggest checking the common picamera errors page. My guess is that either (1) your Raspberry Pi camera module is not installed properly, (2) you did not enable it via raspi-config, or (3) you did not run rpi-update to download the latest firmware.

The same problem happened to me. Please help.

I had a similar problem. I found that the error occurs because my Pi camera was not released by a previously executed program. For example, if you run this program and close the image window by clicking close in the menu with the mouse instead of pressing a key, you get this error.

Hi Adrian

Do you have a sample code to access multiple camera in a single raspberry pi, I mean attach two to three camera to the same Raspberry pi and read frames from each of them process it and save it ?

Hey Girish, I don’t have any complete examples, but all you need is the cv2.VideoCapture(0) function, where 0 is the first camera, 1 would be the second camera, 2 would be the third, etc. Just maintain a list of capture objects and you’ll be able to access each of the cameras.

Hello Adrian,

Step 5, i get

“Traceback (most recent call last):

File “”, line 1, in

import cv2

ImportError: No module named cv2″

When running import cv2.

Any ideas as to what might be happening and how to overcome this? I have completed your “installing openCV and Python on raspberry pi” tutorial. Thanks for your help.

Josh

It sounds like you are not in the cv virtual environment. Use the workon command to access it before executing any code that accesses OpenCV:

$ workon cv

$ python

>>> import cv2

...
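
A quick way to tell these two situations apart (wrong environment versus a missing cv2 install) is to ask the interpreter directly. This is only a small sketch; in_virtualenv, module_available, and diagnose are hypothetical helper names, and the virtual environment check relies on the sys.real_prefix and sys.base_prefix attributes that virtualenv and venv are known to set.

```python
import sys


def in_virtualenv():
    """True when the interpreter appears to run inside a virtual environment."""
    # Older virtualenv releases set sys.real_prefix; venv and newer
    # virtualenv releases make sys.base_prefix differ from sys.prefix.
    base_prefix = getattr(sys, "base_prefix", sys.prefix)
    return hasattr(sys, "real_prefix") or base_prefix != sys.prefix


def module_available(name):
    """True if the named module can be imported by this interpreter."""
    try:
        __import__(name)
    except ImportError:
        return False
    return True


def diagnose(name="cv2"):
    """Return a one-line summary suitable for printing."""
    return "virtualenv active: %s, %s importable: %s (python: %s)" % (
        in_virtualenv(), name, module_available(name), sys.executable)
```

Running print(diagnose()) once from a fresh shell and once after workon cv shows whether the environment is what makes the difference.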

Hey Adrian !

thaks for this tutorial !

I have a RP 2 with the camera module installed correctly, I think, and I performed all the steps from the OpenCV installation to this tutorial. Everything seems to work fine, but I can’t achieve an FPS above 15 at 640×480 (a simple calculation displayed every 2 sec). Nothing else is running at the same time. I access the Pi through VNC; using direct access (HDMI cable, keyboard, ...) doesn’t seem to provide the desired performance (fps << 32).

I have tried to remove the imshow thinking that displaying images with opencv would lead to performance drop but I've not noticed a significant gain in performance…

I don't think this is about the exposure, but I haven't tested it in very bright conditions.

Should I deactivate automatic exposure? (Is it even possible?)

I know you have answered several questions like this, but I can't understand why I can't achieve the 32 fps that you do 🙁

do you have any idea ?

thanks again for your time and tutorials which are excellent !

The first way you can increase FPS is to simply reduce your image size. One way you might be able to boost performance is to take a look at the V4L2 drivers for the Pi. I personally haven’t had much luck with them, but I know others that have. The V4L2 drivers can (theoretically) improve your frame rate and let you use the cv2.VideoCapture function rather than the picamera module, which should improve the FPS a little bit.

Hi again,

If OpenCV can only work with images at around 15 fps (I don’t know why, but let’s say so),

do you think it is possible to have a high frame rate display, with another thread processing the frames and giving back results as an overlay?

thanks in advance !! 🙂

Absolutely! It’s very common to dedicate one thread to grabbing frames from the camera device and then handing them off to the thread that is doing the actual processing of the images. This ensures that the main thread is not delayed by the polling of the camera.
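
To make the idea concrete, here is a minimal sketch of the one-thread-grabs, one-thread-processes pattern using only the standard library. FrameGrabber is a name made up for this example, and read_frame stands in for whatever call returns a frame in your script (an assumption, since it depends on whether you use picamera or cv2.VideoCapture).

```python
import threading


class FrameGrabber(object):
    """Poll a frame source on a background thread and keep the newest frame."""

    def __init__(self, read_frame):
        # read_frame: hypothetical zero-argument callable returning one frame
        self._read_frame = read_frame
        self._lock = threading.Lock()
        self._latest = None
        self._stopped = threading.Event()
        self._thread = threading.Thread(target=self._run)
        self._thread.daemon = True  # do not block interpreter exit

    def start(self):
        self._thread.start()
        return self

    def _run(self):
        # Keep reading until stop() is called; older frames are overwritten.
        while not self._stopped.is_set():
            frame = self._read_frame()
            with self._lock:
                self._latest = frame

    def latest(self):
        """Return the most recent frame, or None if nothing was read yet."""
        with self._lock:
            return self._latest

    def stop(self):
        self._stopped.set()
        self._thread.join()
```

The processing loop then calls latest() whenever it is ready for a new frame instead of waiting on the camera itself, so a slow processing step only skips frames rather than stalling the capture.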

Hi,

Compliments for your article.

Do you think is it possible to build up an head counter with raspberry pi?

I have a shop and would like to count people coming in and going out from the shop door placing a camera over the door.

Tks

Hey Pippo, thanks for the comment. And yes, it’s absolutely possible to build a head counter to count people with the Raspberry Pi. I think you might like this particular post on motion detection and tracking to get you started.

So far so good. I have tried other ways to get OpenCV on my Pi using the Pi cam, and have wasted some serious time. Dude, you totally hooked it up. Thank you so much. Have you thought of doing an automatic pan and tilt face follow with an Arduino and a couple of servos?

Hey Tyrone, thanks for the awesome suggestion, I’ll definitely look into it!

hai adrian, thank you for your tutorial

Why is it that when I use cv2.absdiff with the picamera array as an argument, I always get an error? The error says

“size of input do not match (the operation is neither ‘array op array’ (where arrays have same size and the same number of channels), nor ‘array op scalar’, nor ‘scalar op array’) in arithm_op….”

I am using the same resolution for the parameters passed to cv2.absdiff, so why do I have this problem? Can you help me?

When you take the difference between two images, they need to be the same size (in terms of width and height) and have the same number of channels. Either the two images you are trying to compare do not have the same width and height and/or one is grayscale and the other is RGB. Make sure all the dimensions match before using the cv2.absdiff function.

This is failing to run with an import error “No module named cv2”

Any ideas?

This is on a fresh install of Raspbian, OpenCV / Python 2.74 (using your instructions), and I am in the cv virtual environment when running the code.

thanks

FIXED: Really strange… when I left the machine last night, I had completed all steps of the install CV and Python including the last step which tested it. I noticed earlier today that the .profile change made last night was no longer there (which I fixed) by repeating step 7. I then repeated step 10 to fix this problem of cv2 not being defined. If there is anything I am missing about the virtual environment, and things I need to add elsewhere in Unix, please let me know. Thanks

You’re the second person in the past 72 hours who has mentioned that their changes to the .profile file disappeared (in the other person’s case, it was after a reboot). That is really strange behavior, and I’m honestly not sure about that one. If you don’t mind, could you post on the official Raspberry Pi forums and see if they have any suggestions? I would love to know why the updates are being overwritten.

Hi, how can I get the time for each frame using cv.GetCaptureProperty() in the code mentioned above for capturing the video stream?

Since we’re using the picamera module, you won’t be able to access any other properties associated with the camera like you would in OpenCV. You can dump an image to file directly with the timestamp included; otherwise, just use the datetime module to grab the current time as the frame is read.

Hi Adrian

Thank you very much! for This blog is very good.

Thanks Rafael! 😀

Hi adrian,

I am Ganesh from India. I am going to make a security system based on the Raspberry Pi, interfacing a camera and storing data on a memory card. Please suggest how I should start this project. Which procedure should I follow?

Hey Ganesh, you might want to try my tutorial on building a home surveillance and motion detection with the Raspberry Pi, Python, OpenCV, and Dropbox.

Hi, Adrian. Thanks for your guidance over Raspberry PI. But I have a question here. After the Raspberry Pi camera module has been installed, how could I actually open up the terminal as shown in Step 2? Is that necessary to use Linux platform? Thanks for your prompt reply, much appreciated.

There are many ways to open up a terminal using the Pi, but I would suggest going through the official Raspberry Pi documentation for more info on launching a terminal.

Hi Adrian, before I purchase the course I wanted to know if it includes a way to keep the camera live all the time without recording and take a picture when a hand gesture is detected. Is this possible? Thank you so much, I’m starting to become a big fan of the website.

The course itself does not include a method to perform hand gesture recognition, but that is something that I hope to cover in the future. In the meantime, it is covered inside the PyImageSearch Gurus course.

I just claimed my spot. So I’m guessing i would have to wait for the next course to start in order for me to learn how to keep the camera feed live all the time ?

You certainly don’t have to join the course if you don’t want to. I was simply saying that I haven’t had a chance to cover hand gesture recognition on the PyImageSearch blog yet, but it will be covered inside the PyImageSearch Gurus course.

Leaving your webcam feed running all the time is pretty easy. Just SSH into your Pi. Launch screen. Execute your script. Detach from your screen session (Ctrl-A, then D). Then log out of your Pi. Your script will run without you having to be attached to the Pi.

Hi Adrian, I have an error when I try to run the code of test_video.py, which is

TypeError: 'float' object is not iterable

Can you help me and thank for this post

What line of code is throwing that error?

Hey, Adrian! Thank you for this post, it’s wonderful.

I got an error while trying to execute test_image.py.

File "test_image.py", line 18, in

cv2.imshow("Image", image)

NameError: name 'image' is not defined

Can you help me with this problem?

Thanks

If you’re getting an error related to the image not being defined, then I would go back to the test script and check that the line image = rawCapture.array is present and that its output is a valid image. It could be that the Raspberry Pi camera itself is not configured properly.

Hi

I am new to the Raspberry Pi, and I installed OpenCV and Python on my Raspberry Pi. Now I want to take an image with my Pi camera using OpenCV. I followed your steps carefully, but when I run $ python test_image.py, I see the light on the camera turn on as if it wants to take a picture, but it doesn’t take one! This is the warning that is displayed:

(Image:2312):Gtk-WARNING**:cannot open display:

How can I solve this error ??? plz help me as soon as possible 🙁

Please see my response to Kronos and Joe Landau above.

Dear Adrian,

First of all, thank you very much for your great support on both how to install OpenCV on the RP and how to use picamera. In the past I tried some other methods from other web sites for installing OpenCV, but I was not successful; with your support I finally did it.

Anyway, now I am able to run the Python OpenCV examples with a USB camera, but after installing the Pi camera using pip install picamera “array”

I am having an issue. When I try your example, the Python compiler says there is no array method in picamera. What is wrong? Could you please help? Thank you.

I can see two things that might have gone wrong here. First, make sure you are in your virtual environment when installing picamera[array].

Lastly, make sure you have quotes around “picamera[array]” when you install it:

$ pip install "picamera[array]"

A+ on a Pi 2. thanks Adrian

Nice, I’m glad it worked for you Michael! 🙂

Hi Adrian, this is some great work to get started with the picamera. However, I would like to clarify on the use of this particular line (#22) on video capture

> key = cv2.waitKey(1) & 0xFF

What’s the importance of this part?

If you use cv2.imshow without using cv2.waitKey, then your window will show up and then disappear immediately. The cv2.waitKey function allows the window to stay open and optionally grabs the key that is pressed.

Hi Adrian,

This is a detailed, well written and nicely explained tutorial. Thank you very much for sharing it.

The only suggestion I would have is to add something on X11 forwarding. It may even be a link to your favorite blog that goes over how to do it. I had to take a little detour to get this working to see the images and the stream. I used Xming and Putty following the instructions from here: http://laptops.eng.uci.edu/instructional-computing/incoming-students/using-linux/how-to-configure-xming-putty

I am looking forward to all the possibilities and interesting projects using CV.

-Sidd

Hey Sidd, thanks for the note on X11 forwarding. Once you have X11 installed (whether on OSX or Linux), it can be done using a simple command:

$ ssh -X pi@pi_ip_address

On OSX, you’ll need to download and install XQuartz first.

The only issue with X11 forwarding is that it can be a bit slow for streaming the results back from a webcam/video device.

I have a raspberry pi and HDMI monitor but do not have the keyboard. Is it possible for me to log in to Pi using SSH and forward display to HDMI monitor?

Currently, I am able to forward X11 to my Windows laptop using Xming and Putty, but video streaming is very slow and I want to use the Raspberry Pi HDMI output on a monitor.

Streaming frames over a network will be slower than natively displaying them to an attached monitor. There are ways to speed up the process (i.e., gstreamer) but overall, if you want minimal lag you should be viewing the frames on a monitor attached to the Pi.

Just the one a newbie needs. Perfect. Keep it up.

I enjoyed following the steps and for a change something from the net works as it is described.

I’m glad the tutorial helped! 🙂

Hi everybody,

i installed Opencv 3.0, Python 3.2.3 on my Raspi2 (followed Adrians nice tutorial…).

When I start test_image.py I get an error as soon I move around the mouse over the Image Window:

GLib-GObject-WARNING**: Attempt to add property GtkSettings::gtk-label-select-on-focus after class was initialised.

I don´t ssh to my raspi…I use the HDMI Output.

When I google the error it looks like I´m not the only one – but I´m definetly one of the Noobs who don´t know how to solve it 😉

Can anybody help me with this?

@Adrian..really nice work you are doing here. Nice tutorials and blog post. Keep it up!

Stephan the Kraut

Hey Stephan, I’ve run into that GTK warning myself. I’ve installed OpenCV on hundreds of systems, but it only seems to happen on the Raspberry Pi. I’m honestly not sure what causes it, but it’s clearly from the GTK library. It doesn’t affect OpenCV at all, other than the warning message being displayed to the terminal, which can be a bit annoying.

Had the same exact problem. Did you find a solution? I want to believe it could be a bad connection, but upon trying the test_image.py the ole pi cam is working for sure just not the video stream.

Hi

I would like to know if the face recognition method can be able to identify a person through comparing it with another photo taken before??

Thanks

Absolutely. In fact, that’s how most face recognition algorithms such as Eigenfaces and LBPs for face recognition are trained. Both are covered inside the PyImageSearch Gurus course.

Hello Adrian,

I’m developing a UAV (rover) , using also your code for item recognition.

It works fine. THanks for your great job.

I’d now like to live stream the results (captured images + the circle).

How can I pass those result to my web server?

(I’m using tornado)

BR

Oscar

Hey Oscar — there are a number of ways to pass the results to your web server. Inside this post I show how to develop a computer vision web API that images can be uploaded to. I use Django for this project (not Tornado), but the principles are still the same.

Thanks for the lesson sir, now I can access my camera on raspberry pi. hehe….

Thank you so much for this! I am using it for my design project, and we fried our Pi and had to start over. It worked perfectly the first time, so now we must do it again. But now we are getting a GTK warning, and I have read that I need to install GTK, then re-compile and install OpenCV. Thank YOU!!!!

Are you getting an error or a warning related to GTK? If you’re getting an error about being unable to open the display, then I assume you’re SSH’ing into your Pi. You’ll need to enable X11 forwarding:

$ ssh -X pi@ip_address

Many thanks for such a great article. I started to work with the Pi v1 some time back but found that it was too slow for my processing needs. However, seeing that the camera has been better integrated into Python makes it a lot easier.

One thing that has always been a issue re OpenCV and SimpleCV is Blobs. Hopefully the newer OpenCV has made it easier.

Many thanks

Marc

Hey Marc, are you referring to the blob detector that comes with OpenCV? If so, I can try to write a blog post on it in the future to help clarify things.

Hi Adrian, Thanks for the article. Its so well explained. Followed the steps and was able to get the image and video working with Opencv.. Keep it up. 🙂

Thanks Ragu! 🙂

Hi, one week ago there was no error, but now this is happening:

pi@raspberrypi ~ $ raspistill -o output.jpg

mmal: No data received from sensor. Check all connections, including the Sunny one on the camera board

I’m not sure about that one. I would (1) make sure that the camera is enabled via raspi-config (just in case it somehow got turned off) and (2) double and triple check the connections on your board. If you’re still getting an error, you might need to post on the official Raspberry Pi forums.

Thanks for the tutorial, Sir. I kindly wish to know if it will be possible to get the camera running automatically when my Raspberry Pi starts: any script file which could execute the profile, workon, and subsequently get the camera working. I will really be grateful if you could help me out.

2) How could i get dropbox to send me a notification anytime a picture is added into it

3) what do i have to change if im to upload the images on some server, later to be retrieved via an app?

To get a Python script automatically running when your Raspberry Pi starts, I would suggest using crontab. You can specify to run a shell script on reboot. Inside this shell script you should put the source, workon, and python commands to run your script.

As for Dropbox sending you a notification, I’m not sure what you mean by that. Total disclaimer: I am not a Dropbox developer and this was the first time I used their API. I would suggest posting on the Dropbox Forum.

Finally, you should look into using the pysftp package for uploading to a server.

Thank you for the tutorial, very clear.

But I’m facing an issue while running the command: python test_image.py (Step 5):

(Image:1448): Gtk-WARNING **: cannot open display:

Any help, please?

Please see my reply to Joe Landau above.

Can you explain me how i create a QR-Code reader with this?

I don’t cover how to read a QR code on this blog, but I demonstrate how to detect barcodes in video streams. I’ve never personally tried it, but I’ve heard that zbar is a good library for reading barcodes.

Hi Adrian,

GOOD WORK MAN!! Worked with me perfect.

Now I want to change the settings of the camera programmatically. Settings like brightness, saturation, contrast, exposure, etc. Can you help me with this?

Thanks

I would suggest taking a look at the picamera documentation. The page linked to demonstrates how to adjust brightness. Similar examples can be found throughout the docs.
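
As a rough illustration of what that looks like in code, the picamera camera object exposes settings such as brightness, contrast, saturation, and sharpness as plain attributes. The helper below is only a sketch: apply_settings and RANGES are names invented for this example, and the ranges are the ones I understand the picamera documentation to list, so double-check them against the docs for your version.

```python
# Value ranges as I understand them from the picamera documentation
# (an assumption worth verifying for your installed version).
RANGES = {
    "brightness": (0, 100),
    "contrast": (-100, 100),
    "saturation": (-100, 100),
    "sharpness": (-100, 100),
}


def apply_settings(camera, **settings):
    """Validate and set image attributes on a camera-like object."""
    for name, value in settings.items():
        if name not in RANGES:
            raise ValueError("unsupported setting: %s" % name)
        low, high = RANGES[name]
        if not low <= value <= high:
            raise ValueError("%s must be between %d and %d" % (name, low, high))
        setattr(camera, name, value)
    return camera


# On the Pi (not run here, since it needs the camera hardware):
#   from picamera import PiCamera
#   camera = PiCamera()
#   apply_settings(camera, brightness=60, contrast=10)
```

Exposure is handled through separate attributes (exposure_mode and exposure_compensation in the picamera docs), which this sketch leaves out.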

Hello Adrian,

First I want to thank you for all your great tutorials, they have been a great resource. Next, I have installed OpenCV and Python on my raspberry pi 2 successfully. I then followed this tutorial and was able to run all the way through both step 5 and step 6 successfully the first time around. Because they worked as planned I set my work aside for about a week. When I returned and tried to run the same scripts in the virtual environment without making any changes I get the same GTK warning as those previous comments. The window opened the first time and now the window will not open. I am not running my pi through ssh but rather the HDMI port. My question is then, are there any updates or additional libraries needed to fix this warning? Your help is greatly appreciated.

All the best

Which GTK warning are you getting? An error related to the display being unable to open? Or the one related to the gtk-label-select? If it’s the latter, you can ignore this warning. I’m not sure why it happens, but it seems to be Raspberry Pi specific and it will not impact your usage of OpenCV. If it’s the former, then make sure you have launched the Raspberry Pi desktop and are not trying to execute it via the command line that the Pi boots into.

As for the image opening a first time, but not a second, that is very, very strange behavior and not something I have encountered before.

Hello!

First of all Adrian Rosebrock thank you very much for a great tutorial.

I have tried the live display code above and it works fine, but if I increase the resolution to something like 1296×972 the FPS drops a lot. There is a big difference compared with the raspivid live display.

For now I’m not doing any image processing.

Is there any solution to increase the fps to like 25 (resolution > 640×480) so that the stream will be smooth? I need these for kind of live magnification. And maybe I will have to draw a line on every image so that’s why I would like to use OpenCV and Python instead of raspivid.

Realistically, if you want to obtain ~25 FPS, your images will need to be smaller than 640 x 480. The larger your resolution becomes, the more data there is, and hence the processing rate will drop. I personally haven’t tried this, but you might want to install the V4L2 drivers so you can access the Raspberry Pi camera module via the cv2.VideoCapture function and see if FPS rates improve.

Thank you very much for your answer.

Yesterday I found that I can make an overlay if I use picamera preview. So I can easily draw a line on a live stream and it works great. That’s all I need for now.

I will take a look at v4l2 driver if I ever need to make some processing on a stream.

Interesting. How do you draw on the picamera preview? I haven’t seen that done before.

Hey Adrian,

I have a Raspberry Pi Model B+ and I was successful setting up the picamera to get an image up through step #5, but when it’s time to get a video stream in step #6, the Python code runs and I can clearly see that the LED on the camera is on, but I am not seeing the window come up with a video stream of myself. What am I doing wrong? Maybe my FPS shouldn’t be the same as the one in your tutorial, but I already tried lowering it and it does the same thing.

How are you accessing your Pi? Are you ssh’ing into your Pi or using VNC?

i need to detect circles in video with c++ using raspicam and hough transform

Hey Maria, I actually cover circle detection in this blog post, but I only have Python code, not C++. I hope that helps point you in the right direction at least.

Hi Adrian

first of all i want to thank you for this very useful tutorial.

I want to ask about step 4, where I’m trying to install picamera.

How long should I wait for the installation? When the installation reaches “Running setup.py bdist_wheel for numpy . . . -” it stays there for a very long time with no further progress... (sorry for my bad English) Thank you!

For a Raspberry Pi 2, the installation can take 15-20 minutes. For a model B, it can take anywhere from 45-60 minutes. In either case, you’ll likely want to go make a cup of coffee or go for a long walk while NumPy installs 🙂

Dear Adrian,

first of all, thanks for the great tutorial.

Is it possible to show the frame in fullscreen without any border at the top?

Or to show the frame at a specified coordinate? It always shows up in the bottom left corner.

Thank you.

Nico

I don’t think it’s possible to show the frame “fullscreen” with OpenCV. The GUI functions included with OpenCV are meant to be barebones and used for debugging and building simple GUI-based projects. For more advanced GUI operations, I suggest using either Tkinter or Qt.

As for placing the frame in a specified coordinate, yes, you can actually accomplish that using the cv2.moveWindow function:

cv2.moveWindow("WindowName", x, y)

Hi, I need to increase the FPS to 60. I’m using the camera board with raspicam

my code in c++

i tried using raspiCamCvSetCaptureProperty(capture,CV_CAP_PROP8_FPS,60)

but no effect 🙁

I don’t have any C++ code on this blog, but I would encourage you to read this blog post on increasing the FPS processing rate of your video pipeline.

Hello Adrian,

Could you recommend a way to save the video stream to the working directory in order to play it later?

Thanks in advance!

Sure, there are two blog posts I recommend for writing video to file:

Hi Adrian,

I did your previous tutorial to install OpenCV and did everything here up to step 5 to try to display the image with the test_image.py script.

I’m in cv environment and when I type:

python test_image.py

I get this error message:

(Image:31875): Gtk-WARNING **: cannot open display:

And I obviously don’t see the image. Any idea about my problem?

Thanks. JP

It sounds like you’re SSH’ing into your Pi. Please see my reply to Kronos above. You need to enable X11 forwarding in your SSH command:

$ ssh -X pi@your_ip_address

Hi, thank you for opening a new door for me to get to know the Pi. I am a student in China. I have a question: when I try to run “sudo apt-get install libgtk2.0-dev”, it doesn’t work. I am sure I’m using the new “source.list”. How can I use the Pi B+ to install the OpenCV environment?

Please help me figure it out! Many thanks!

PS: Is it because this “software” is out of date?

PS2: I can’t buy your book in China! PITY!

Hey Neal, I’m sorry to hear about the issue with the book in China. Send me an email, perhaps we can figure out a workaround. As for the libgtk2.0-dev issue, what is the error message you are getting?

Hey Adrian,

I’m measuring the read rates for capture_continuous and it looks like every three or four frames, it takes significantly longer to generate a frame.

The read times look like this.

Frame 1: .02 (Seconds)

Frame 2: .03 (Seconds)

Frame 3: .02 (Seconds)

Frame 4: .11 (Seconds)

It’s happening in a somewhat regular pattern (every third/fourth frame takes 3/4 times as long ).

Any idea what’s going on or is that the expected behavior of capture_continuous.

Kav

Very interesting, I can’t say I’ve ever encountered that before (or measured it). You might want to try posting on the picamera GitHub to see if they know anything about it. I would also encourage you to try using threading to facilitate faster frame reads as well.
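For anyone who wants to reproduce the measurement Kav describes, here is a minimal sketch, assuming Python 3.4+. The helper names time_reads and slow_frames are made up for this illustration and are not part of picamera. The first times how long each frame takes to arrive from any iterable of frames, and the second flags reads that took much longer than the median.

```python
import time
from statistics import median


def time_reads(frames, limit):
    """Return the seconds spent waiting for each of the first `limit` frames."""
    durations = []
    iterator = iter(frames)
    for _ in range(limit):
        start = time.perf_counter()
        try:
            next(iterator)
        except StopIteration:
            break
        durations.append(time.perf_counter() - start)
    return durations


def slow_frames(durations, factor=3.0):
    """Return indexes of reads that took more than `factor` times the median."""
    if not durations:
        return []
    threshold = factor * median(durations)
    return [i for i, d in enumerate(durations) if d > threshold]


# The read times reported in the comment above, in seconds.
reported = [0.02, 0.03, 0.02, 0.11]
print(slow_frames(reported))  # → [3]
```

On the Pi, `frames` would be a generator wrapped around capture_continuous that also clears the capture buffer between frames, as the tutorial's video loop does.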

Hi Adrian .Thanks for the tutorial.I will ask how can I make a real time face recognition on raspberry pi over this tutorial or do you have another tutorial for this ?I will be very happy if you help me ,thanks.

I don’t have any real-time face recognition tutorials publicly available; however, I do cover it in detail inside PyImageSearch Gurus.

Was wondering if it is possible to run 2x Pi Cameras from the same Raspberry Pi?

I know there is only a single camera connector, but could a second be added via the GPIO pins or could the camera be chained together?

If a second Pi is required, what is the maximum length of the cable? Does it have to be a ribbon cable or are round cables available?

Thanks for your time.

I’ve seen various hacks online that have chained together up to 4 Pi Cameras, but in general, I don’t recommend this. Instead, I would just connect multiple USB cameras. It’s much easier this way 🙂

Hi Adrian

I am trying to install to raspberry pi camera module since last year

What I did is;

– source ~/.profile

– workon cv

– pip install “picamera[array]”

Finally I wrote the test_image.py code to test, but there is no any luck ever to run the code

It gives me the error

ImportError: No module named array

I guess I am the only one who is able to use raspberry pi camera

Any help will be appreciated

Thanks

Murat Gozu

It definitely sounds like picamera did not install correctly. Try manually typing in the pip install "picamera[array]" command to ensure there are no formatting issues during the copy and paste.

Hi Adrian,

Everything has been great and is working up to step 5. I have copied the code for capturing an image and saved it etc.

Running ‘python testimage.py’ from the terminal executes the code correctly.

However, when I run it from the python shell in IDLE3, i get the error:

Traceback (most recent call last):

File “/home/pi/.testimage.py”, line 6, in

import cv2

ImportError: No module named ‘cv2’

I have run the source ~/.profile and workon cv commands in the terminal before opening IDLE3.

I guess i’m lacking a little understanding about environments.

Is this behaving correctly?

If so, what is the advantage to running the program from the terminal, as opposed to from the IDLE3 shell? Because to me, it just feels more natural to press F5 from the actual program.

Thanks!

Michael

I presume you’re using the GUI version of IDLE? If so, that’s the problem. Unfortunately, IDLE does not respect Python virtual environments like the command line does. I would suggest either using the command line version of IDLE or, better yet, something like IPython Notebooks.
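A quick way to see which interpreter a shell or IDE is really using is to ask Python itself. The sketch below relies only on the standard library. The helper name in_virtualenv is made up for this illustration. Running it from both the terminal (after workon cv) and from IDLE should show whether the two are using the same environment.

```python
import sys


def in_virtualenv():
    """Return True when the running interpreter belongs to a virtual environment."""
    # Older virtualenv releases set sys.real_prefix; venv and newer
    # virtualenv releases make sys.base_prefix differ from sys.prefix.
    if hasattr(sys, "real_prefix"):
        return True
    return getattr(sys, "base_prefix", sys.prefix) != sys.prefix


print("interpreter:", sys.executable)
print("inside a virtual environment:", in_virtualenv())
```

If the interpreter path printed from IDLE does not point inside the cv environment, the cv2 import error is expected.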

Hi Adrian,

When I run my code I’m getting error as

from picamera.array import PiRGBArray

ImportError : No module named picamera.array

What would be the reason?

Thanks.

Angel Jenifer

Make sure you have installed the picamera[array] library:

Hi Adrian,

I have the same issue above, though definitely installed “picamera[array]”, and now says already satisfied, but still same error when running in virt_env.

“no module named picamera.array”?

It looks to me that the issue is that picamera[array] is automatically installing to python3 when I have opencv3 installed for python2.

I either get no picamera, or no cv2 module? I can’t get the 2 to work together on python2. Even running:

$ sudo pip2 install “picamera[array]” says already satisfied, though I know it is not?

It sounds like you are not installing the picamera[array] library into your Python 2.7 virtual environment where OpenCV 3 is installed. Can you run pip freeze from your virtual environment and ensure that picamera is listed?

Yeah so i only had to run it with workon cv. Now it works.

Congrats on resolving the issue Stefan!
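For readers hitting the same "No module named picamera.array" error, one possible check is to ask the active interpreter which of the required modules it can actually import. This is only a sketch. The helper name import_problems is made up for this illustration, and it catches every exception on purpose so that it reports a failure instead of stopping at the first one.

```python
import importlib


def import_problems(names):
    """Map each module name that fails to import to a short error description."""
    problems = {}
    for name in names:
        try:
            importlib.import_module(name)
        except Exception as exc:  # report any failure, not only ImportError
            problems[name] = "{}: {}".format(type(exc).__name__, exc)
    return problems


# Run this inside the environment you intend to use (e.g. after `workon cv`).
for module, error in import_problems(["cv2", "picamera", "picamera.array"]).items():
    print(module, "->", error)
```

If cv2 imports in one environment and picamera only in another, the two were installed for different interpreters.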

Hey Adrian!

First of all, thank you for all your tutorials, I have managed to resolve almost all my issues using your guides. However, I am running into an error when trying to install picamera[array].

Within the virtual environment, when I try to install with the above command, I run into a “BadZipFile” error. When I try installing then with “sudo pip”, it says it is successfully installed but when I try to import the library it says that there is no module named picamera.array.

I have uninstalled and installed several times and after installation even if I only do it within the virtual environment, I can only import the picamera module outside of the virtualenv. Do you (or does anyone) know how to resolve this issue?

Thank you

It sounds like when you tried to install the picamera module inside your virtual environment there was a problem with your internet connection. Uninstall picamera from your virtual environment and then use this command to install:

$ pip install "picamera[array]" --no-cache-dir

This should ensure that a new .zip file of the module is downloaded.

Thanks a lot for nice guidance and suggestions.

My question is; in recording video, is it possible to change the “frame rate” and the “frame size”? I mean, for example, first I choose the frame rate =32 and size=(640,480), then after some time I change the frame rate =45 and size (320,240), but all in one program. is it possible?

If it is possible, then can I store the output video in one file? I mean, some portion of output file has different frame rate and size with other portion. is it possible?

If I understand your question correctly, you want to store one frame size at a given FPS for a period of time and then later store a different frame size at a different FPS? If so, no, that’s not possible. You can adjust the frame size simply by making your frame larger/smaller to fit the original output dimensions — but you cannot adjust the FPS. You would need to create two separate output files.

thank you.

you mean for changing FPS, I have to make another output file. But I can use only one code. In that code, if I change the FPS, then i store the images in another output file.

When I change the FPS or frame size, No need to reboot the raspberry, or no need to use another code, am I right?

Correct, you can use the same code. But if you want to change FPS or frame dimensions, you should create a separate output file.
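As a rough sketch of the "one script, separate output files" idea, assuming OpenCV 3+ (where cv2.VideoWriter_fourcc is available): the helper names segment_path and open_writer, the "output" base name, and the MJPG codec are all choices made for this illustration, not something taken from the tutorial.

```python
def segment_path(base, index, fps, size):
    """Build a distinct file name for each (fps, size) segment."""
    width, height = size
    return "{}_{:02d}_{}x{}_{}fps.avi".format(base, index, width, height, fps)


def open_writer(path, fps, size):
    """Open a cv2.VideoWriter for one segment (assumes OpenCV 3+)."""
    import cv2  # imported here so the naming helper works without OpenCV

    fourcc = cv2.VideoWriter_fourcc(*"MJPG")
    return cv2.VideoWriter(path, fourcc, fps, size)


# Hypothetical recording plan: the two settings from the question above.
plan = [(32, (640, 480)), (45, (320, 240))]
paths = [segment_path("output", i, fps, size) for i, (fps, size) in enumerate(plan)]
print(paths)  # → ['output_00_640x480_32fps.avi', 'output_01_320x240_45fps.avi']
```

In the capture loop, the current writer would be released with writer.release() and the next one opened whenever the FPS or frame size changes.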

Hello Adrian, Thank you for the lesson, very helpful.

I can successfully run the test_image.py script but when I run the test_video.py script I get no error but the image is black. I am using the Raspberry Pi 3 board and the 8 MP Ver2.1 camera.

I’m not sure what you mean by “image is black” — but the code should still work with the newer v2 camera module.

The video image output to the monitor (via HDMI) is solid black. However, if I test the camera using a raspivid command, like: “raspivid -t 5000 -o” the video image is as expected.

I also tested with the V1 pi camera with same results. I tried a better power supply (2 Amp) with same results. I reduced fps and image size with same results.

That is very strange. Can you confirm which version of picamera you are using? I’ve heard there are some issues with the latest 1.11 release.

I found the fix to my Black Video issue and I hope you will add this simple step to your lesson. After setting up RP3/OpenCV using Install guide: Raspberry Pi 3 + Raspbian Jessie + OpenCV 3, simply reboot the RP before trying to execute any CV related code. Following reboot, the black video image issue goes away. I repeated the complete install twice with the same results, on the third try I rebooted before executing opencv related code and all is well.

Hey Jim, congrats on resolving the issue. To be honest, I’ve never encountered it before. It’s a good tip for anyone else who runs into this, but it sounds like something very specific to your setup.

Hi Adrian, thanks for all the lessons.

I also have the same Issue as the other Jim. When I run the test_video.py script I get a small window appear called Frame but the window is just black, no video appearing. Im running on a Pi 3 using the first gen camera. The LED on the front of the camera turns red as normal. any ideas? no error messages are output to the terminal window and the script exits when the Q key is pressed.

Im using a Raspian installation as detailed in your lesson for installing PI 3 with open CV, and im in the CV virtual env.

thanks

Jim

Hi Jim — please see my comment to “red” below to see the resolution to this problem. Also, I assume you’re using Python 2.7? Or are you using Python 3?

perfect! that fixed it, many thanks Adrian

Now for the cool stuff

Nice, glad to hear it 🙂

Hey Adrian,

I’m running a Raspberry Pi 3 B with the new 8 MP PiCamera. I have installed and am successfully running the (cv) environment. I got the document scanner to work with your example images and with images I have previously saved, but would now like to integrate my PiCamera into the scanner and skin detection programs (and others).

I’ve downloaded the source files, but am running into trouble at “Step 5: Accessing a single image of your Raspberry Pi using Python and OpenCV.” When I run test_example.py, it gets hung up on line 18, camera.capture(rawCapture, format="bgr"). It gives me the following error: “TypeError: startswith first arg must be bytes or a tuple of bytes, not str”. Please let me know if you’ve got any insight into what could be going wrong or if you need more information.

Thanks in advance, I really appreciate all of these tutorials.

Man, I’ve been getting a lot of emails regarding this. Can you please confirm what version of picamera you’re running? I have a bad feeling that it might be the latest 1.11 version which, if you look at the GitHub issues, is having a ton of problems. Luckily, I think the fix is simply to downgrade to a previous version. Please let me know which picamera version you’re running so I can confirm this (I’m traveling right now and don’t have physical access to my Pi).

Hi Adrian,

Thanks so much for the response. I ran pip list while in the (cv) virtual environment, and it appears you were right. My picamera is version (1.11). What is the easiest way for me to downgrade to 1.10?

Please see my reply to “red” above.

Adrian, thank you for sharing your knowledge. I also had the aforementioned issue and wanted to let you know that it worked fine once I downgraded picamera to 1.10 as suggested.

Thank you!

Thanks for letting me know Felipe, I appreciate it!

It might help to uninstall picamera “sudo apt-get remove ” (python-picamera or python3-picamera [or both]) and then do a pip install… http://picamera.readthedocs.io/en/release-1.11/install.html#alternate-distro-installation

Away from my pi unfortunately but the “error” I get is something like gttk: lense focus was initialized and my frame is pitch black no picture for python test_video.py and I am in the source~/.profile

workon cv

python

I have a feeling that you’re using picamera v1.11 and Python 2.7. Try downgrading to picamera v1.10 and this should resolve the blank/black frame issue:

There are some issues with the most recent version of picamera that are causing a bunch of problems for Python 2.7 and Python 3 users.
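To confirm which picamera version is installed in the active environment, pip list or pip freeze works, and the same check can be done from Python. The sketch below uses a helper named installed_version, which is made up for this illustration. It relies on importlib.metadata where available (Python 3.8+) and falls back to pkg_resources from setuptools on older interpreters.

```python
def installed_version(package):
    """Return the installed version string of `package`, or None if absent."""
    try:
        from importlib import metadata  # Python 3.8+
    except ImportError:
        metadata = None

    if metadata is not None:
        try:
            return metadata.version(package)
        except metadata.PackageNotFoundError:
            return None

    # Fallback for older interpreters: pkg_resources ships with setuptools.
    import pkg_resources

    try:
        return pkg_resources.get_distribution(package).version
    except pkg_resources.DistributionNotFound:
        return None


print("picamera:", installed_version("picamera"))
```

A result of None means the package is not installed for the interpreter that ran the script, which is worth ruling out before downgrading.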

Same problem here. I found that if I set the format to ‘rgb’, the sample will display the stream, but in the wrong colors. However, I did modify the sample so that a camera.capture occurs on the exit, and this file is saved as RGB with no issues on displaying the correct colors when I do an imshow on the resulting file..

Hey Paul — thanks for sharing. I’ll write an updated blog post that details some of the common errors in this comment thread.

Downgrading picamera worked for me!

Congrats on resolving the issue, Ben!

I was using picamera v1.13 and had the same black screen problem. This fixed it thanks!

Congrats on resolving the issue, Jake!

Hello Adrian,

Thanks for all the tutorials. It’s been really helpful. This tutorial worked great through vnc, and everything run fine. However, when I try to run test_image.py through terminal I get the error, “(Image:2063): Gtk-WARNING **: cannot open display:”. I tried to ssh in using -x like you recommended to a previous commenter, but it didn’t fix the error. Do you know any way to resolve this issue?

Thanks 🙂

The “X” should be capitalized, like this:

$ ssh -X pi@your_ip_address

From there, try running the