So you’re interested in deep learning and Convolutional Neural Networks. But where do you start? Which library do you use? There are just so many!

Inside this blog post, I detail 9 of my favorite Python deep learning libraries.

This list is by no means exhaustive; it’s simply a list of libraries that I’ve used in my computer vision career and found particularly useful at one time or another.

Some of these libraries I use more than others — specifically, Keras, mxnet, and sklearn-theano.

Others, I use indirectly, such as Theano and TensorFlow (which libraries like Keras, deepy, and Blocks build upon).

And still others I use only for very specific tasks (such as nolearn and its Deep Belief Network implementation).

The goal of this blog post is to introduce you to these libraries. I encourage you to read up on each of them individually to determine which one will work best for your particular situation.

My Top 9 Favorite Python Deep Learning Libraries

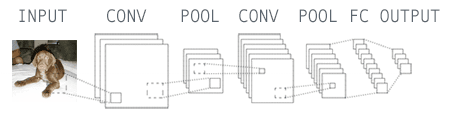

Again, this list is by no means exhaustive. Furthermore, since I am a computer vision researcher who actively works in the field, many of these libraries have a strong focus on Convolutional Neural Networks (CNNs).

I’ve organized this list of deep learning libraries into three parts.

The first part details popular libraries that you may already be familiar with. For each of these libraries, I provide a very general, high-level overview. I then detail some of my likes and dislikes about each library, along with a few appropriate use cases.

The second part dives into my personal favorite deep learning libraries that I use heavily on a regular basis (HINT: Keras, mxnet, and sklearn-theano).

Finally, I provide a “bonus” section for libraries that I have either (1) not used in a long time but still think you may find useful, or (2) not tried yet but that look interesting.

Let’s go ahead and dive in!

For starters:

1. Caffe

It’s pretty much impossible to mention “deep learning libraries” without bringing up Caffe. In fact, since you’re on this page right now reading up on deep learning libraries, I’m willing to bet that you’ve already heard of Caffe.

So, what is Caffe exactly?

Caffe is a deep learning framework developed by the Berkeley Vision and Learning Center (BVLC). It’s modular. Extremely fast. And it’s used by academics and industry in state-of-the-art applications.

In fact, if you were to go through the most recent deep learning publications (that also provide source code), you would more than likely find Caffe models on their associated GitHub repositories.

While Caffe itself isn’t a Python library, it does provide bindings into the Python programming language. We typically use these bindings when actually deploying our network in the wild.

The reason I’ve included Caffe in this list is that it’s used nearly everywhere. You define your model architecture and solver methods in plaintext, JSON-like configuration files with a .prototxt extension. The Caffe binaries take these .prototxt files and train your network. After Caffe is done training, you can take your network and classify new images via the Caffe binaries, or better yet, through the Python or MATLAB APIs.

While I love Caffe for its performance (it can process 60 million images per day on a K40 GPU), I don’t like it as much as Keras or mxnet.
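To put that throughput figure in perspective, here is a quick back-of-the-envelope calculation. It simply assumes the 60 million images are spread evenly across a 24-hour day; it says nothing about batch size, model architecture, or whether the figure refers to training or inference.

```python
# Back-of-the-envelope check of the quoted figure for a single K40 GPU
IMAGES_PER_DAY = 60_000_000
SECONDS_PER_DAY = 24 * 60 * 60          # 86,400 seconds

images_per_second = IMAGES_PER_DAY / SECONDS_PER_DAY
ms_per_image = 1000 / images_per_second  # average time budget per image

print(f"{images_per_second:.0f} images/sec")  # 694 images/sec
print(f"{ms_per_image:.2f} ms/image")         # 1.44 ms/image
```

In other words, sustaining that rate leaves roughly a millisecond and a half per image, on average.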

The main reason I prefer Keras and mxnet is that constructing an architecture inside the .prototxt files can become quite tedious and tiresome. More to the point, tuning hyperparameters with Caffe cannot be (easily) done programmatically! For these two reasons, I tend to lean towards libraries that allow me to implement the end-to-end network (including cross-validation and hyperparameter tuning) in a Python-based API.
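One partial workaround is to treat the .prototxt files as templates and generate them from Python. The sketch below writes one solver file per hyperparameter combination using standard Caffe solver fields (`base_lr`, `momentum`, `weight_decay`, `lr_policy`, and so on). The field values, the `write_solver_grid` helper, and the `train_val.prototxt` network path are all hypothetical placeholders, and you still have to launch and compare the runs yourself, which is exactly the friction described above.

```python
import tempfile
from pathlib import Path

# Caffe solver fields; the values and the net path are illustrative placeholders.
SOLVER_TEMPLATE = """net: "{net}"
base_lr: {base_lr}
momentum: 0.9
weight_decay: {weight_decay}
lr_policy: "step"
gamma: 0.1
stepsize: 10000
max_iter: 50000
snapshot: 5000
snapshot_prefix: "{prefix}"
solver_mode: GPU
"""


def write_solver_grid(out_dir, net_path, learning_rates, weight_decays):
    """Write one solver .prototxt per (base_lr, weight_decay) combination."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for lr in learning_rates:
        for wd in weight_decays:
            name = f"solver_lr{lr}_wd{wd}"
            text = SOLVER_TEMPLATE.format(
                net=net_path, base_lr=lr, weight_decay=wd,
                prefix=f"snapshots/{name}",
            )
            path = out_dir / f"{name}.prototxt"
            path.write_text(text)
            paths.append(path)
    return paths


# "train_val.prototxt" is a hypothetical network definition file.
paths = write_solver_grid(tempfile.mkdtemp(), "train_val.prototxt",
                          learning_rates=[0.01, 0.001], weight_decays=[0.0005])
for p in paths:
    # Each file could then be handed to: caffe train --solver=<file>
    print(p.name)
```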

2. Theano

Let me start by saying that Theano is beautiful. Without Theano, we wouldn’t have anywhere near the number of deep learning libraries (specifically in Python) that we do today. In the same way that we couldn’t have SciPy, scikit-learn, and scikit-image without NumPy, we couldn’t have many of today’s higher-level deep learning abstractions without Theano.

At the very core, Theano is a Python library used to define, optimize, and evaluate mathematical expressions involving multi-dimensional arrays. Theano accomplishes this via tight integration with NumPy and transparent use of the GPU.
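To make the "define, then evaluate" idea concrete, here is a toy symbolic expression graph in plain Python. It is not Theano’s API (in Theano you would declare symbolic variables, differentiate with `theano.tensor.grad`, and compile with `theano.function`), and it skips everything that makes Theano fast, such as graph optimization and GPU code generation. It only shows the core idea: build an expression once, derive its gradient symbolically, then evaluate both on concrete numbers. `compile_function` is a hypothetical name chosen for this sketch.

```python
class Expr:
    """Base class for a node in a toy symbolic expression graph."""
    def __add__(self, other):
        return Add(self, lift(other))
    def __radd__(self, other):
        return Add(lift(other), self)
    def __mul__(self, other):
        return Mul(self, lift(other))
    def __rmul__(self, other):
        return Mul(lift(other), self)


class Const(Expr):
    def __init__(self, value):
        self.value = value
    def eval(self, env):
        return self.value
    def grad(self, wrt):
        return Const(0.0)          # d(constant)/d(anything) = 0


class Var(Expr):
    def __init__(self, name):
        self.name = name
    def eval(self, env):
        return env[self.name]      # value supplied at call time
    def grad(self, wrt):
        return Const(1.0 if self is wrt else 0.0)


class Add(Expr):
    def __init__(self, a, b):
        self.a, self.b = a, b
    def eval(self, env):
        return self.a.eval(env) + self.b.eval(env)
    def grad(self, wrt):
        return self.a.grad(wrt) + self.b.grad(wrt)   # sum rule


class Mul(Expr):
    def __init__(self, a, b):
        self.a, self.b = a, b
    def eval(self, env):
        return self.a.eval(env) * self.b.eval(env)
    def grad(self, wrt):
        # Product rule, returned as a new symbolic expression
        return self.a.grad(wrt) * self.b + self.a * self.b.grad(wrt)


def lift(x):
    """Wrap plain numbers as constants so they can join the graph."""
    return x if isinstance(x, Expr) else Const(float(x))


def compile_function(inputs, output):
    """Turn a symbolic expression into an ordinary Python callable."""
    names = [v.name for v in inputs]
    return lambda *args: output.eval(dict(zip(names, args)))


# Define the graph once: a squared-error style loss for y = w*x + b
x, w, b = Var("x"), Var("w"), Var("b")
y = w * x + b
loss = y * y
loss_fn = compile_function([x, w, b], loss)
grad_fn = compile_function([x, w, b], loss.grad(w))   # symbolic d(loss)/dw

print(loss_fn(2.0, 3.0, 1.0))  # 49.0
print(grad_fn(2.0, 3.0, 1.0))  # 28.0
```

The gradient here is itself an expression graph, which is the property that lets a library like Theano optimize and reuse it rather than recomputing derivatives by hand.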

While you can build deep learning networks in Theano, I tend to think of Theano as the building blocks for neural networks, in the same way that NumPy serves as the building blocks for scientific computing. In fact, most of the libraries I mention in this blog post wrap around Theano to make it more convenient and accessible.

Don’t get me wrong, I love Theano — I just don’t like writing code in Theano.

While not a perfect comparison, building a Convolutional Neural Network in Theano is like writing a custom Support Vector Machine (SVM) in native Python with only a sprinkle of NumPy.

Can you do it?

Sure, absolutely.

Is it worth your time and effort?

Eh, maybe. It depends on how low-level you want to go (or how low-level your application requires you to go).

Personally, I’d rather use a library like Keras that wraps Theano into a more user-friendly API, in the same way that scikit-learn makes it easier to work with machine learning algorithms.

3. TensorFlow

Similar to Theano, TensorFlow is an open source library for numerical computation using data flow graphs (which is all that a Neural Network really is). Originally developed by the researchers on the Google Brain Team within Google’s Machine Intelligence research organization, the library has since been open sourced and made available to the general public.
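The "data flow graph" view is easy to demonstrate without TensorFlow itself. The sketch below (plain Python 3.9+, using the standard library’s `graphlib`) describes a single sigmoid neuron as a graph of named nodes and evaluates it in dependency order. It illustrates the concept only and is not TensorFlow code; a real framework also decides which device runs each node, and nodes with no dependency between them (here, the three inputs) could be computed in parallel, which is part of why this representation lends itself to distributed execution.

```python
import math
from graphlib import TopologicalSorter

# Each node: (operation, names of input nodes). Inputs have no operation.
graph = {
    "x":   (None, []),
    "w":   (None, []),
    "b":   (None, []),
    "wx":  (lambda w, x: w * x, ["w", "x"]),
    "z":   (lambda wx, b: wx + b, ["wx", "b"]),
    "out": (lambda z: 1.0 / (1.0 + math.exp(-z)), ["z"]),   # sigmoid
}


def run(graph, feeds):
    """Evaluate every node after all of its inputs have been computed."""
    deps = {name: set(inputs) for name, (_, inputs) in graph.items()}
    values = {}
    for name in TopologicalSorter(deps).static_order():
        op, inputs = graph[name]
        if op is None:
            values[name] = feeds[name]          # placeholder fed by the caller
        else:
            values[name] = op(*(values[i] for i in inputs))
    return values


values = run(graph, {"x": 2.0, "w": 0.5, "b": -1.0})
print(values["z"])    # 0.0
print(values["out"])  # 0.5
```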

A primary benefit of TensorFlow (as compared to Theano) is distributed computing, particularly among multiple GPUs (although this is something Theano is working on).

Other than swapping out the Keras backend to use TensorFlow (rather than Theano), I don’t have much experience with the TensorFlow library. Over the next few months, I expect this to change, however.

4. Lasagne

Lasagne is a lightweight library used to construct and train networks in Theano. The key term here is lightweight: it is not meant to be a heavy wrapper around Theano like Keras is. While this leads to more verbose code, it frees you from restrictive abstractions while still giving you modular building blocks based on Theano.

Simply put: Lasagne functions as a happy medium between the low-level programming of Theano and the higher-level abstractions of Keras.

My Go-To’s:

5. Keras

If I had to pick a favorite deep learning Python library, it would be hard for me to pick between Keras and mxnet — but in the end, I think Keras might win out.

Really, I can’t say enough good things about Keras.

Keras is a minimalist, modular neural network library that can use either Theano or TensorFlow as a backend. The primary motivation behind Keras is that you should be able to experiment fast and go from idea to result as quickly as possible.

Architecting networks in Keras feels easy and natural. It includes some of the latest state-of-the-art algorithms for optimizers (Adam, RMSProp), normalization (BatchNorm), and activation layers (PReLU, ELU, LeakyReLU).
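For readers who haven’t met some of these names before, the sketch below writes out two of the activations (LeakyReLU and ELU) and the Adam update rule on scalars in plain Python. This is not Keras code; Keras ships its own implementations. The `alpha` defaults are illustrative, `beta1`, `beta2`, and `eps` follow the values suggested in the Adam paper, and the learning rate of 0.1 is an arbitrary choice for this toy problem.

```python
import math


def leaky_relu(x, alpha=0.01):
    """Pass positives through; scale negatives by a small fixed slope."""
    return x if x > 0 else alpha * x


def elu(x, alpha=1.0):
    """Exponential Linear Unit: smooth, saturating curve for negatives."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

# PReLU has the same shape as leaky_relu, except that alpha is a
# parameter learned during training rather than a fixed constant.


def adam_minimize(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a 1-D function with the Adam update rule."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g        # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g    # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)           # bias-correct the early estimates
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


print(leaky_relu(-2.0), elu(-2.0))
# Minimize f(x) = (x - 3)^2, whose gradient is 2(x - 3)
x_star = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_star, 3))
```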

Keras also places a heavy focus on Convolutional Neural Networks, something very near to my heart. Whether this was done intentionally or unintentionally, I think this is extremely valuable from a computer vision perspective.

More to the point, you can easily construct both sequence-based networks (where the inputs flow linearly through the network) and graph-based networks (where inputs can “skip” certain layers, only to be concatenated later). This makes implementing more complex network architectures such as GoogLeNet and SqueezeNet much easier.
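The difference between the two styles can be sketched with plain Python functions standing in for layers. This is a toy with hand-picked, untrained weights, not Keras code: `sequential` chains layers one after another, while `concat_branches` (a hypothetical helper) runs several branches on the same input and concatenates their outputs, which is the pattern behind the Inception-style modules in GoogLeNet. The identity branch plays the role of an input that "skips" the other layers.

```python
def dense(weights, bias):
    """Fully connected layer with fixed (not trained) weights."""
    def layer(x):
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(weights, bias)]
    return layer


def relu(x):
    return [max(0.0, v) for v in x]


def identity(x):
    return list(x)


def sequential(*layers):
    """Sequence-style model: the output of each layer feeds the next."""
    def model(x):
        for layer in layers:
            x = layer(x)
        return x
    return model


def concat_branches(*branches):
    """Graph-style block: run branches on the same input, concatenate results."""
    def block(x):
        out = []
        for branch in branches:
            out.extend(branch(x))
        return out
    return block


branch_a = sequential(dense([[1, 0], [0, 1]], [0, 0]), relu)   # 2 outputs
branch_b = dense([[1, 1]], [-1])                                 # 1 output
model = sequential(
    concat_branches(branch_a, branch_b, identity),  # identity acts as a skip path
    dense([[1, 1, 1, 1, 1]], [0]),                  # head sees all 5 features
)
print(model([1.0, 2.0]))  # [8.0]
```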

My only problem with Keras is that it does not support multi-GPU environments for training a network in parallel. This may or may not be a deal breaker for you.

If I want to train a network as fast as possible, then I’ll likely use mxnet. But if I’m tuning hyperparameters, I’m likely to set up four independent experiments with Keras (one on each of my Titan X GPUs) and evaluate the results.
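One common way to run that kind of one-experiment-per-GPU sweep is to pin each training process to a single card with the `CUDA_VISIBLE_DEVICES` environment variable. In the sketch below, `train.py` and its `--lr`/`--batch_size` flags are hypothetical stand-ins for your own training script; the code only builds the command lines and environments, and launching them (for example with `subprocess.Popen(args, env=env)`) is left to you.

```python
import itertools
import os
import sys

NUM_GPUS = 4  # e.g., one process per Titan X card


def plan_experiments(grid, num_gpus=NUM_GPUS):
    """Expand a hyperparameter grid and pin each run to one GPU (round-robin)."""
    configs = [dict(zip(grid, values)) for values in itertools.product(*grid.values())]
    jobs = []
    for i, config in enumerate(configs):
        gpu = i % num_gpus
        # The training process will only "see" this one GPU.
        env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu))
        # "train.py" and its flags are hypothetical placeholders for your own script.
        args = [sys.executable, "train.py"] + [f"--{k}={v}" for k, v in config.items()]
        jobs.append((gpu, args, env))
    return jobs


jobs = plan_experiments({"lr": [0.01, 0.001], "batch_size": [32, 64]})
for gpu, args, env in jobs:
    print(f"GPU {gpu}:", " ".join(args[1:]))
    # To actually launch: subprocess.Popen(args, env=env)
```

Round-robin assignment is a simplification: with more configurations than GPUs, a real runner would wait for a card to free up rather than stack several jobs on it.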

6. mxnet

My second favorite deep learning Python library (again, with a focus on training image classification networks) would undoubtedly be mxnet. While it can take a bit more code to stand up a network in mxnet, what it does give you is an incredible number of language bindings (C++, Python, R, JavaScript, etc.).

The mxnet library really shines for distributed computing, allowing you to train your network across multiple CPU/GPU machines, and even in AWS, Azure, and YARN clusters.
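The core idea behind data-parallel training, one common way of spreading a single network across several GPUs or machines, fits in a few lines: split each batch into shards, compute a gradient on every shard, and average those gradients (weighted by shard size) before updating the shared weights. The toy below fits `y = w * x` in plain Python and simulates the workers sequentially. It is not mxnet code; mxnet handles the real synchronization through its key-value store (KVStore), whose API is not shown here.

```python
def gradient(w, batch):
    """Mean-squared-error gradient for the model y = w * x on one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def shard(batch, num_workers):
    """Split a batch into num_workers interleaved shards (one per device)."""
    return [batch[i::num_workers] for i in range(num_workers)]


def data_parallel_step(w, batch, num_workers, lr):
    shards = shard(batch, num_workers)
    # In a real system each shard's gradient is computed on its own GPU/machine.
    grads = [gradient(w, s) for s in shards]
    # Size-weighted average of shard gradients == gradient of the full batch.
    avg_grad = sum(len(s) * g for s, g in zip(shards, grads)) / len(batch)
    return w - lr * avg_grad


batch = [(float(x), 3.0 * x) for x in range(1, 9)]   # true weight is 3.0
w = 0.0
for _ in range(50):
    w = data_parallel_step(w, batch, num_workers=4, lr=0.01)
print(round(w, 4))  # 3.0
```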

Again, it takes a little more code to get an experiment up and running in mxnet (as compared to Keras), but if you’re looking to distribute training across multiple GPUs or systems, I would use mxnet.

7. sklearn-theano

There are times when you don’t need to train a Convolutional Neural Network end-to-end. Instead, you need to treat the CNN as a feature extractor. This is especially useful in situations where you don’t have enough data to train a full CNN from scratch. In that case, just pass your input images through a popular pre-trained architecture such as OverFeat, AlexNet, VGGNet, or GoogLeNet, and extract features from the fully connected (FC) layers (or whichever layer you decide to use).

In short, this is exactly what sklearn-theano allows you to do. You can’t train a model from scratch with it — but it’s fantastic for treating networks as feature extractors. I tend to use this library as my first stop when evaluating whether a particular problem is suitable for deep learning or not.
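The workflow looks roughly like the sketch below: push every image through a frozen network, keep one layer’s activations as a feature vector, and train a lightweight classifier on those vectors. This is not sklearn-theano’s API. To keep it self-contained, `extract_features` is a hypothetical stand-in that returns two hand-crafted statistics rather than real CNN activations, and a nearest-centroid classifier replaces the linear SVM or logistic regression model you would more typically train on top of the features.

```python
import math


def extract_features(image):
    """HYPOTHETICAL stand-in for a frozen, pre-trained CNN.

    A real pipeline would return activations from an FC layer (often thousands
    of values); here we return two simple statistics so the example runs anywhere.
    """
    pixels = [p for row in image for p in row]
    return [sum(pixels) / len(pixels), max(pixels) - min(pixels)]


def fit_centroids(features, labels):
    """Train a nearest-centroid classifier: average feature vector per class."""
    sums, counts = {}, {}
    for f, label in zip(features, labels):
        acc = sums.setdefault(label, [0.0] * len(f))
        for i, value in enumerate(f):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, feature):
    """Assign the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], feature))


train_images = [
    [[0.1, 0.2], [0.1, 0.0]],
    [[0.0, 0.1], [0.2, 0.1]],
    [[0.9, 1.0], [0.8, 0.9]],
    [[1.0, 0.9], [0.9, 0.8]],
]
train_labels = ["dark", "dark", "bright", "bright"]

# The "network" is never trained; only the small classifier on top is.
centroids = fit_centroids([extract_features(im) for im in train_images], train_labels)
print(predict(centroids, extract_features([[0.85, 0.95], [0.9, 1.0]])))  # bright
```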

8. nolearn

I’ve used nolearn a few times already on the PyImageSearch blog, mainly when performing some initial GPU experiments on my MacBook Pro and performing deep learning on an Amazon EC2 GPU instance.

While Keras wraps Theano and TensorFlow into a more user-friendly API, nolearn does the same — only for Lasagne. Furthermore, all code in nolearn is compatible with scikit-learn, a huge bonus in my book.
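Being scikit-learn compatible mostly means following its estimator conventions: a `fit(X, y)` method that returns `self`, a `predict(X)` method, hyperparameters set in `__init__`, and fitted attributes named with a trailing underscore. The sketch below shows two hypothetical toy classifiers that follow those conventions, plus one evaluation function that works with either, which is the kind of interchangeability that lets a scikit-learn-compatible network drop into existing scikit-learn code. It imports neither scikit-learn nor nolearn.

```python
from collections import Counter


class MajorityClassifier:
    """Hypothetical baseline: always predicts the most common training label."""

    def fit(self, X, y):
        self.label_ = Counter(y).most_common(1)[0][0]  # fitted attrs end in "_"
        return self                                    # fit() returns self

    def predict(self, X):
        return [self.label_ for _ in X]


class ThresholdClassifier:
    """Hypothetical 1-feature classifier: threshold halfway between class means."""

    def __init__(self, feature=0):
        self.feature = feature  # hyperparameters are set in __init__

    def fit(self, X, y):
        pos = [x[self.feature] for x, t in zip(X, y) if t == 1]
        neg = [x[self.feature] for x, t in zip(X, y) if t == 0]
        self.threshold_ = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
        return self

    def predict(self, X):
        return [1 if x[self.feature] > self.threshold_ else 0 for x in X]


def accuracy(model, X_train, y_train, X_test, y_test):
    """Works with any object exposing fit()/predict(), whatever is inside it."""
    predictions = model.fit(X_train, y_train).predict(X_test)
    return sum(p == t for p, t in zip(predictions, y_test)) / len(y_test)


X_train, y_train = [[0.1], [0.2], [0.3], [0.8], [0.9]], [0, 0, 0, 1, 1]
X_test, y_test = [[0.15], [0.85], [0.7]], [0, 1, 1]
scores = {type(m).__name__: accuracy(m, X_train, y_train, X_test, y_test)
          for m in (MajorityClassifier(), ThresholdClassifier())}
print(scores)
```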

I personally don’t use nolearn for Convolutional Neural Networks (CNNs), although you certainly could (I prefer Keras and mxnet for CNNs) — I mainly use nolearn for its implementation of Deep Belief Networks (DBNs).

9. DIGITS

Alright, you got me.

DIGITS isn’t a true deep learning library (although it is written in Python). DIGITS (Deep Learning GPU Training System) is actually a web application used for training deep learning models in Caffe (I suppose you could hack the source code to work with a backend other than Caffe, but that sounds like a nightmare).

If you’ve ever worked with Caffe before, then you know it can be quite tedious to define your .prototxt files, generate your image dataset, run your network, and babysit your network training all via your terminal. DIGITS aims to fix this by allowing you to do most of these tasks in your browser.

Furthermore, the user interface is excellent, providing you with valuable statistics and graphs as your model trains. I also like that you can easily visualize activation layers of the network for various inputs. Finally, if you have a specific image that you would like to test, you can either upload the image to your DIGITS server or enter the URL of the image and your Caffe model will automatically classify the image and display the result in your browser. Pretty neat!

BONUS:

10. Blocks

I’ll be honest: I’ve never used Blocks before, although I do want to give it a try (which is why I’m including it in this list). Like many of the other libraries in this list, Blocks builds on top of Theano, exposing a much more user-friendly API.

11. deepy

If you were to guess which library deepy wraps around, what would your guess be?

That’s right, it’s Theano.

I remember using deepy a while ago (around its first few commits), but I haven’t touched it in a good 6-8 months. I plan on giving it another try in future blog posts.

12. pylearn2

I feel compelled to include pylearn2 in this list for historical reasons, even though I don’t actively use it anymore. Pylearn2 is a general machine learning library (similar to scikit-learn in that respect), but it also includes implementations of deep learning algorithms.

The biggest concern I have with pylearn2 is that (as of this writing), it does not have an active developer. Because of this, I’m hesitant to recommend pylearn2 over more maintained and active libraries such as Keras and mxnet.

13. Deeplearning4j

This is supposed to be a Python-based list, but I thought I would include Deeplearning4j in here, mainly out of the immense respect I have for what they are doing — building an open source, distributed deep learning library for the JVM.

If you work in enterprise, you likely have a basement full of servers you use for Hadoop and MapReduce. Maybe you’re still using these machines. Maybe you’re not.

But what if you could use these same machines to apply deep learning?

It turns out you can — you just need Deeplearning4j.

Summary

In this blog post, I reviewed some of my favorite libraries for deep learning and Convolutional Neural Networks. This list was by no means exhaustive and was certainly biased towards deep learning libraries that focus on computer vision and Convolutional Neural Networks.

All that said, I do think this is a great list to utilize if you’re just getting started in the deep learning field and looking for a library to try out.

In my personal opinion, I find it hard to beat Keras and mxnet. The Keras library sits on top of computational powerhouses such as Theano and TensorFlow, allowing you to construct deep learning architectures in remarkably few lines of Python code.

And while it may take a bit more code to construct and train a network with mxnet, you gain the ability to distribute training across multiple GPUs easily and efficiently. If you’re in a multi-GPU system/environment and want to leverage this environment to its full capacity, then definitely give mxnet a try.