What if we could build a real-life Pokedex?

You know, just like Ash Ketchum — point your Pokedex at a Pokemon (or in this case, snap a photo of a Pokemon), identify it, and get its stats.

While this idea has its roots in the Pokemon TV show, I’m going to show you how to make it a reality.


Step 2: Scraping our Pokemon Database

Before we can even start building our Pokemon search engine, we need to gather the data. This post is dedicated to exactly that: scraping and building our Pokemon database. I’ve structured it as a Python web scraping tutorial; by the time you finish reading, you’ll be scraping the web with Python like a pro.

Our Data Source

I ended up deciding to scrape Pokemon DB because it has some of the highest-quality sprites that are easily accessible. Its HTML is also nicely formatted, which made it easy to download the Pokemon sprite images.

However, I cheated a little bit and copied and pasted the relevant portion of the webpage into a plaintext file. Here is a sample of some of the HTML:

```html
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n2"></i></span><span class="infocard-data"><a href="/sprites/ivysaur" class="ent-name">Ivysaur</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n3"></i></span><span class="infocard-data"><a href="/sprites/venusaur" class="ent-name">Venusaur</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n4"></i></span><span class="infocard-data"><a href="/sprites/charmander" class="ent-name">Charmander</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n5"></i></span><span class="infocard-data"><a href="/sprites/charmeleon" class="ent-name">Charmeleon</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n6"></i></span><span class="infocard-data"><a href="/sprites/charizard" class="ent-name">Charizard</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n7"></i></span><span class="infocard-data"><a href="/sprites/squirtle" class="ent-name">Squirtle</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n8"></i></span><span class="infocard-data"><a href="/sprites/wartortle" class="ent-name">Wartortle</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n9"></i></span><span class="infocard-data"><a href="/sprites/blastoise" class="ent-name">Blastoise</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n10"></i></span><span class="infocard-data"><a href="/sprites/caterpie" class="ent-name">Caterpie</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n11"></i></span><span class="infocard-data"><a href="/sprites/metapod" class="ent-name">Metapod</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n12"></i></span><span class="infocard-data"><a href="/sprites/butterfree" class="ent-name">Butterfree</a></span></span>
<span class="infocard"><span class="infocard-img"><i class="pki" data-sprite="pkiAll n13"></i></span><span class="infocard-data"><a href="/sprites/weedle" class="ent-name">Weedle</a></span></span>
...
```

You can download the full HTML file using the form at the bottom of this post.

Scraping and Downloading

Now that we have our raw HTML, we need to parse it and download the sprite for each Pokemon.

I’m a big fan of lots of examples, lots of code, so let’s jump right in and figure out how we are going to do this:

```python
# import the necessary packages
from bs4 import BeautifulSoup
import argparse
import requests

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-p", "--pokemon-list", required=True,
    help="Path to where the raw Pokemon HTML file resides")
ap.add_argument("-s", "--sprites", required=True,
    help="Path where the sprites will be stored")
args = vars(ap.parse_args())
```

Lines 2-4 handle importing the packages we will be using. We’ll use BeautifulSoup to parse our HTML and requests to download the Pokemon images. Finally, argparse is used to parse our command line arguments.

To install BeautifulSoup (and requests, if you don’t already have it), simply use pip:

$ pip install beautifulsoup4 requests

Then, on Lines 7-12 we parse our command line arguments. The switch --pokemon-list is the path to our HTML file that we are going to parse, while --sprites is the path to the directory where our Pokemon sprites will be downloaded and stored.
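One detail that is easy to miss: argparse derives each option’s destination name from its long flag, replacing dashes with underscores, which is why the script later reads args["pokemon_list"] rather than args["pokemon-list"]. The minimal sketch below wraps the same two switches in a hypothetical build_parser() helper and parses an explicit argument list instead of the real command line, so the resulting dictionary is easy to inspect.

```python
import argparse


def build_parser():
    # the same two switches as the scraper; argparse turns "--pokemon-list"
    # into the destination name "pokemon_list"
    ap = argparse.ArgumentParser()
    ap.add_argument("-p", "--pokemon-list", required=True,
        help="Path to where the raw Pokemon HTML file resides")
    ap.add_argument("-s", "--sprites", required=True,
        help="Path where the sprites will be stored")
    return ap


# parse a hard-coded list instead of sys.argv so the result is easy to see
args = vars(build_parser().parse_args(
    ["--pokemon-list", "pokemon_list.html", "--sprites", "sprites"]))
print(args)  # {'pokemon_list': 'pokemon_list.html', 'sprites': 'sprites'}
```

Because both switches are marked required, leaving either one off makes argparse print a usage message and exit with status 2, so the scraper fails fast instead of running with a missing path.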

Now, let’s extract the Pokemon names from the HTML file:

```python
# construct the soup and initialize the list of pokemon
# names
soup = BeautifulSoup(open(args["pokemon_list"]).read(), "html.parser")
names = []

# loop over all link elements
for link in soup.find_all("a"):
    # update the list of pokemon names
    names.append(link.text)
```

On Line 16 we use BeautifulSoup to parse our HTML: we simply load our HTML file off disk and pass its contents into the constructor, and BeautifulSoup takes care of the rest. Line 17 then initializes the list that stores our Pokemon names.

Then, we start to loop over all link elements on Line 20. The href attribute of each link points to the page for a specific Pokemon. However, we do not need to follow these links. Instead, we just grab the inner text of each element, which contains the name of our Pokemon.
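The loop above keeps the text of every <a> element, which works because the pasted HTML contains nothing but infocards. If you paste a larger chunk of the page, unrelated navigation links would end up in names as well. The sketch below restricts the search to anchors carrying the ent-name class seen in the sample HTML. It deliberately swaps in Python’s built-in html.parser module instead of BeautifulSoup so it runs without third-party packages; the class name EntNameCollector and the sample string (including its navigation link) are hypothetical.

```python
from html.parser import HTMLParser


class EntNameCollector(HTMLParser):
    """Collect the text of <a class="ent-name"> links (a stdlib stand-in for BeautifulSoup)."""

    def __init__(self):
        super().__init__()
        self.names = []
        self._in_name_link = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None
        classes = (dict(attrs).get("class") or "").split()
        if tag == "a" and "ent-name" in classes:
            self._in_name_link = True
            self.names.append("")

    def handle_endtag(self, tag):
        if tag == "a":
            self._in_name_link = False

    def handle_data(self, data):
        # text may arrive in several chunks, so append rather than assign
        if self._in_name_link:
            self.names[-1] += data


sample = (
    '<span class="infocard-data"><a href="/sprites/ivysaur" class="ent-name">Ivysaur</a></span>'
    '<a href="/hypothetical-nav-link">Home</a>'  # stands in for an unrelated page link
    '<span class="infocard-data"><a href="/sprites/venusaur" class="ent-name">Venusaur</a></span>'
)
parser = EntNameCollector()
parser.feed(sample)
print(parser.names)  # ['Ivysaur', 'Venusaur']
```

If you stay with BeautifulSoup, the same filter can be expressed as soup.find_all("a", class_="ent-name").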

```python
# loop over the pokemon names
for name in names:
    # initialize the parsed name as just the lowercase
    # version of the pokemon name
    parsedName = name.lower()

    # if the name contains an apostrophe (such as in
    # Farfetch'd), just simply remove it
    parsedName = parsedName.replace("'", "")

    # if the name contains a period followed by a space
    # (as is the case with Mr. Mime), then replace it
    # with a dash
    parsedName = parsedName.replace(". ", "-")

    # handle the case for Nidoran (female)
    if "\u2640" in name:
        parsedName = "nidoran-f"

    # and handle the case for Nidoran (male)
    elif "\u2642" in name:
        parsedName = "nidoran-m"
```

Now that we have a list of Pokemon names, we need to loop over them (Line 25) and format the name correctly so we can download the file. Ultimately, the formatted and sanitized name will be used in a URL to download the sprite.

Let’s examine each of these steps:

- Line 28: The first step to sanitizing the Pokemon name is to convert it to lowercase.

- Line 32: The first special case we need to handle is removing the apostrophe character. The apostrophe occurs in the name “Farfetch’d”.

- Line 37: Then, we need to replace the occurrence of a period followed by a space. This happens in the name “Mr. Mime”. Notice the “. ” in the middle of the name; it needs to be replaced with a dash.

- Lines 40-45: Now, we need to handle the Unicode characters that occur in the Nidoran family. The symbols for “male” and “female” are used in the actual game, but in order to download the sprites for the Nidorans, we need to manually construct the name used in the URL.
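Because these cleaning rules are pure string manipulation, they are easy to pull out into a function and check in isolation. The sketch below mirrors Lines 28-45 in a hypothetical sanitize_name() helper. One assumption goes beyond the original: it also strips the typographic apostrophe (U+2019), in case your copy of the HTML spells the name as “Farfetch’d” with a curly quote.

```python
def sanitize_name(name):
    """Turn a display name into the slug used in the sprite URL (mirrors the loop body)."""
    # start from the lowercase version of the name
    parsed = name.lower()
    # Farfetch'd -> farfetchd; the U+2019 variant is an added assumption
    parsed = parsed.replace("'", "").replace("\u2019", "")
    # Mr. Mime -> mr-mime
    parsed = parsed.replace(". ", "-")
    # the Nidorans carry the female/male signs in their names
    if "\u2640" in name:
        parsed = "nidoran-f"
    elif "\u2642" in name:
        parsed = "nidoran-m"
    return parsed


examples = ["Bulbasaur", "Farfetch'd", "Mr. Mime", "Nidoran\u2640", "Nidoran\u2642"]
print([sanitize_name(n) for n in examples])
# ['bulbasaur', 'farfetchd', 'mr-mime', 'nidoran-f', 'nidoran-m']
```

Keeping the rules in one function also means any new special case (another punctuation mark, say) only has to be added and tested in one place.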

Now, we can finally download the Pokemon sprite:

```python
    # construct the URL to download the sprite
    print("[x] downloading %s" % (name))
    url = "http://img.pokemondb.net/sprites/red-blue/normal/%s.png" % (parsedName)
    r = requests.get(url)

    # if the status code is not 200, ignore the sprite
    if r.status_code != 200:
        print("[x] error downloading %s" % (name))
        continue

    # write the sprite to file
    f = open("%s/%s.png" % (args["sprites"], name.lower()), "wb")
    f.write(r.content)
    f.close()
```

Line 49 constructs the URL of the Pokemon sprite. The base of the URL is http://img.pokemondb.net/sprites/red-blue/normal/, and we finish building the URL by appending the sanitized name of the Pokemon plus the “.png” file extension.
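Each name actually produces two strings: the sprite URL on Line 49 (built from the sanitized name) and the output path on Line 58 (built from the lowercased display name). Splitting them into two hypothetical helpers, sprite_url() and sprite_path(), makes that difference visible. The base URL is copied from the post; the site may no longer serve sprites at the same location.

```python
import os

# base URL as given in the post (may have changed since it was written)
BASE_URL = "http://img.pokemondb.net/sprites/red-blue/normal/"


def sprite_url(parsed_name):
    # append the sanitized name plus the ".png" extension
    return "%s%s.png" % (BASE_URL, parsed_name)


def sprite_path(sprites_dir, name):
    # the script names the file after the lowercased display name;
    # os.path.join picks the right separator for the current OS
    return os.path.join(sprites_dir, "%s.png" % name.lower())


print(sprite_url("mr-mime"))  # http://img.pokemondb.net/sprites/red-blue/normal/mr-mime.png
print(sprite_path("sprites", "Mr. Mime"))
```

Note that the output filename keeps the display name’s punctuation, so Mr. Mime is saved as “mr. mime.png”. Reusing the sanitized name for the file as well (mr-mime.png) would give filesystem-friendlier names; that is a design choice, not what the original script does.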

Downloading the actual image is handled on a single line (Line 50) using the requests package.

Lines 53-55 check the status code of the request. If the status code is not 200, indicating that the download was not successful, we print an error message and continue on to the next Pokemon name.

Finally, Lines 58-60 save the sprite to file.
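Lines 50-60 fetch the image, check the status code, and write the bytes to disk. The sketch below packages those same steps into a hypothetical download_sprite() function with three small hardening changes: a timeout on the request (requests.get accepts a timeout argument, and without one a stalled connection can hang the script), creation of the output directory if it is missing, and a with block so the file is always closed. The fetch parameter and the FakeResponse class are assumptions added purely so the logic can be exercised without touching the network.

```python
import os


def download_sprite(url, dest_path, fetch=None, timeout=10):
    """Download url to dest_path; return True on success, False otherwise.

    fetch is a hypothetical hook: any callable taking (url, timeout=...) and
    returning an object with .status_code and .content, like requests.get.
    """
    if fetch is None:
        # imported lazily so the helper can be tested without requests installed
        import requests
        fetch = requests.get

    # a timeout keeps one stalled connection from hanging the whole scrape
    r = fetch(url, timeout=timeout)

    # anything other than 200 OK is treated as a failed download
    if r.status_code != 200:
        return False

    # create the output directory if needed; "with" closes the file for us
    os.makedirs(os.path.dirname(dest_path) or ".", exist_ok=True)
    with open(dest_path, "wb") as f:
        f.write(r.content)
    return True


class FakeResponse:
    """Hypothetical stand-in for requests.Response, used only for offline testing."""

    def __init__(self, status_code, content=b""):
        self.status_code = status_code
        self.content = content
```

Network failures such as DNS errors or timeouts still raise exceptions from requests (subclasses of requests.exceptions.RequestException); wrapping the call in try/except would let the loop skip those Pokemon the same way it skips non-200 responses.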

Running Our Scrape

Now that our code is complete, we can execute our scrape by issuing the following command:

$ python parse_and_download.py --pokemon-list pokemon_list.html --sprites sprites

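This script assumes that the file containing the Pokemon HTML is stored in pokemon_list.html and that the downloaded Pokemon sprites will be stored in the sprites directory. Make sure the sprites directory exists before running the script (for example, with mkdir sprites), since open() will not create it for you.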

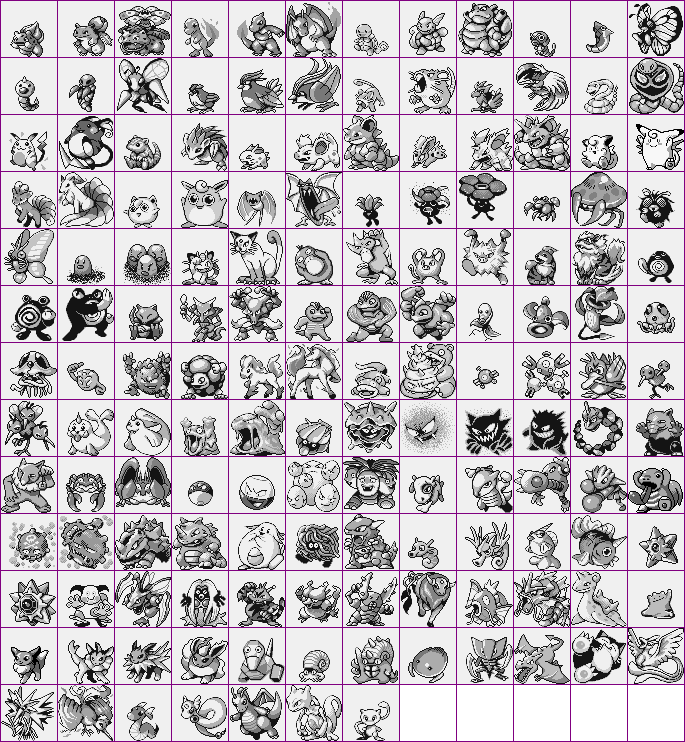

After the script has finished running, you should have a directory full of Pokemon sprites:

It’s that simple! With just a little bit of code and some knowledge of how to scrape images, we can build a Python script that scrapes Pokemon sprites in under 75 lines of code.
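Because the goal is the original 151 Pokemon, a quick sanity check on the sprites directory is to count the downloaded files and confirm that each one really is a PNG; a file saved from an unexpected HTML response would still carry a .png extension. The sketch below, using a hypothetical check_sprites() helper, reads the first 8 bytes of each file and compares them with the standard PNG signature.

```python
import os

# every PNG file starts with these 8 bytes
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def check_sprites(sprites_dir):
    """Return (valid, invalid) lists of .png filenames, judged by their PNG signature."""
    valid, invalid = [], []
    for fname in sorted(os.listdir(sprites_dir)):
        # ignore anything that is not meant to be a sprite
        if not fname.endswith(".png"):
            continue
        with open(os.path.join(sprites_dir, fname), "rb") as f:
            header = f.read(8)
        (valid if header == PNG_SIGNATURE else invalid).append(fname)
    return valid, invalid
```

For example, valid, invalid = check_sprites("sprites") followed by print(len(valid), len(invalid)). Expect fewer than 151 valid files if some downloads failed, since the script skips those names rather than retrying.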

Note: After I wrote this blog post, thegatekeeper07 suggested using the Veekun Pokemon Database. This database lets you skip the scraping step entirely, since you can download a tarball of the Pokemon sprites directly. It’s a great option if you’d rather not scrape; however, you might have to modify my source code a little bit to use the Veekun database. Just something to keep in mind!


Summary

This post served as a Python web scraping tutorial: we downloaded sprite images for the original 151 Pokemon from the Red, Blue, and Green versions.

We made use of the BeautifulSoup and requests packages to download our Pokemon sprites. These packages make scraping easy and keep headaches to a minimum.

Now that we have our database of Pokemon, we can index them and characterize their shape using shape descriptors. We’ll cover that in the next blog post.


Download the Source Code and FREE 17-page Resource Guide

Enter your email address below to get a .zip of the code and a FREE 17-page Resource Guide on Computer Vision, OpenCV, and Deep Learning. Inside you'll find my hand-picked tutorials, books, courses, and libraries to help you master CV and DL!