Last week’s blog post taught us how to write videos to file using OpenCV and Python. This is a great skill to have, but it also raises the question:

How do I write video clips containing interesting events to file rather than the entire video?

In this case, the overall goal is to construct a video synopsis, distilling the key, salient, and interesting parts of the video stream into a series of short video files.

What actually defines a “key or interesting event” is entirely up to you and your application. Potential examples of key events can include:

- Motion being detected in a restricted access zone.

- An intruder entering your house or apartment.

- A car running a stop sign on a busy street by your home.

In each of these cases, you’re not interested in the entire video capture — instead, you only want the video clip that contains the action!

To see how capturing key event video clips with OpenCV is done (and build your own video synopsis), just keep reading.

Saving key event video clips with OpenCV

The purpose of this blog post is to demonstrate how to write short video clips to file when a particular action takes place. We’ll be using our knowledge gained from last week’s blog post on writing video to file with OpenCV to implement this functionality.

As I mentioned at the top of this post, defining “key” and “interesting” events in a video stream is entirely dependent on your application and the overall goals of what you’re trying to build.

You might be interested in detecting motion in a room, monitoring your house, or creating a system to observe traffic and store clips of motor vehicle drivers breaking the law.

As a simple example of both:

- Defining a key event.

- Writing the video clip to file containing the event.

We’ll be processing a video stream and looking for occurrences of a green ball.

If this green ball appears in our video stream, we’ll open up a new video file (named after the timestamp of occurrence), write the clip to file, and then stop the writing process once the ball disappears from our view.

Furthermore, our implementation will have a number of desirable properties, including:

- Writing frames to our video file a few seconds before the action takes place.

- Writing frames to file a few seconds after the action finishes — in both cases, our goal is to not only capture the entire event, but also the context of the event as well.

- Utilizing threads to ensure our main program is not slowed down when performing I/O on both the input stream and the output video clip file.

- Leveraging built-in Python data structures such as deque and Queue so we need not rely on external libraries (other than OpenCV and imutils, of course).
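To see why a deque suits the “few seconds before the action” requirement, here is a small sketch in which integers stand in for video frames (an assumption for illustration; the real buffer holds image arrays from the camera). With maxlen set, appendleft silently discards the oldest entry from the right end once the buffer is full, so memory stays bounded while the most recent frames are always on hand:

```python
from collections import deque

# A bounded buffer: once it holds maxlen items, each new appendleft()
# discards the item at the opposite (right) end, i.e. the oldest frame.
frames = deque(maxlen=3)

for frame_id in range(5):  # integers stand in for video frames
    frames.appendleft(frame_id)

# Newest frame is at index 0, oldest surviving frame at index -1.
print(list(frames))  # [4, 3, 2]
```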

Project structure

Before we get started implementing our key event video writer, let’s look at the project structure:

```
|--- output
|--- pyimagesearch
|    |--- __init__.py
|    |--- keyclipwriter.py
|--- save_key_events.py
```

Inside the pyimagesearch module, we’ll define a class named KeyClipWriter inside the keyclipwriter.py file. This class will handle accepting frames from an input video stream and writing them to file in a safe, efficient, and threaded manner.

The driver script, save_key_events.py, will define the criteria of what an “interesting event” is (i.e., the green ball entering the view of the camera), followed by passing these frames on to the KeyClipWriter, which will then create our video synopsis.

A quick note on Python + OpenCV versions

This blog post assumes you are using Python 3+ and OpenCV 3. As I mentioned in last week’s post, I wasn’t able to get the cv2.VideoWriter function to work on my OpenCV 2.4 installation, so after a few hours of hacking around with no luck, I ended up abandoning OpenCV 2.4 for this project and sticking with OpenCV 3.

The code in this lesson is technically compatible with Python 2.7 (again, provided you are using Python 2.7 with OpenCV 3 bindings), but you’ll need to change a few import statements (I’ll point these out along the way).

Writing key/interesting video clips to file with OpenCV

Let’s go ahead and get started reviewing our KeyClipWriter class:

```python
# import the necessary packages
from collections import deque
from threading import Thread
from queue import Queue
import time
import cv2

class KeyClipWriter:
    def __init__(self, bufSize=64, timeout=1.0):
        # store the maximum buffer size of frames to be kept
        # in memory along with the sleep timeout during threading
        self.bufSize = bufSize
        self.timeout = timeout

        # initialize the buffer of frames, queue of frames that
        # need to be written to file, video writer, writer thread,
        # and boolean indicating whether recording has started or not
        self.frames = deque(maxlen=bufSize)
        self.Q = None
        self.writer = None
        self.thread = None
        self.recording = False
```

We start off by importing our required Python packages on Lines 2-6. This tutorial assumes you are using Python 3, so if you’re using Python 2.7, you’ll need to change Line 4 from “from queue import Queue” to “from Queue import Queue” (the module is named Queue in Python 2.7).
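If you would rather keep a single file that runs under both interpreters, a common pattern (not part of the original code) is to try the Python 3 module name first and fall back to the Python 2.7 name:

```python
# Try the Python 3 module name first, then fall back to the Python 2.7 name.
try:
    from queue import Queue  # Python 3
except ImportError:
    from Queue import Queue  # Python 2.7

q = Queue()
q.put("frame")
print(q.get())  # frame
```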

Line 9 defines the constructor to our KeyClipWriter, which accepts two optional parameters:

- bufSize: The maximum number of frames to keep cached in an in-memory buffer.

- timeout: A float (1.0 by default) representing the number of seconds to sleep for when (1) writing video clips to file and (2) there are no frames ready to be written.

We then initialize five important variables on Lines 18-22:

- frames: A buffer used to store a maximum of bufSize frames that have been most recently read from the video stream.

- Q: A “first in, first out” (FIFO) Python Queue data structure used to hold frames that are waiting to be written to the video file.

- writer: An instance of the cv2.VideoWriter class used to actually write frames to the output video file.

- thread: A Python Thread instance that we’ll use when writing videos to file (to avoid costly I/O latency delays).

- recording: Boolean value indicating whether or not we are in “recording mode”.

Next up, let’s review the update method:

```python
    def update(self, frame):
        # update the frames buffer
        self.frames.appendleft(frame)

        # if we are recording, update the queue as well
        if self.recording:
            self.Q.put(frame)
```

The update function requires a single parameter, the frame read from our video stream. We take this frame and store it in our frames buffer (Line 26). And if we are already in recording mode, we’ll store the frame in the Queue as well so it can be flushed to video file (Lines 29 and 30).

In order to kick off an actual video clip recording, we need a start method:

```python
    def start(self, outputPath, fourcc, fps):
        # indicate that we are recording, start the video writer,
        # and initialize the queue of frames that need to be written
        # to the video file
        self.recording = True
        self.writer = cv2.VideoWriter(outputPath, fourcc, fps,
            (self.frames[0].shape[1], self.frames[0].shape[0]), True)
        self.Q = Queue()

        # loop over the frames in the deque structure and add them
        # to the queue
        for i in range(len(self.frames), 0, -1):
            self.Q.put(self.frames[i - 1])

        # start a thread to write frames to the video file
        self.thread = Thread(target=self.write, args=())
        self.thread.daemon = True
        self.thread.start()
```

First, we update our recording boolean to indicate that we are in “recording mode”. Then, we initialize the cv2.VideoWriter using the outputPath, fourcc, and fps supplied to the start method, along with the frame spatial dimensions (i.e., width and height). For a complete review of the cv2.VideoWriter parameters, please refer to this blog post.
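Two details hide in that cv2.VideoWriter call. First, NumPy stores a frame’s shape as (height, width, channels) while the writer expects its size as (width, height), hence shape[1] before shape[0]. Second, start reads self.frames[0], so it assumes update has already been called at least once. In the driver script, kcw.start runs before kcw.update within the same loop iteration, so a ball visible in the very first frame hits an empty deque and raises IndexError. The writer_frame_size helper below is hypothetical (it is not part of KeyClipWriter), and SimpleNamespace stands in for a real NumPy frame; it simply makes both points explicit:

```python
from collections import deque
from types import SimpleNamespace

def writer_frame_size(frames):
    """Return the (width, height) tuple expected by cv2.VideoWriter.

    `frames` is the newest-first deque used by KeyClipWriter. Image shapes
    are (rows, cols, channels), i.e. (height, width, channels), so the two
    leading values must be swapped.
    """
    if not frames:
        # start() indexes self.frames[0]; an empty deque would raise a
        # bare IndexError, so fail with a clearer message instead.
        raise RuntimeError("frame buffer is empty; call update() before start()")
    height, width = frames[0].shape[:2]
    return (width, height)

# SimpleNamespace stands in for a 360x480 BGR frame (480 wide, 360 tall).
buf = deque([SimpleNamespace(shape=(360, 480, 3))], maxlen=32)
print(writer_frame_size(buf))  # (480, 360)
```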

Line 39 initializes our Queue used to store the frames ready to be written to file. We then loop over all frames in our frames buffer and add them to the queue.
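Because update pushes frames with appendleft, index 0 of the deque holds the newest frame. The reverse range loop in start therefore enqueues the buffered frames oldest-first, so the clip plays back in chronological order. A quick check, with strings standing in for frames:

```python
from collections import deque

# update() uses appendleft(), so the deque is ordered newest-first.
frames = deque(maxlen=4)
for frame_id in ["f1", "f2", "f3", "f4"]:
    frames.appendleft(frame_id)

# Same loop as start(): walk indices from len-1 down to 0, which visits
# the oldest frame first and the newest frame last.
replay = [frames[i - 1] for i in range(len(frames), 0, -1)]
print(replay)  # ['f1', 'f2', 'f3', 'f4']

# reversed() produces the same chronological order.
assert list(reversed(frames)) == replay
```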

Finally, we spawn a separate thread to handle writing frames to video — this way we don’t slow down our main video processing pipeline by waiting for I/O operations to complete.

As noted above, the start method creates a new thread, calling the write method used to write frames inside the Q to file. Let’s define this write method:

```python
    def write(self):
        # keep looping
        while True:
            # if we are done recording, exit the thread
            if not self.recording:
                return

            # check to see if there are entries in the queue
            if not self.Q.empty():
                # grab the next frame in the queue and write it
                # to the video file
                frame = self.Q.get()
                self.writer.write(frame)

            # otherwise, the queue is empty, so sleep for a bit
            # so we don't waste CPU cycles
            else:
                time.sleep(self.timeout)
```

Line 53 starts an infinite loop that will continue polling for new frames and writing them to file until our video recording has finished.

Lines 55 and 56 make a check to see if the recording should be stopped, and if so, we return from the thread.

Otherwise, if the Q is not empty, we grab the next frame and write it to the video file (Lines 59-63).

If there are no frames in the Q, we sleep for a bit so we don’t needlessly waste CPU cycles spinning (Lines 67 and 68). This is especially important when using the Queue data structure, which is thread-safe, implying that we must acquire a lock/semaphore prior to updating the internal buffer. If we don’t call time.sleep when the buffer is empty, then the write and update methods will constantly be fighting for the lock. Instead, it’s best to let the writer sleep for a bit until there is a backlog of frames in the queue that need to be written to file.
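Sleep-polling works, but a frame that arrives just after the empty check can wait up to timeout seconds before it is written. An alternative design, and not the one KeyClipWriter uses, is to let Queue.get() block and to stop the thread with a sentinel value. In this sketch, writer_loop and _STOP are hypothetical names, and a plain list’s append stands in for cv2.VideoWriter.write:

```python
import queue
import threading

_STOP = object()  # unique sentinel that can never be a real frame

def writer_loop(q, sink):
    """Block on q.get() and write frames until the sentinel arrives."""
    while True:
        frame = q.get()  # blocks without spinning; no sleep() needed
        if frame is _STOP:
            return
        sink.append(frame)  # stand-in for writer.write(frame)

q = queue.Queue()
written = []
t = threading.Thread(target=writer_loop, args=(q, written), daemon=True)
t.start()

for frame_id in range(5):
    q.put(frame_id)

# FIFO order guarantees every frame queued before the sentinel is written
# before the thread exits, so no separate flush step is needed here.
q.put(_STOP)
t.join()
print(written)  # [0, 1, 2, 3, 4]
```

With this variant, a finish method would put the sentinel, join the thread, and then release the writer.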

We’ll also define a flush method which simply takes all frames left in the Q and dumps them to file:

```python
    def flush(self):
        # empty the queue by flushing all remaining frames to file
        while not self.Q.empty():
            frame = self.Q.get()
            self.writer.write(frame)
```

A method like this is used when a video recording has finished and we need to immediately flush all frames to file.

Finally, we define the finish method below:

```python
    def finish(self):
        # indicate that we are done recording, join the thread,
        # flush all remaining frames in the queue to file, and
        # release the writer pointer
        self.recording = False
        self.thread.join()
        self.flush()
        self.writer.release()
```

This method indicates that the recording has been completed, joins the writer thread with the main script, flushes the remaining frames in the Q to file, and finally releases the cv2.VideoWriter pointer.

Now that we have defined the KeyClipWriter class, we can move on to the driver script used to implement the “key/interesting event” detection.

Saving key events with OpenCV

In order to keep this blog post simple and hands-on, we’ll define our “key event” to be when a green ball enters our video stream.

Once we see the green ball, we will call KeyClipWriter to write all frames that contain the green ball to file. Essentially, this will give us a set of short video clips that neatly summarize the events of the entire video stream; in short, a video synopsis.

Of course, you can use this code as a boilerplate/starting point for defining your own actions; we’ll simply use the “green ball” event since we have covered it multiple times before on the PyImageSearch blog, including tracking object movement and ball tracking.

Before you proceed with the rest of this tutorial, make sure you have the imutils package installed on your system:

$ pip install imutils

This will ensure that you can use the VideoStream class, which provides unified access to both built-in/USB webcams and the Raspberry Pi camera module.

Let’s go ahead and get started. Open up the save_key_events.py file and insert the following code:

```python
# import the necessary packages
from pyimagesearch.keyclipwriter import KeyClipWriter
from imutils.video import VideoStream
import argparse
import datetime
import imutils
import time
import cv2

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-o", "--output", required=True,
    help="path to output directory")
ap.add_argument("-p", "--picamera", type=int, default=-1,
    help="whether or not the Raspberry Pi camera should be used")
ap.add_argument("-f", "--fps", type=int, default=20,
    help="FPS of output video")
ap.add_argument("-c", "--codec", type=str, default="MJPG",
    help="codec of output video")
ap.add_argument("-b", "--buffer-size", type=int, default=32,
    help="buffer size of video clip writer")
args = vars(ap.parse_args())
```

Lines 2-8 import our necessary Python packages while Lines 11-22 parse our command line arguments. The set of command line arguments are detailed below:

- --output: This is the path to the output directory where we will store the output video clips.

- --picamera: If you want to use your Raspberry Pi camera (rather than a built-in/USB webcam), then supply a value of --picamera 1. You can read more about accessing both built-in/USB webcams and the Raspberry Pi camera module (without changing a single line of code) in this post.

- --fps: This switch controls the desired FPS of your output video. This value should be similar to the number of frames per second your image processing pipeline can process.

- --codec: The FourCC codec of the output video clips. Please see the previous post for more information.

- --buffer-size: The size of the in-memory buffer used to store the most recently polled frames from the camera sensor. A larger --buffer-size will allow for more context before and after the “key event” to be included in the output video clip, while a smaller --buffer-size will store fewer frames before and after the “key event”.
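Since --fps should roughly match what your loop actually achieves, it can help to measure that rate before choosing a value. This is a minimal sketch using time.perf_counter; measure_fps and playback_speedup are hypothetical helpers, and the process argument is a placeholder for your own per-frame work. If clips are written at a higher FPS than frames are processed, playback looks sped up by roughly the ratio of the two rates:

```python
import time

def measure_fps(process, num_frames=50):
    """Time num_frames calls of process() and return frames per second."""
    start = time.perf_counter()
    for _ in range(num_frames):
        process()
    elapsed = time.perf_counter() - start
    return num_frames / elapsed

def playback_speedup(write_fps, processed_fps):
    """How much faster than real time the written clip will appear."""
    return write_fps / processed_fps

# Simulate a pipeline that needs at least 10 ms per frame.
fps = measure_fps(lambda: time.sleep(0.01), num_frames=20)
print("measured ~{:.1f} FPS".format(fps))

# Writing at 32 FPS while only processing 10 FPS plays back 3.2x too fast.
print(playback_speedup(32, 10))  # 3.2
```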

Let’s perform some initialization:

```python
# initialize the video stream and allow the camera sensor to
# warmup
print("[INFO] warming up camera...")
vs = VideoStream(usePiCamera=args["picamera"] > 0).start()
time.sleep(2.0)

# define the lower and upper boundaries of the "green" ball in
# the HSV color space
greenLower = (29, 86, 6)
greenUpper = (64, 255, 255)

# initialize key clip writer and the consecutive number of
# frames that have *not* contained any action
kcw = KeyClipWriter(bufSize=args["buffer_size"])
consecFrames = 0
```

Lines 26-28 initialize our VideoStream and allow the camera sensor to warm up.

From there, Lines 32 and 33 define the lower and upper color threshold boundaries for the green ball in the HSV color space. For more information on how we defined these color threshold values, please see this post.

Line 37 instantiates our KeyClipWriter using our supplied --buffer-size, along with initializing an integer used to count the number of consecutive frames that have not contained any interesting events.
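Note that the switch is spelled --buffer-size on the command line but read back as args["buffer_size"] in the code. argparse derives the destination name from the long option and replaces dashes with underscores, as this small check shows (the argument list is passed explicitly instead of being read from sys.argv, so the snippet is self-contained):

```python
import argparse

ap = argparse.ArgumentParser()
ap.add_argument("-b", "--buffer-size", type=int, default=32,
    help="buffer size of video clip writer")

# Parse an explicit list instead of sys.argv so the example is self-contained.
args = vars(ap.parse_args(["--buffer-size", "64"]))
print(args)  # {'buffer_size': 64}
```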

We are now ready to start processing frames from our video stream:

```python
# keep looping
while True:
    # grab the current frame, resize it, and initialize a
    # boolean used to indicate if the consecutive frames
    # counter should be updated
    frame = vs.read()
    frame = imutils.resize(frame, width=600)
    updateConsecFrames = True

    # blur the frame and convert it to the HSV color space
    blurred = cv2.GaussianBlur(frame, (11, 11), 0)
    hsv = cv2.cvtColor(blurred, cv2.COLOR_BGR2HSV)

    # construct a mask for the color "green", then perform
    # a series of dilations and erosions to remove any small
    # blobs left in the mask
    mask = cv2.inRange(hsv, greenLower, greenUpper)
    mask = cv2.erode(mask, None, iterations=2)
    mask = cv2.dilate(mask, None, iterations=2)

    # find contours in the mask
    cnts = cv2.findContours(mask.copy(), cv2.RETR_EXTERNAL,
        cv2.CHAIN_APPROX_SIMPLE)
    cnts = imutils.grab_contours(cnts)
```

On Line 41 we start looping over frames from our video stream. Lines 45 and 46 read the next frame from the video stream and then resize it to have a width of 600 pixels.

Further pre-processing is done on Lines 50 and 51 by blurring the image slightly and then converting the image from the BGR color space (OpenCV’s default channel ordering) to the HSV color space (so we can apply our color thresholding).

The actual color thresholding is performed on Line 56 using the cv2.inRange function. This function keeps all pixels p for which greenLower <= p <= greenUpper holds in every channel. We then perform a series of erosions and dilations to remove any small blobs left in the mask.
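Two conventions are easy to trip over here. OpenCV’s 8-bit HSV stores hue as degrees divided by 2 (0 to 179) and saturation and value on a 0 to 255 scale, and cv2.inRange keeps a pixel only when every channel lies within its bounds, inclusive at both ends. The pure-Python sketch below approximates both conventions for a single pixel using the standard colorsys module; bgr_to_opencv_hsv and in_range are hypothetical helpers for illustration, not replacements for the vectorized OpenCV calls:

```python
import colorsys

greenLower = (29, 86, 6)
greenUpper = (64, 255, 255)

def bgr_to_opencv_hsv(bgr):
    """Convert one 8-bit BGR pixel to OpenCV's 8-bit HSV scale."""
    b, g, r = (c / 255.0 for c in bgr)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # each component in [0, 1]
    # OpenCV scales hue to [0, 180) and saturation/value to [0, 255].
    return (round(h * 180), round(s * 255), round(v * 255))

def in_range(pixel, lower, upper):
    """Per-channel, inclusive bound check, like cv2.inRange for one pixel."""
    return all(lo <= c <= hi for c, lo, hi in zip(pixel, lower, upper))

green = bgr_to_opencv_hsv((0, 255, 0))  # pure green in BGR order
red = bgr_to_opencv_hsv((0, 0, 255))    # pure red in BGR order
print(green, in_range(green, greenLower, greenUpper))  # (60, 255, 255) True
print(red, in_range(red, greenLower, greenUpper))      # (0, 255, 255) False
```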

Finally, Lines 61-63 find contours in the thresholded image.

If you are confused about any step of this processing pipeline, I would suggest going back to our previous posts on ball tracking and object movement to further familiarize yourself with the topic.

We are now ready to check and see if the green ball was found in our image:

```python
    # only proceed if at least one contour was found
    if len(cnts) > 0:
        # find the largest contour in the mask, then use it
        # to compute the minimum enclosing circle
        c = max(cnts, key=cv2.contourArea)
        ((x, y), radius) = cv2.minEnclosingCircle(c)
        updateConsecFrames = radius <= 10

        # only proceed if the radius meets a minimum size
        if radius > 10:
            # reset the number of consecutive frames with
            # *no* action to zero and draw the circle
            # surrounding the object
            consecFrames = 0
            cv2.circle(frame, (int(x), int(y)), int(radius),
                (0, 0, 255), 2)

            # if we are not already recording, start recording
            if not kcw.recording:
                timestamp = datetime.datetime.now()
                p = "{}/{}.avi".format(args["output"],
                    timestamp.strftime("%Y%m%d-%H%M%S"))
                kcw.start(p, cv2.VideoWriter_fourcc(*args["codec"]),
                    args["fps"])
```

Line 66 makes a check to ensure that at least one contour was found, and if so, Lines 69 and 70 find the largest contour in the mask (according to the area) and use this contour to compute the minimum enclosing circle.

If the radius of the circle meets a minimum size of 10 pixels (Line 74), then we will assume that we have found the green ball. Lines 78-80 reset the number of consecFrames that do not contain any interesting events (since an interesting event is “currently happening”) and draw a circle highlighting our ball in the frame.

Finally, we check to see if we are currently recording a video clip (Line 83). If not, we generate an output filename for the video clip based on the current timestamp and call the start method of the KeyClipWriter.

Otherwise, we’ll assume no key/interesting event has taken place:

```python
    # otherwise, no action has taken place in this frame, so
    # increment the number of consecutive frames that contain
    # no action
    if updateConsecFrames:
        consecFrames += 1

    # update the key frame clip buffer
    kcw.update(frame)

    # if we are recording and reached a threshold on consecutive
    # number of frames with no action, stop recording the clip
    if kcw.recording and consecFrames == args["buffer_size"]:
        kcw.finish()

    # show the frame
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break
```

If no interesting event has happened, we increment consecFrames. Every frame, whether or not it contains the ball, is then passed to our buffer via kcw.update (Line 97).

Line 101 makes an important check — if we are recording and have reached a sufficient number of consecutive frames with no key event, then we should stop the recording.
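The interplay between consecFrames, kcw.recording, and --buffer-size is easiest to see with OpenCV stripped out. The simulate_clips function below is a hypothetical simplification in which a list of booleans stands in for “ball detected in this frame”. It returns the first and last live-loop frame index of each clip; in the real script, each clip additionally begins with up to --buffer-size earlier frames replayed from the deque:

```python
def simulate_clips(detections, buffer_size):
    """Return (first, last) frame indices of each clip the driver loop records.

    detections[i] is True when the green ball is found in frame i. This mirrors
    the consecFrames / kcw.recording logic but leaves out OpenCV entirely.
    """
    recording = False
    consec_frames = 0
    clips = []
    start = None

    for i, detected in enumerate(detections):
        if detected:
            # an event resets the "no action" counter and starts a clip
            consec_frames = 0
            if not recording:
                recording = True
                start = i
        else:
            consec_frames += 1

        # kcw.update(frame) runs before this check, so frame i is in the clip
        if recording and consec_frames == buffer_size:
            recording = False
            clips.append((start, i))

    # the cleanup block after the loop finishes any clip still in progress
    if recording:
        clips.append((start, len(detections) - 1))
    return clips

detections = [False, False, True, True, False, False, False, False, True, False, False]
print(simulate_clips(detections, buffer_size=3))  # [(2, 6), (8, 10)]
```

The first clip closes once three consecutive frames pass without the ball; the second is still open when the stream ends and is closed by the final cleanup.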

Finally, Lines 105-110 display the output frame to our screen and wait for a keypress.

Our final block of code ensures the video has been successfully closed and then performs a bit of cleanup:

```python
# if we are in the middle of recording a clip, wrap it up
if kcw.recording:
    kcw.finish()

# do a bit of cleanup
cv2.destroyAllWindows()
vs.stop()
```

Video synopsis results

To generate video clips for key events (i.e., the green ball appearing on our video stream), just execute the following command:

$ python save_key_events.py --output output

I’ve included the full 1m 46s video (without extracting salient clips) below:

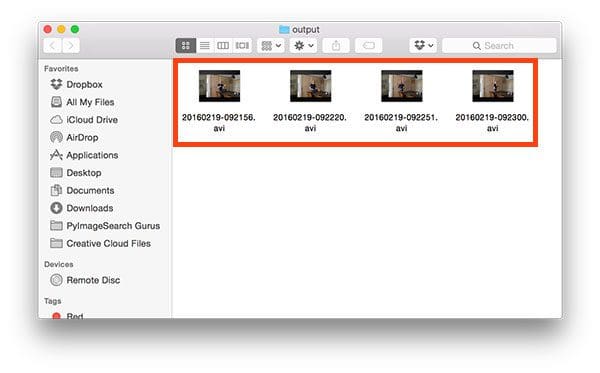

After running the save_key_events.py script, I now have 4 output videos, one for each time the green ball was present in my video stream.
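Each of those files is named after the moment its clip started, using the %Y%m%d-%H%M%S pattern from the driver script. Because every field is zero-padded, the names also sort chronologically as plain text, which is handy if you later want to process the clips in order; note that two clips starting within the same second would receive the same name. The sketch below uses a fixed, made-up timestamp, and the clip_path helper (hypothetical) uses os.path.join as a portable alternative to the script’s "{}/{}.avi" formatting:

```python
import datetime
import os

def clip_path(output_dir, timestamp):
    """Build an .avi path named after the clip's start time."""
    return os.path.join(output_dir, timestamp.strftime("%Y%m%d-%H%M%S") + ".avi")

t1 = datetime.datetime(2016, 2, 29, 9, 5, 7)
t2 = datetime.datetime(2016, 2, 29, 14, 30, 0)
print(clip_path("output", t1))  # output/20160229-090507.avi on POSIX systems

# Zero-padded fields mean lexicographic order matches time order.
names = sorted([clip_path("output", t2), clip_path("output", t1)])
assert names[0].endswith("20160229-090507.avi")
```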

The key event video clips are displayed below to demonstrate that our script is working properly, accurately extracting our “interesting events”, and essentially building a series of video clips functioning as a video synopsis:

Video clip #1:

Video clip #2:

Video clip #3:

Video clip #4:


Summary

In this blog post, we learned how to save key event video clips to file using OpenCV and Python.

Exactly what defines a “key or interesting event” is entirely dependent on your application and the goals of your overall project. Examples of key events can include:

- Monitoring your front door for motion detection (i.e., someone entering your house).

- Recognizing the face of an intruder as they enter your house.

- Reporting unsafe driving outside your home to the authorities.

Again, the range of what can constitute a “key event” is nearly endless. However, regardless of how you define an interesting event, you can still use the Python code detailed in this post to help save these interesting events to file as a shortened video clip.

Using this methodology, you can condense hours of video stream footage into seconds of interesting events, effectively yielding a video synopsis — all generated using Python, computer vision, and image processing techniques.

Anyway, I hope you enjoyed this blog post!


Dude I hope you know how cool you are. As an enthusiastic reader of programming blogs, I’m always excited when you post something new. The format of this blog is something that everybody who writes programming tutorials should strive for. Your books are pretty cool, too.

I know this is kinda spammy but I really wanted to tell you this.

It’s by no means spammy at all Alexander. After a rough day, your comment certainly put a smile on my face 🙂

Thank you for giving me the meat! Every weeks your share always make my eyes light up with delight. : )

Thanks Daniel, you certainly put a smile on my face 🙂

Cheers Adrian! As an avid reader of yours, this post is awesome! Thank you for your enthusiasm and hardwork as always! 😀

Thanks Kenny — I’m glad you enjoyed the blog post! 🙂

Hey there, you’re doing an awesome job and I’ve been reading your blog for some time now. Keep up the good stuff, I can already see your next project combining this and virtual reality!

Another great blog Adrian. You continue to bring it sir, thank you.

Johnny

Thanks Johnny!

Hey Adrian. As usual, nice post.

I am using Python 2.7 and there is also other thing needed to be changed to make this code works (If I ever missed the fact that you have wrote this in your post, pardon me, because I didn’t really read in details this post yet). Also, this is just a quick corrections I have tried. Maybe it is not perfect or not the best way etc. (still a novice here).

First way of correcting it:

In line 39 of the keyclipwriter.py file, the code

“self.Q = Queue()”

need to be changed to

“self.Q = Queue.Queue”

Second way of correcting it:

Replace the line

“from queue import Queue”

to

“from Queue import Queue” instead of “import Queue”

I think I’ve made a mistake. Instead of changing to

“self.Q = Queue.Queue”,

change to

“self.Q = Queue.Queue()”

Adrian, I realized that in your save_key_events.py file, in line 20 (referring to the above), the key to access the value of the buffer size if it is given by the user would be

“buffer-size”.

But in line 101 (again, referring to the above) of the same file, the buffer size value (if it is given by the user) is being accessed with key

“buffer_size”.

A mistake perhaps? Or I am not in the same page here?

The argparse library automatically converts dashes to underscores. Using the key buffer_size is correct.

Thanks for the reply. A new knowledge for me =/

I admit, it’s a little bit of a nuance and something that has to be learned with experience 🙂

I’ve spent the last 3 days embedded in this article and others on your blog. Thanks very much for your work. It is great.

I’ve implemented your program here and I’ve noticed that my recorded video is like 2-3x faster than the reality or what I see on the original playback. It’s like watching something in fast forward. Is it possible this is a frame dropping issue? It doesn’t look like the library is designed to just save key frames, so I can’t think of what else this could be. Have you ever seen what I’m talking about or have other people reported it? I’m doing this on an rpi 3 having followed your rpi 3 opencv 3 tutorial.

Thanks,

Mike

Hey Mike, the likely issue is that you have set a high FPS in the cv2.VideoWriter, but in reality, your video processing pipeline is not capable of processing that many frames per second. For example, if I want to write 32 FPS to file, but my processing loop can only process 10 frames per second, then my output video will look like it’s in “fast forward” mode.

Thanks for the reply Adrian. This was exactly what was happening.

To improve…

I was able to increase the FPS a little bit by allocating more memory to the GPU by changing the /boot/config.txt gpu_mem to 512.

I also got a big performance gain by making the image width 300px vs 600px (although I don’t want to do this).

The program I’m using is a mixture of this script and your motion capture script. Basically motion capture is the trigger, but it’s using the rolling buffer to save the full event. I’m not sure exactly, but I’d guess I’m getting < 10 FPS. I've removed all of the green ball detection and any unneeded lines in the main processing loop.

Using your FPS testing script, I get 30 FPS normal and 100+ FPS when threaded.

I've been trying to optimize and figure out how to get the FPS up on the motion capture script, but I'm thinking maybe it is just not possible on the Pi 3, using cv2, to get past 10 FPS? Do you have a benchmark for this? Or any suggestions. I'm trying to do a simple motion capture app, but need a high FPS, I'm mainly trying to figure out what the Pi's limits are, so if I'm hitting them, I can just accept that.

Thanks for all the great work you do on this blog! I've been glued to it for a few weeks now.

The FPS testing script is mainly used to test out how many frames per second you can process, which essentially measures the throughput of your pipeline. It is not meant to be a true measure of the physical FPS of the device.

10 FPS sounds a bit low, but you could certainly be hitting a ceiling depending on how many images are going into the average computation. One suggestion I would have is to re-implement your code in C/C++. This should give you a noticeable performance increase as well.

Hey man!

First of all, Thanks for this great tutorial and all others too.

I am a novice and I am facing a few difficulties. I am getting this error:-

“from pyimagesearch.keyclipwriter import KeyClipWriter

ImportError: No module named pyimagesearch.keyclipwriter”

I understood your KeyClipWriter code but I am not getting how to incorporate into the main code. Also, I am not able to find “pyimagesearch” using pip. Can you tell me what I need to do with the KeyClipWriter code?

Many thanks in advance!

Hey Kartik — it sounds like you’re copying and pasting the code as you work through the tutorial. That’s a great way to learn; however, I would wait until you have a bit more experience before doing that. Please use the “Downloads” section of this tutorial to download the code. This will give you the proper directory structure for the project and allow you to execute the code.

Hi Adrian, great tutorial as always.

But, can I change the extension of the output video to .mp4 or .webm? Because I need to play it on the web. Since w3schools.com says “AVI (Audio Video Interleave). Developed by Microsoft. Commonly used in video cameras and TV hardware. Plays well on Windows computers, but not in web browsers.” (Source: https://www.w3schools.com/html/html_media.asp)

I cover how to save video files to disk in various formats in this post. However, keep in mind that saving videos to disk can be a bit of a pain and you might need to install different codecs and re-install OpenCV. In many ways, it’s a trial and error process.

Love the blog! I am getting an error that I can’t figure out when I start the call kcw.start(__):

```
[DEBUG] Filename: /home/pi/raspsecurity/videos/20171224-204800.avi
Traceback (most recent call last):
  File "pi_surveillance.py", line 133, in <module>
    kcw.start(p, cv2.VideoWriter_fourcc(*'MJPG'), 20)
  File "/home/pi/raspsecurity/pyimagesearch/keyclipwriter.py", line 40, in start
    (self.frames[0].shape[1], self.frames[0].shape[0]), True)
IndexError: deque index out of range
```

I tried changing all off the variables passed to start() to constants and still get the error, any guidance how what is going wrong?

It looks like there might be action starting from the very first frame when the deque hasn’t had a chance to fill up. This code assumes there are already frames in the deque. I’m pretty sure this is the root cause of the error but make sure you debug this as well.

Hello, I am also getting the same error. If you solved it can you share your solution?

I got the same error and I replaced the line (self.frames[0].shape[1], self.frames[0].shape[0]) to the desired frame size. I made (480,360) and after that it was working. If you want you can give the size of input video frame, what you have to remove is shape.

Instead of detecting green ball i.e green lower and green upper

I want to save video locally when there is motion captured in camera.

So how can I modify contour part.

I have seen your tutorial of Room status occupied and unoccupied. And also this tutorial of saving .avi videos locally when green ball is detected but I am getting errors when I try to combine can you please help how it can be done or can you please upload a tutorial regarding that. As it will be really helpful for others as well.

I am using raspberry pi camera

It sounds like you’ve already seen my motion detection tutorial using thresholding + contours. As you noted, you will need to combine the two to build the solution. I’m not sure what errors you may be getting, could you be more specific?

Can you please share a tutorial combining the two?

It will be really helpful

instead of detecting green ball it is needed to detect the motion in the frame and capture the video locally on raspberry pi either in avi or mp4 format till the motion in going on in the frame.

for example:

Just as like when ever there is room status: occupied save video locally .

Hey Kartik — I don’t have any plans on combining the two but if I do, I will let you know. I’m happy to help but I cannot write the code for you. If you’re getting stuck, no worries! That happens if you’re new to OpenCV or image processing. Instead of getting discouraged I would suggest working through Practical Python and OpenCV to help you learn the fundamentals first. It can be challenging to combine computer vision/OpenCV techniques without a strong understanding of the fundamentals. Take a look, I have faith in you and I know you can do it! 🙂

thank for amazing tuto

how can i store that motion part as a image file instead of video any idea?

You can use the cv2.imwrite function to write images/frames to disk.

Can anyone help me how to change the green ball to some vehicle or knife. Whether this code is sufficient to detect any object or i have to write code for detecting the objects i want. If i have to write on my own then how to achieve it?

Here the key event is detecting green ball. How can i change it to Reporting unsafe driving outside my home to the authorities? Some one help me please.

You would want to swap out the green ball detector for an object detector capable of detecting weapons. I also demonstrate how to train your own custom weapon detector inside my book, Deep Learning for Computer Vision with Python.

Thanks for the source code Adrian.

You are saving videos as separate files whenever the green ball coming into the frame. So the number of output video files completely depends on how many times the green ball coming into the frame. But I want to make it as a single video file having all the frames that green ball appears in the frame

Why not have a separate Python script that merges all the videos into a single larger video?

Hi Adrian,

i have already combined some of your tutorials.

but the cpu usage is around 95-100%, so there is no much resources for other tasks.

However, do you have a guide for 24/7 video recording and webstreaming with a raspberry pi?

Try using the “pdb” Python debugger and look at what is eating up your CPU resources. It may be the threading from the “VideoStream” class (assuming that is the class you are using to access your webcam).

How can i save only one key event video clip and break the loop once a event is being captured and do not save the repeating event.

I had no idea my desire to make one little project turned into months of trying to learn more about all this. I too have been working on combining this with motion detection instead of color detection and adding regions of interest (roi). I also would like more than 10fps 😉 Are there any examples of how to convert this to C++? I am thinking it might be an entirely different install of everything on the Pi? All the libraries? Is Imutils a C++ library? I can program C++ and understand what I would need structure wise, but since everything here seems Python oriented, I don’t know how to get started with C++

Sorry Fred, I don’t have any C++ examples. I primarily just cover Python here on the PyImageSearch blog. Good luck with the project!