A few weeks ago, I demonstrated how to order the (x, y)-coordinates of a rotated bounding box in a clockwise fashion — an extremely useful skill that is critical in many computer vision applications, including (but not limited to) perspective transforms and computing the dimensions of an object in an image.

One PyImageSearch reader emailed in, curious about this clockwise ordering, and posed a similar question:

Is it possible to find the extreme north, south, east, and west coordinates from a raw contour?

“Of course it is!”, I replied.

Today, I’m going to share my solution to find extreme points along a contour with OpenCV and Python.

Finding extreme points in contours with OpenCV

In the remainder of this blog post, I am going to demonstrate how to find the extreme north, south, east, and west (x, y)-coordinates along a contour, like in the image at the top of this blog post.

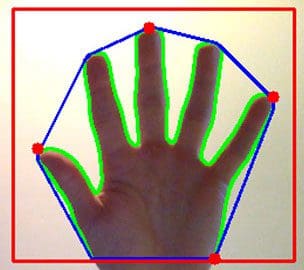

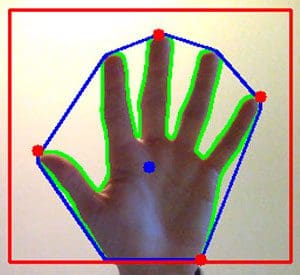

While this skill isn’t inherently useful by itself, it’s often used as a pre-processing step to more advanced computer vision applications. A great example of such an application is hand gesture recognition:

In the figure above, we have segmented the skin/hand from the image, computed the convex hull (outlined in blue) of the hand contour, and then found the extreme points along the convex hull (red circles).

By computing the extreme points along the hand, we can better approximate the palm region (highlighted as a blue circle):

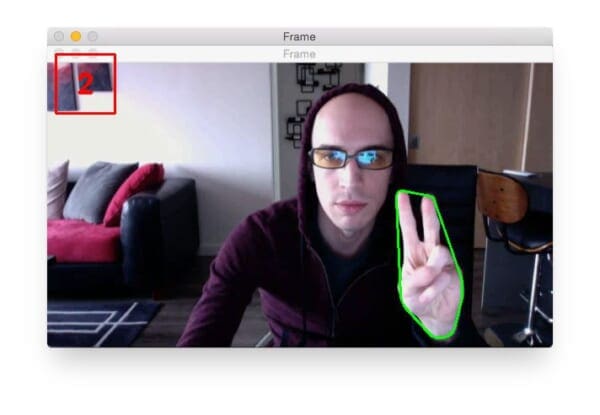

Which in turn allows us to recognize gestures, such as the number of fingers we are holding up:

Note: I cover how to recognize hand gestures inside the PyImageSearch Gurus course, so if you’re interested in learning more, be sure to claim your spot in line for the next open enrollment!

Implementing such a hand gesture recognition system is outside the scope of this blog post, so we’ll instead utilize the following image:

Where our goal is to compute the extreme points along the contour of the hand in the image.

Let’s go ahead and get started. Open up a new file, name it extreme_points.py, and let’s get coding:

    # import the necessary packages
    import imutils
    import cv2

    # load the image, convert it to grayscale, and blur it slightly
    image = cv2.imread("hand_01.png")
    gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    gray = cv2.GaussianBlur(gray, (5, 5), 0)

    # threshold the image, then perform a series of erosions +
    # dilations to remove any small regions of noise
    thresh = cv2.threshold(gray, 45, 255, cv2.THRESH_BINARY)[1]
    thresh = cv2.erode(thresh, None, iterations=2)
    thresh = cv2.dilate(thresh, None, iterations=2)

    # find contours in thresholded image, then grab the largest
    # one
    cnts = cv2.findContours(thresh.copy(), cv2.RETR_EXTERNAL,
        cv2.CHAIN_APPROX_SIMPLE)
    cnts = imutils.grab_contours(cnts)
    c = max(cnts, key=cv2.contourArea)

Lines 2 and 3 import our required packages. We then load our example image from disk, convert it to grayscale, and blur it slightly.

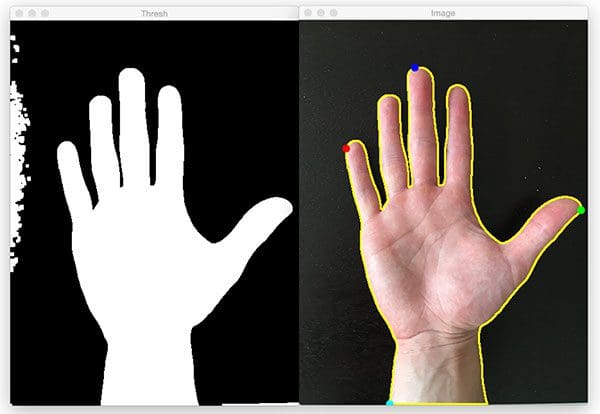

Line 12 performs thresholding, allowing us to segment the hand region from the rest of the image. After thresholding, our binary image looks like this:

In order to detect the outlines of the hand, we make a call to cv2.findContours, then keep the contour with the largest area, which we presume to be the hand itself (Lines 18-21).

Before we can find extreme points along a contour, it’s important to understand that a contour is simply a NumPy array of (x, y)-coordinates. Therefore, we can leverage NumPy functions to help us find the extreme coordinates.
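To make the array layout concrete, here is a small sketch that uses a hand-made contour instead of real cv2.findContours output (the diamond coordinates are made up for illustration). OpenCV stores a contour of N points as an array of shape (N, 1, 2), which is why the indexing in this post uses three axes.

```python
import numpy as np

# A hypothetical diamond-shaped contour, laid out the way OpenCV stores
# contours: N points, each wrapped in a singleton axis -> shape (N, 1, 2).
c = np.array([[[50, 10]], [[90, 60]], [[50, 110]], [[10, 60]]],
             dtype=np.int32)
print(c.shape)  # (4, 1, 2)

# The last axis selects the coordinate: index 0 is x, index 1 is y.
xs = c[:, :, 0]  # all x-values, shape (4, 1)
ys = c[:, :, 1]  # all y-values, shape (4, 1)

print(xs.ravel().tolist())  # [50, 90, 50, 10]
print(ys.ravel().tolist())  # [10, 60, 110, 60]
```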

    # determine the most extreme points along the contour
    extLeft = tuple(c[c[:, :, 0].argmin()][0])
    extRight = tuple(c[c[:, :, 0].argmax()][0])
    extTop = tuple(c[c[:, :, 1].argmin()][0])
    extBot = tuple(c[c[:, :, 1].argmax()][0])

For example, Line 24 finds the smallest x-coordinate (i.e., the “west” value) in the entire contour array c by calling argmin() on the x-value and grabbing the entire (x, y)-coordinate associated with the index returned by argmin().

Similarly, Line 25 finds the largest x-coordinate (i.e., the “east” value) in the contour array using the argmax() function.

Lines 26 and 27 perform the same operation, only for the y-coordinate, giving us the “north” and “south” coordinates, respectively.
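The same logic can be wrapped in a small helper and checked on a contour whose extremes are obvious by eye. This is only a sketch: the function name extreme_points and the diamond-shaped contour are assumptions made for illustration, not part of the original script.

```python
import numpy as np

def extreme_points(c):
    """Return the extreme points of a contour with shape (N, 1, 2)."""
    # argmin()/argmax() on the (N, 1) slice return a flat index, which is
    # also the row index of the point because the middle axis has length 1.
    found = {
        "left": c[c[:, :, 0].argmin()][0],
        "right": c[c[:, :, 0].argmax()][0],
        "top": c[c[:, :, 1].argmin()][0],
        "bottom": c[c[:, :, 1].argmax()][0],
    }
    # Cast to plain Python ints so the tuples are safe to pass to
    # drawing functions regardless of the NumPy integer type.
    return {name: (int(p[0]), int(p[1])) for name, p in found.items()}

# Hypothetical diamond-shaped contour in OpenCV's (N, 1, 2) layout
c = np.array([[[50, 10]], [[90, 60]], [[50, 110]], [[10, 60]]],
             dtype=np.int32)

print(extreme_points(c))
# {'left': (10, 60), 'right': (90, 60), 'top': (50, 10), 'bottom': (50, 110)}
```

Two details are worth keeping in mind. Image coordinates have their origin in the top-left corner with y growing downward, so the smallest y-value is the “north” point. And when several points tie for an extreme value, argmin() and argmax() return the first one they encounter.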

Now that we have our extreme north, south, east, and west coordinates, we can draw them on our image:

    # draw the outline of the object, then draw each of the
    # extreme points, where the left-most is red, right-most
    # is green, top-most is blue, and bottom-most is teal
    cv2.drawContours(image, [c], -1, (0, 255, 255), 2)
    cv2.circle(image, extLeft, 8, (0, 0, 255), -1)
    cv2.circle(image, extRight, 8, (0, 255, 0), -1)
    cv2.circle(image, extTop, 8, (255, 0, 0), -1)
    cv2.circle(image, extBot, 8, (255, 255, 0), -1)

    # show the output image
    cv2.imshow("Image", image)
    cv2.waitKey(0)

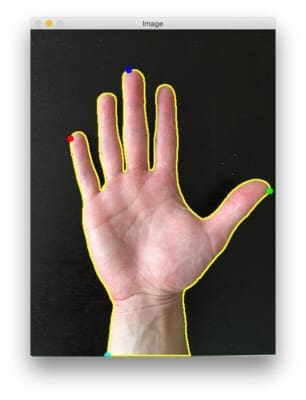

Line 32 draws the outline of the hand in yellow, while Lines 33-36 draw circles for each of the extreme points, detailed below:

- West: Red

- East: Green

- North: Blue

- South: Teal

Finally, Lines 39 and 40 display the results to our screen.

To execute our script, make sure you download the code and images associated with this post (using the “Downloads” form found at the bottom of this tutorial), navigate to your code directory, and then execute the following command:

$ python extreme_points.py

You should then see the following output image:

As you can see, we have successfully labeled each of the extreme points along the hand. The western-most point is labeled in red, the northern-most point in blue, the eastern-most point in green, and finally the southern-most point in teal.

Below we can see a second example of labeling the extreme points along a hand:

Let’s examine one final instance:

And that’s all there is to it!

Just keep in mind that each contour in the list returned by cv2.findContours is simply a NumPy array of (x, y)-coordinates. By calling argmin() and argmax() on this array, we can extract the extreme (x, y)-coordinates.

Summary

In this blog post, I detailed how to find the extreme north, south, east, and west (x, y)-coordinates along a given contour. This method can be used on both raw contours and rotated bounding boxes.
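One practical wrinkle: a raw contour has shape (N, 1, 2), while the four corners of a rotated bounding box (for example, the output of cv2.boxPoints) come as a (4, 2) array, so the three-axis indexing used earlier does not apply directly. A minimal sketch of one way to handle both, assuming a hypothetical helper named extreme_points_any and made-up coordinates, is to flatten everything to (N, 2) first:

```python
import numpy as np

def extreme_points_any(points):
    """Extreme points for either an (N, 1, 2) contour or an (N, 2) box."""
    # reshape(-1, 2) drops the singleton axis if present and is a no-op
    # for arrays that are already (N, 2).
    pts = np.asarray(points).reshape(-1, 2)
    return {
        "left": tuple(pts[pts[:, 0].argmin()].tolist()),
        "right": tuple(pts[pts[:, 0].argmax()].tolist()),
        "top": tuple(pts[pts[:, 1].argmin()].tolist()),
        "bottom": tuple(pts[pts[:, 1].argmax()].tolist()),
    }

# Hypothetical raw contour in OpenCV's (N, 1, 2) layout
contour = np.array([[[50, 10]], [[90, 60]], [[50, 110]], [[10, 60]]],
                   dtype=np.int32)

# Hypothetical rotated-rectangle corners in a (4, 2) float layout
box = np.array([[30, 0], [100, 40], [80, 75], [10, 35]], dtype=np.float32)

print(extreme_points_any(contour))
# {'left': (10, 60), 'right': (90, 60), 'top': (50, 10), 'bottom': (50, 110)}
print(extreme_points_any(box))
# {'left': (10.0, 35.0), 'right': (100.0, 40.0), 'top': (30.0, 0.0), 'bottom': (80.0, 75.0)}
```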

While finding the extreme points along a contour may not seem interesting on its own, it’s actually a very useful skill to have, especially as a preprocessing step to more advanced computer vision and image processing algorithms, such as hand gesture recognition.

To learn more about hand gesture recognition, and how finding extreme points along a contour is useful in recognizing gestures, be sure to sign up for the next open enrollment in the PyImageSearch Gurus course!

See you inside!
