My Uncle John is a long haul tractor trailer truck driver.

For each new assignment, he picks his load up from a local company early in the morning and then sets off on a lengthy, enduring cross-country trek across the United States that takes him days to complete.

John is a nice, outgoing guy with a smart, witty demeanor. He also fits the “cowboy of the highway” stereotype to a T, sporting a big ole’ trucker cap, a red-checkered flannel shirt, and a faded pair of Levi’s with more than one oil stain from quick and dirty roadside fixes. And he loves his country music.

I caught up with John a few weeks ago during a family dinner and asked him about his trucking job.

I was genuinely curious — before I entered high school I thought it would be fun to drive a truck or a car for a living (personally, I find driving to be a pleasurable, therapeutic experience).

But my question was also a bit self-motivated:

Earlier that morning I had just finished writing the code for this blog post and wanted to get his take on how computer science (and more specifically, computer vision) was affecting his trucking job.

The truth was this:

John was scared about his employment, his livelihood, and his future.

The first five sentences out of his mouth included the words:

- Tesla

- Self-driving cars

- Artificial Intelligence (AI)

Many proponents of autonomous, self-driving vehicles argue that the first industry that will be completely and totally overhauled by self-driving cars/trucks (even before consumer vehicles) is the long haul tractor trailer business.

If self-driving tractor trailers become a reality in the next few years, John has good reason to be worried: he’ll be out of a job, one that he’s been doing his entire life. He’s also getting close to retirement and needs to finish out his working years strong.

This isn’t speculation either: NVIDIA recently announced a partnership with PACCAR, a leading global truck manufacturer. The goal of this partnership is to make self-driving semi-trailers a reality.

After John and I were done discussing self-driving vehicles, I asked him the critical question that this very blog post hinges on:

Have you ever fallen asleep at the wheel?

I could tell instantly that John was uncomfortable. He didn’t look me in the eye. And when he finally did answer, it wasn’t a direct answer; instead, he recalled a story about a friend of his (name left out on purpose) who fell asleep after disobeying company policy on the maximum number of hours driven in a 24-hour period.

The man ran off the highway, the contents of his truck spilling all over the road, blocking the interstate almost the entire night. Luckily, no one was injured, but it gave John quite the scare as he realized that if it could happen to other drivers, it could happen to him as well.

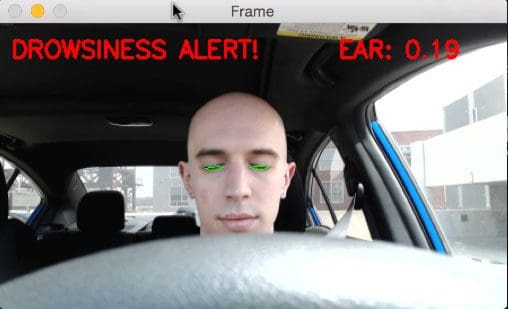

I then explained to John my work from earlier in the day — a computer vision system that can automatically detect driver drowsiness in a real-time video stream and then play an alarm if the driver appears to be drowsy.

While John said he was uncomfortable being recorded on video while driving, he did admit that the technique would be helpful in the industry and could ideally reduce the number of fatigue-related accidents.

Today, I am going to show you my implementation of detecting drowsiness in a video stream — my hope is that you’ll be able to use it in your own applications.

To learn more about drowsiness detection with OpenCV, just keep reading.

Drowsiness detection with OpenCV

Two weeks ago I discussed how to detect eye blinks in video streams using facial landmarks.

Today, we are going to extend this method and use it to determine how long a given person’s eyes have been closed. If their eyes have been closed for a certain amount of time, we’ll assume that they are starting to doze off and play an alarm to wake them up and grab their attention.

To accomplish this task, I’ve broken down today’s tutorial into three parts.

In the first part, I’ll show you how I set up my camera in my car so I could easily detect my face and apply facial landmark localization to monitor my eyes.

I’ll then demonstrate how we can implement our own drowsiness detector using OpenCV, dlib, and Python.

Finally, I’ll hop in my car and go for a drive (and pretend to be falling asleep as I do).

As we’ll see, the drowsiness detector works well and reliably alerts me each time I start to “snooze”.

Rigging my car with a drowsiness detector

The camera I used for this project was a Logitech C920. I love this camera as it:

- Is relatively affordable.

- Can shoot in full 1080p.

- Is plug-and-play compatible with nearly every device I’ve tried it with (including the Raspberry Pi).

I took this camera and mounted it to the top of my dash using some double-sided tape to keep it from moving around during the drive (Figure 1 above).

The camera was then connected to my MacBook Pro on the seat next to me:

Originally, I had intended on using my Raspberry Pi 3 due to (1) form factor and (2) the real-world implications of building a driver drowsiness detector using very affordable hardware; however, as last week’s blog post discussed, the Raspberry Pi isn’t quite fast enough for real-time facial landmark detection.

In a future blog post I’ll be discussing how to optimize the Raspberry Pi and the dlib compilation to enable real-time facial landmark detection. However, for the time being, we’ll simply use a standard laptop computer.

With all my hardware set up, I was ready to move on to building the actual drowsiness detector using computer vision techniques.

The drowsiness detector algorithm

The general flow of our drowsiness detection algorithm is fairly straightforward.

First, we’ll set up a camera that monitors a stream for faces:

If a face is found, we apply facial landmark detection and extract the eye regions:

Now that we have the eye regions, we can compute the eye aspect ratio (detailed here) to determine if the eyes are closed:

If the eye aspect ratio indicates that the eyes have been closed for a sufficiently long amount of time, we’ll sound an alarm to wake up the driver:

In the next section, we’ll implement the drowsiness detection algorithm detailed above using OpenCV, dlib, and Python.

Building the drowsiness detector with OpenCV

To start our implementation, open up a new file, name it detect_drowsiness.py, and insert the following code:

# import the necessary packages
from scipy.spatial import distance as dist
from imutils.video import VideoStream
from imutils import face_utils
from threading import Thread
import numpy as np
import playsound
import argparse
import imutils
import time
import dlib
import cv2

Lines 2-12 import our required Python packages.

We’ll need the SciPy package so we can compute the Euclidean distance between facial landmark points in the eye aspect ratio calculation (not strictly a requirement, but you should have SciPy installed if you intend on doing any work in the computer vision, image processing, or machine learning space).

We’ll also need the imutils package, my series of computer vision and image processing functions to make working with OpenCV easier.

If you don’t already have imutils installed on your system, you can install/upgrade imutils via:

$ pip install --upgrade imutils

We’ll also import the Thread class so we can play our alarm in a separate thread from the main thread to ensure our script doesn’t pause execution while the alarm sounds.

In order to actually play our WAV/MP3 alarm, we need the playsound library, a pure Python, cross-platform implementation for playing simple sounds.

The playsound library is conveniently installable via pip:

$ pip install playsound

However, if you are using macOS (like I did for this project), you’ll also want to install pyobjc, otherwise you’ll get an error related to AppKit when you actually try to play the sound:

$ pip install pyobjc

I only tested playsound on macOS, but according to both the documentation and Taylor Marks (the developer and maintainer of playsound), the library should work on Linux and Windows as well.

Note: If you are having problems with playsound, please consult their documentation as I am not an expert on audio libraries.

To detect and localize facial landmarks we’ll need the dlib library which is imported on Line 11. If you need help installing dlib on your system, please refer to this tutorial.

Next, we need to define our sound_alarm function which accepts a path to an audio file residing on disk and then plays the file:

def sound_alarm(path):
    # play an alarm sound
    playsound.playsound(path)

We also need to define the eye_aspect_ratio function which is used to compute the ratio of distances between the vertical eye landmarks and the distances between the horizontal eye landmarks:

def eye_aspect_ratio(eye):
    # compute the euclidean distances between the two sets of
    # vertical eye landmarks (x, y)-coordinates
    A = dist.euclidean(eye[1], eye[5])
    B = dist.euclidean(eye[2], eye[4])

    # compute the euclidean distance between the horizontal
    # eye landmark (x, y)-coordinates
    C = dist.euclidean(eye[0], eye[3])

    # compute the eye aspect ratio
    ear = (A + B) / (2.0 * C)

    # return the eye aspect ratio
    return ear

The return value of the eye aspect ratio will be approximately constant when the eye is open. The value will then rapidly decrease towards zero during a blink.

If the eye is closed, the eye aspect ratio will again remain approximately constant, but will be much smaller than the ratio when the eye is open.
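To put numbers on this, here is a minimal stdlib-only sketch of the same formula using `math.dist` in place of SciPy. The six landmark coordinates are made up for illustration and are assumed to follow the same ordering as `eye_aspect_ratio` above (index 0 and 3 are the eye corners, 1/2 the upper lid, 5/4 the lower lid).

```python
import math

Point = tuple[float, float]

def eye_aspect_ratio(eye: list[Point]) -> float:
    # two vertical distances between upper and lower lid landmarks
    a = math.dist(eye[1], eye[5])
    b = math.dist(eye[2], eye[4])
    # one horizontal distance between the two eye corners
    c = math.dist(eye[0], eye[3])
    return (a + b) / (2.0 * c)

# Hypothetical landmarks for an eye 30 px wide: open (lids 12 px apart)
# and nearly closed (lids 2 px apart).
open_eye = [(0, 0), (10, -6), (20, -6), (30, 0), (20, 6), (10, 6)]
closed_eye = [(0, 0), (10, -1), (20, -1), (30, 0), (20, 1), (10, 1)]

print(round(eye_aspect_ratio(open_eye), 3))    # 0.4
print(round(eye_aspect_ratio(closed_eye), 3))  # 0.067
```

Because the vertical distances are divided by the horizontal one, the ratio does not depend on how large the face appears in the frame, which is why a single threshold can be used.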

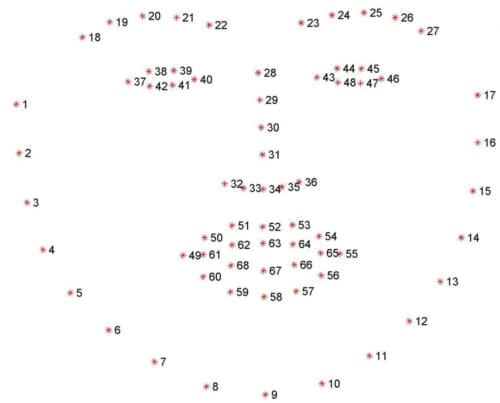

To visualize this, consider the following figure from Soukupová and Čech’s 2016 paper, Real-Time Eye Blink Detection using Facial Landmarks:

On the top-left we have an eye that is fully open with the eye facial landmarks plotted. Then on the top-right we have an eye that is closed. The bottom then plots the eye aspect ratio over time.

As we can see, the eye aspect ratio is constant (indicating the eye is open), then rapidly drops to zero, then increases again, indicating a blink has taken place.

In our drowsiness detector case, we’ll be monitoring the eye aspect ratio to see if the value falls but does not increase again, thus implying that the person has closed their eyes.

You can read more about blink detection and the eye aspect ratio in my previous post.

Next, let’s parse our command line arguments:

# construct the argument parse and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-p", "--shape-predictor", required=True,
    help="path to facial landmark predictor")
ap.add_argument("-a", "--alarm", type=str, default="",
    help="path to alarm .WAV file")
ap.add_argument("-w", "--webcam", type=int, default=0,
    help="index of webcam on system")
args = vars(ap.parse_args())

Our drowsiness detector requires one command line argument and accepts two optional ones, each of which is detailed below:

- --shape-predictor: This is the path to dlib’s pre-trained facial landmark detector. You can download the detector along with the source code to this tutorial by using the “Downloads” section at the bottom of this blog post.

- --alarm: Here you can optionally specify the path to an input audio file to be used as an alarm.

- --webcam: This integer controls the index of your built-in webcam/USB camera.
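One detail that trips people up: argparse turns the dash in `--shape-predictor` into an underscore, which is why the script later reads `args["shape_predictor"]`. The sketch below feeds the same parser an explicit argument list instead of the real command line so the result is easy to inspect; the file name is just an example string and nothing is loaded.

```python
import argparse

# same arguments as detect_drowsiness.py
ap = argparse.ArgumentParser()
ap.add_argument("-p", "--shape-predictor", required=True,
    help="path to facial landmark predictor")
ap.add_argument("-a", "--alarm", type=str, default="",
    help="path to alarm .WAV file")
ap.add_argument("-w", "--webcam", type=int, default=0,
    help="index of webcam on system")

# parse an explicit list rather than sys.argv
args = vars(ap.parse_args(
    ["--shape-predictor", "shape_predictor_68_face_landmarks.dat"]))

print(args["shape_predictor"])  # shape_predictor_68_face_landmarks.dat
print(args["alarm"] == "", args["webcam"])  # True 0
```

If `-p` is left out entirely, `parse_args` prints the usage line plus a "required" error and exits with status 2 instead of running the detector.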

Now that our command line arguments have been parsed, we need to define a few important variables:

# define two constants, one for the eye aspect ratio to indicate
# blink and then a second constant for the number of consecutive
# frames the eye must be below the threshold for to set off the
# alarm
EYE_AR_THRESH = 0.3
EYE_AR_CONSEC_FRAMES = 48

# initialize the frame counter as well as a boolean used to
# indicate if the alarm is going off
COUNTER = 0
ALARM_ON = False

Line 48 defines EYE_AR_THRESH. If the eye aspect ratio falls below this threshold, we’ll start counting the number of frames the person has closed their eyes for.

If the number of consecutive frames in which the person’s eyes have been closed reaches EYE_AR_CONSEC_FRAMES (Line 49), we’ll sound an alarm.

Experimentally, I’ve found that an EYE_AR_THRESH of 0.3 works well in a variety of situations (although you may need to tune it yourself for your own applications).

I’ve also set EYE_AR_CONSEC_FRAMES to 48, meaning that if a person has closed their eyes for 48 consecutive frames, we’ll play the alarm sound.

You can make the drowsiness detector more sensitive by decreasing the EYE_AR_CONSEC_FRAMES — similarly, you can make the drowsiness detector less sensitive by increasing it.
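Since EYE_AR_CONSEC_FRAMES is counted in frames, the real-world delay before the alarm depends on how many frames per second your loop actually processes. The sketch below converts between the two; the FPS values are assumptions for illustration, so measure your own pipeline, because face detection and landmarking can run well below the camera’s nominal frame rate.

```python
import math

def frames_for_seconds(seconds: float, fps: float) -> int:
    # smallest whole number of frames covering `seconds`; rounding first
    # guards against float noise such as 0.07 * 100 == 7.000000000000001
    return math.ceil(round(seconds * fps, 6))

def seconds_for_frames(frames: int, fps: float) -> float:
    # duration of `frames` consecutive frames at a given processing rate
    return frames / fps

# assumed processing rates, not measurements
for fps in (10, 16, 30):
    print(f"48 frames at {fps} FPS = {seconds_for_frames(48, fps):.1f} s")

print(frames_for_seconds(2.0, 24))  # 48
```

In other words, if you want the alarm to fire after a fixed number of seconds, you could derive EYE_AR_CONSEC_FRAMES from a measured FPS rather than hard-coding it.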

Line 53 defines COUNTER, the total number of consecutive frames where the eye aspect ratio is below EYE_AR_THRESH.

If COUNTER reaches EYE_AR_CONSEC_FRAMES, then we’ll set the boolean ALARM_ON (Line 54) to True.

The dlib library ships with a Histogram of Oriented Gradients-based face detector along with a facial landmark predictor — we instantiate both of these in the following code block:

# initialize dlib's face detector (HOG-based) and then create
# the facial landmark predictor
print("[INFO] loading facial landmark predictor...")
detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor(args["shape_predictor"])

The facial landmarks produced by dlib are an indexable list, as I describe here:

Therefore, to extract the eye regions from a set of facial landmarks, we simply need to know the correct array slice indexes:

# grab the indexes of the facial landmarks for the left and
# right eye, respectively
(lStart, lEnd) = face_utils.FACIAL_LANDMARKS_IDXS["left_eye"]
(rStart, rEnd) = face_utils.FACIAL_LANDMARKS_IDXS["right_eye"]

Using these indexes, we’ll easily be able to extract the eye regions via an array slice.
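The slice is ordinary Python indexing. In the sketch below, a plain list of 68 numbered points stands in for the NumPy landmark array, and the index ranges are my understanding of imutils’ 68-point table ((36, 42) for the right eye, (42, 48) for the left, end-exclusive); treat those values as an assumption and confirm them by printing `face_utils.FACIAL_LANDMARKS_IDXS` on your install.

```python
# Hypothetical stand-in for the landmark array: 68 (x, y) points whose
# coordinates are simply their index, so the slices are easy to read.
shape = [(i, i) for i in range(68)]

# assumed to mirror imutils' 68-point ranges (start inclusive, end exclusive)
FACIAL_LANDMARKS_IDXS = {
    "right_eye": (36, 42),
    "left_eye": (42, 48),
}

(lStart, lEnd) = FACIAL_LANDMARKS_IDXS["left_eye"]
(rStart, rEnd) = FACIAL_LANDMARKS_IDXS["right_eye"]

leftEye = shape[lStart:lEnd]
rightEye = shape[rStart:rEnd]
print(len(leftEye), leftEye[0], leftEye[-1])     # 6 (42, 42) (47, 47)
print(len(rightEye), rightEye[0], rightEye[-1])  # 6 (36, 36) (41, 41)
```

Each slice yields exactly six points, which is what `eye_aspect_ratio` expects.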

We are now ready to start the core of our drowsiness detector:

# start the video stream thread
print("[INFO] starting video stream thread...")
vs = VideoStream(src=args["webcam"]).start()
time.sleep(1.0)

# loop over frames from the video stream
while True:
    # grab the frame from the threaded video file stream, resize
    # it, and convert it to grayscale
    frame = vs.read()
    frame = imutils.resize(frame, width=450)
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

    # detect faces in the grayscale frame
    rects = detector(gray, 0)

On Line 69 we instantiate our VideoStream using the supplied --webcam index.

We then pause for a second to allow the camera sensor to warm up (Line 70).

On Line 73 we start looping over frames in our video stream.

Line 77 reads the next frame, which we then preprocess by resizing it to have a width of 450 pixels and converting it to grayscale (Lines 78 and 79).
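To my understanding, `imutils.resize` keeps the aspect ratio by scaling the height with the same ratio as the width. The sketch below assumes that behavior and only does the arithmetic, so no image library is needed.

```python
def resize_dims(w: int, h: int, width: int) -> tuple[int, int]:
    # scale factor that makes the output exactly `width` pixels wide
    r = width / float(w)
    # scale the height by the same factor to preserve the aspect ratio
    return (width, int(h * r))

print(resize_dims(1920, 1080, 450))  # (450, 253)
print(resize_dims(640, 480, 450))    # (450, 337)
```

A full 1080p frame has about 2.07 million pixels while a 450x253 frame has about 114 thousand, roughly 18 times fewer for the face detector to scan, which is the main reason for resizing before detection.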

Line 82 applies dlib’s face detector to find and locate the face(s) in the image.

The next step is to apply facial landmark detection to localize each of the important regions of the face:

    # loop over the face detections
    for rect in rects:
        # determine the facial landmarks for the face region, then
        # convert the facial landmark (x, y)-coordinates to a NumPy
        # array
        shape = predictor(gray, rect)
        shape = face_utils.shape_to_np(shape)

        # extract the left and right eye coordinates, then use the
        # coordinates to compute the eye aspect ratio for both eyes
        leftEye = shape[lStart:lEnd]
        rightEye = shape[rStart:rEnd]
        leftEAR = eye_aspect_ratio(leftEye)
        rightEAR = eye_aspect_ratio(rightEye)

        # average the eye aspect ratio together for both eyes
        ear = (leftEAR + rightEAR) / 2.0

We loop over each of the detected faces on Line 85 — in our implementation (specifically related to driver drowsiness), we assume there is only one face — the driver — but I left this for loop in here just in case you want to apply the technique to videos with more than one face.

For each of the detected faces, we apply dlib’s facial landmark detector (Line 89) and convert the result to a NumPy array (Line 90).

Using NumPy array slicing we can extract the (x, y)-coordinates of the left and right eye, respectively (Lines 94 and 95).

Given the (x, y)-coordinates for both eyes, we then compute their eye aspect ratios on Lines 96 and 97.

Soukupová and Čech recommend averaging both eye aspect ratios together to obtain a better estimation (Line 100).

We can then visualize each of the eye regions on our frame by using the cv2.drawContours function below — this is often helpful when we are trying to debug our script and want to ensure that the eyes are being correctly detected and localized:

        # compute the convex hull for the left and right eye, then
        # visualize each of the eyes
        leftEyeHull = cv2.convexHull(leftEye)
        rightEyeHull = cv2.convexHull(rightEye)
        cv2.drawContours(frame, [leftEyeHull], -1, (0, 255, 0), 1)
        cv2.drawContours(frame, [rightEyeHull], -1, (0, 255, 0), 1)

Finally, we are now ready to check to see if the person in our video stream is starting to show symptoms of drowsiness:

        # check to see if the eye aspect ratio is below the blink
        # threshold, and if so, increment the blink frame counter
        if ear < EYE_AR_THRESH:
            COUNTER += 1

            # if the eyes were closed for a sufficient number of
            # frames, then sound the alarm
            if COUNTER >= EYE_AR_CONSEC_FRAMES:
                # if the alarm is not on, turn it on
                if not ALARM_ON:
                    ALARM_ON = True

                    # check to see if an alarm file was supplied,
                    # and if so, start a thread to have the alarm
                    # sound played in the background
                    if args["alarm"] != "":
                        t = Thread(target=sound_alarm,
                            args=(args["alarm"],))
                        t.daemon = True
                        t.start()

                # draw an alarm on the frame
                cv2.putText(frame, "DROWSINESS ALERT!", (10, 30),
                    cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

        # otherwise, the eye aspect ratio is not below the blink
        # threshold, so reset the counter and alarm
        else:
            COUNTER = 0
            ALARM_ON = False

On Line 111 we make a check to see if the eye aspect ratio is below the “blink/closed” eye threshold, EYE_AR_THRESH.

If it is, we increment COUNTER, the total number of consecutive frames where the person has had their eyes closed.

If COUNTER reaches EYE_AR_CONSEC_FRAMES (Line 116), then we assume the person is starting to doze off.

Another check is made, this time on Lines 118 and 119, to see if the alarm is on; if it’s not, we turn it on.

Lines 124-128 handle playing the alarm sound, provided an --alarm path was supplied when the script was executed. We take special care to create a separate thread responsible for calling sound_alarm to ensure that our main program isn’t blocked until the sound finishes playing.
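Here is a minimal sketch of why the thread matters, using only the standard library. `fake_sound_alarm` is a hypothetical stand-in for `sound_alarm` that sleeps instead of playing audio, so the example runs even without playsound installed. Note that the attribute is spelled `daemon`; assigning to a misspelled name such as `deamon` silently creates an unused attribute and leaves the thread non-daemon, so the interpreter would wait for it at exit.

```python
import threading
import time

def fake_sound_alarm(path: str, done: threading.Event) -> None:
    # Hypothetical stand-in for sound_alarm(): sleeps for half a second
    # instead of playing `path`, then records that "playback" ended.
    time.sleep(0.5)
    done.set()

done = threading.Event()
start = time.monotonic()

t = threading.Thread(target=fake_sound_alarm, args=("alarm.wav", done))
t.daemon = True  # a daemon thread will not keep the process alive on exit
t.start()

# Control returns here right away; the main loop keeps processing frames.
elapsed = time.monotonic() - start
print(f"main thread resumed after {elapsed:.3f} s")
print("alarm still playing:", not done.is_set())

done.wait()  # demo only: let the fake playback finish before exiting
```

The trade-off of a daemon thread is that pressing `q` mid-alarm ends the process immediately, cutting the sound off rather than waiting for it to finish.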

Lines 131 and 132 draw the text DROWSINESS ALERT! on our frame — again, this is often helpful for debugging, especially if you are not using the playsound library.

Finally, Lines 136-138 handle the case where the eye aspect ratio is not below EYE_AR_THRESH, indicating the eyes are open. If the eyes are open, we reset COUNTER and ensure the alarm is off.
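The COUNTER/ALARM_ON logic can be pulled out into a small state machine and tested on a recorded EAR trace without a camera. This is a sketch of the same rules, not the script itself: the `DrowsinessState` class is hypothetical, the EAR values are invented, and EYE_AR_CONSEC_FRAMES is shrunk to 3 so the trace stays short.

```python
from dataclasses import dataclass

EYE_AR_THRESH = 0.3
EYE_AR_CONSEC_FRAMES = 3  # deliberately tiny for this demo; the post uses 48

@dataclass
class DrowsinessState:
    counter: int = 0
    alarm_on: bool = False

    def update(self, ear: float) -> bool:
        """Feed one frame's EAR; return True only on the frame the alarm starts."""
        if ear < EYE_AR_THRESH:
            self.counter += 1
            if self.counter >= EYE_AR_CONSEC_FRAMES and not self.alarm_on:
                self.alarm_on = True
                return True
        else:
            # eyes open again: reset, mirroring the else-branch in the script
            self.counter = 0
            self.alarm_on = False
        return False

state = DrowsinessState()
# hypothetical trace: a 2-frame blink, then a 5-frame eye closure
ears = [0.35, 0.1, 0.1, 0.35, 0.1, 0.1, 0.1, 0.1, 0.1, 0.35]
starts = [i for i, ear in enumerate(ears) if state.update(ear)]
print(starts)  # [6]
```

The short blink never reaches the frame limit, while the sustained closure starts the alarm exactly once (on its third closed frame), which is why the ALARM_ON flag keeps a new sound thread from being spawned on every subsequent closed frame.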

The final code block in our drowsiness detector handles displaying the output frame to our screen:

        # draw the computed eye aspect ratio on the frame to help
        # with debugging and setting the correct eye aspect ratio
        # thresholds and frame counters
        cv2.putText(frame, "EAR: {:.2f}".format(ear), (300, 30),
            cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 0, 255), 2)

    # show the frame
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

# do a bit of cleanup
cv2.destroyAllWindows()
vs.stop()

To see our drowsiness detector in action, proceed to the next section.

Testing the OpenCV drowsiness detector

To start, make sure you use the “Downloads” section below to download the source code + dlib’s pre-trained facial landmark predictor + example audio alarm file utilized in today’s blog post.

I would then suggest testing the detect_drowsiness.py script on your local system in the comfort of your home/office before you start to wire up your car for driver drowsiness detection.

In my case, once I was sufficiently happy with my implementation, I moved my laptop + webcam out to my car (as detailed in the “Rigging my car with a drowsiness detector” section above), and then executed the following command:

$ python detect_drowsiness.py \
    --shape-predictor shape_predictor_68_face_landmarks.dat \
    --alarm alarm.wav

I have recorded my entire drive session to share with you — you can find the results of the drowsiness detection implementation below:

Note: The actual alarm.wav file came from this website, credited to Matt Koenig.

As you can see from the screencast, once the video stream was up and running, I carefully started testing the drowsiness detector in the parking garage by my apartment to ensure it was indeed working properly.

After a few tests, I then moved on to some back roads and parking lots where there was very little traffic (it was a major holiday in the United States, so there were very few cars on the road) to continue testing the drowsiness detector.

Remember, driving with your eyes closed, even for a second, is dangerous, so I took extra special precautions to ensure that the only person who could be harmed during the experiment was myself.

As the results show, our drowsiness detector is able to detect when I’m at risk of dozing off and then plays a loud alarm to grab my attention.

The drowsiness detector is even able to work in a variety of conditions, including direct sunlight when driving on the road and low/artificial lighting while in the concrete parking garage.


Summary

In today’s blog post I demonstrated how to build a drowsiness detector using OpenCV, dlib, and Python.

Our drowsiness detector hinged on two important computer vision techniques:

- Facial landmark detection

- Eye aspect ratio

Facial landmark prediction is the process of localizing key facial structures on a face, including the eyes, eyebrows, nose, mouth, and jawline.

Specifically, in the context of drowsiness detection, we only needed the eye regions (I provide more detail on how to extract each facial structure from a face here).

Once we have our eye regions, we can apply the eye aspect ratio to determine if the eyes are closed. If the eyes have been closed for a sufficiently long period of time, we can assume the user is at risk of falling asleep and sound an alarm to grab their attention. More details on the eye aspect ratio and how it was derived can be found in my previous tutorial on blink detection.

If you’ve enjoyed this blog post on drowsiness detection with OpenCV (and want to learn more about computer vision techniques applied to faces), be sure to enter your email address in the form below — I’ll be sure to notify you when new content is published here on the PyImageSearch blog.

Download the Source Code and FREE 17-page Resource Guide

Enter your email address below to get a .zip of the code and a FREE 17-page Resource Guide on Computer Vision, OpenCV, and Deep Learning. Inside you'll find my hand-picked tutorials, books, courses, and libraries to help you master CV and DL!

Hello, Adrian.

I’d like to ask you a few questions about this post.

I use a Raspberry Pi 3 with a SAMSUNG SPC-B900W webcam.

You mentioned in the article that the Raspberry Pi 3 did not perform well.

I’d like to improve this, but how can I solve this problem?

Please see my reply to “N.Trewartha” regarding the Raspberry Pi 3.

Dear Adrian,

If someone wears sunglasses, how will you detect that the person is sleeping?

Please reply.

Bro, your code is awesome.

I have a small question: which packages do I need to install on Windows 10?

And does the driver drowsiness detection code work on Windows?

Yes, this code will run on Windows 10 provided you have OpenCV and dlib installed.

It works easily on Windows. You need to install a few Python libraries: opencv, scipy, numpy, imutils, cmake, and playsound. Before installing these packages, first check your Python installation and its environment path settings.

Dear Amit Shukla, can you please tell me how to install these libraries from the command prompt? When I try to install dlib, opencv, scipy, and everything else, I get a connection timeout error every time. Please help me with this problem.

8.5.2017

A super project.

I will try to do this on an RPi 3 so I have a solution for the car.

Any tips ?

If you intend on using a Raspberry Pi for this, I would:

1. Use Haar cascades rather than the HOG face detector. While Haar cascades are less accurate, they are also faster.

2. Use skip frames and only detect faces in every N frames. This will also speed up the pipeline.

Adrian, what exactly do you mean when you say “Use skip frames”? Is this a switch or an option that we can use? I am planning to implement this on an R-Pi3 in my car and would love to understand more. Btw, fantastic article. A huge fan of your site and courses.

Also, any tips or articles on precompiling the dlib libraries and performance tips for the R-Pi3?

By “skip frames” I mean literally only process every N frames for face detection (i.e., “skipping frames”). I plan on doing an updated blog post on how to optimize facial landmark detection for the Raspberry Pi, so stay tuned for that post.

WOW!!!

THANKS~

I’m waiting for the results, too!

Hey Adrian/Vikram,

I found that if you only perform detection every once in a while, it improves performance quite significantly. I trained my own dlib shape predictor/detector, and it ran very smoothly on a Raspi by only performing detection once at startup and then on large movements. (Please bear in mind the picamera was at this stage locked in a fixed place, so there was not much movement; this may not work for your own setup, but give it a shot!)

Thanks for sharing Alexon. And just to add to the comment further, this method is called “frame skipping” and is often used to improve the speed of frame processing pipelines.
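A minimal sketch of frame skipping as described in these replies: run the expensive detector only on every N-th frame and reuse the most recent result in between. `detect_with_skipping` and `fake_detect` are hypothetical names used for illustration; in the drowsiness script you would presumably still run the landmark predictor on every frame using the most recent face rectangle, since the eye aspect ratio needs fresh landmarks.

```python
from typing import Callable, Iterable, List

def detect_with_skipping(frames: Iterable, detect: Callable[[object], list],
                         every_n: int) -> List[list]:
    """Run `detect` on every `every_n`-th frame and reuse the most recent
    result for the frames in between (basic frame skipping)."""
    results: List[list] = []
    last: list = []
    for i, frame in enumerate(frames):
        if i % every_n == 0:
            # expensive step, e.g. running a face detector
            last = detect(frame)
        results.append(last)
    return results

calls = []

def fake_detect(frame):
    # hypothetical detector: records the call and "finds" one box
    calls.append(frame)
    return [("face", frame)]

out = detect_with_skipping(range(10), fake_detect, every_n=4)
print(len(calls))  # 3
print(out[5])      # [('face', 4)]
```

Ten frames cost only three detector calls here; the price is that a face which moves quickly is tracked with a box that may be up to N-1 frames stale.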

Awesome Adrian! Fantastic post as usual. Looking forward to the release of your deep learning book!

Thank you Kenny! 🙂

How many FPS can you process?

This depends on what device you are running on.

How can you detect drowsiness when a driver wears shades?

you can’t

My idea was to use an IR camera, which usually takes a thermal image of the face instead of an RGB color image. There should be a slight variation in the thermal image when the eyes are closed, even when shades are on.

It should definitely work with a good IR camera.

Fantastic work!

What if the driver wears sunglasses? Any ideas?

If the driver wears sunglasses and you cannot detect the eyes then you cannot apply this algorithm. I would suggest extending the approach to also monitor the head tilt of the driver as well.

Typical sunglasses filter out visible light but do not block infrared.

Chevrolet’s Super Cruise has an IR emitter built into the steering wheel. On top of the steering column they have a camera with visible light filter (and IR pass through). By using the IR reflections from the eyes they ensure that the driver is watching the road.

That’s pretty cool, thanks for sharing Marco.

Is this the most dangerous and risky software test you have ever made?

Off the top of my head, yes. But I was driving very slowly (5-10 MPH) on uncrowded streets. The video made it seem like I was going much faster.

I love to read your great posts. Amazing work, very impressive.

Greetings from Germany.

Thank you Hermi, I hope all is well in Germany.

Fantastic job Adrian! Both the results and the write-up. I’m patiently waiting for an ultra dice counter. 🙂

I’m glad you enjoyed the blog post mapembert! What do you mean by an “ultra dice counter”?

Nice tutorial and nice application for facial landmarks, thank you! Cool car! (I am a Subaru lover too 🙂)

Thanks Oleh, I’m glad you enjoyed the tutorial! I also really love my Subaru. Living in the north-eastern part of the United States, it’s often helpful to have AWD to get around on snowy days 😉

Awesome work Adrian! A slight change from Blink detector but a nice application.

I’ve a question regarding this.

Don’t you think you should also consider the moving state of the car, since there’s no point in any alert if the car is stationary and the driver is sleepy?

I know we would need some sensor to detect the speed of the car for this. But I would like to know exactly what device we need to use, how we connect it to our system, and which modules our code requires to incorporate this functionality.

I often get questions on how to build practical computer vision applications based on previous blog posts. This post on drowsiness detection, as you noted, is an extension of blink detection.

As for considering the moving state of the car, absolutely — but that’s outside what we are focusing on: computer vision. If you were to implement this method in a factory for cars you would have sensors that could tell you if the car was moving, how fast, etc. Exactly how you access this information is dependent on the manufacturer of the car.

In view of the importance of this application, would it not be sensible to use a faster single board computer, such as perhaps an Odroid? Or would that still be inadequate?

For an entirely self-contained project I would likely use a device from the NVIDIA TX series.

Thank you.

The connection was successful.

Movement is about 5 seconds slower.

What should I do if my camera is slow?

Thank you.

The connection was successful.

But there is no sound.

Is there a solution?

What should I download separately?

If there is no sound, then there is an issue with the playsound library. As I mentioned in the blog post, I’m not an expert on playing sounds with the Python programming language, so you will need to consult the playsound documentation.

Nice practical application of OpenCV. I am a huge fan of your blog; however, my primary niche of interest is in the geosciences field.

Any chance you will venture into creating geo-related blog posts in the future?

I mean OpenCV in GIS, Remote Sensing, Geomatics, Geology, Geography, etc.

Hi Umar — I personally don’t do any work with geo-related projects, but it’s something I would consider exploring in the future.

Do you have any plans to support night driving?

At the present time no, but I will certainly consider it.

Hi, I work on a Raspberry Pi 3. I think I did everything right, but I get an error like this:

usage: detect_drowsiness.py [-h] -p SHAPE_PREDICTOR [-a ALARM] [-w WEBCAM]

detect_drowsiness.py: error: argument -p/--shape-predictor is required

It’s not an error. You need to read up on command line arguments before continuing.
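For readers who hit this message: argparse prints it and exits whenever an option declared with `required=True` is missing. A minimal sketch of a parser matching the usage line above (the `--alarm` and `--webcam` defaults are assumptions for illustration, not necessarily what the downloaded script uses):

```python
import argparse


def build_parser():
    # Mirrors the usage line: -p is required, -a and -w are optional
    ap = argparse.ArgumentParser(prog="detect_drowsiness.py")
    ap.add_argument("-p", "--shape-predictor", required=True,
                    help="path to facial landmark predictor")
    ap.add_argument("-a", "--alarm", type=str, default="",
                    help="path to alarm .wav file (assumed default: no alarm)")
    ap.add_argument("-w", "--webcam", type=int, default=0,
                    help="index of webcam on system (assumed default: 0)")
    return ap
```

With a parser like this, the script would be run as `python detect_drowsiness.py --shape-predictor shape_predictor_68_face_landmarks.dat`, and the path ends up in `args["shape_predictor"]` because argparse converts dashes in long option names to underscores.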

I am also getting the same error but don’t know what to do.

$ python pi_detect_drowsiness.py

usage: pi_detect_drowsiness.py [-h] -c CASCADE -p SHAPE_PREDICTOR [-a ALARM]

pi_detect_drowsiness.py: error: the following arguments are required: -c/--cascade, -p/--shape-predictor

I solved my problem with this:

$ python pi_detect_drowsiness.py --shape-predictor shape_predictor_68_face_landmarks.dat --cascade haarcascade_frontalface_default.xml

Thank you all.

For others struggling with the same issue please read this post on command line arguments.

I’m using Windows. I am stuck at the command line arguments and don’t know how to run this code.

It’s okay if you are using Windows, command line arguments still work in Windows. To run the script open a command line prompt and follow the instructions in the post. If you need help with command line arguments, read this post first.

Hi Omer. I am also having the same problem. Did you fix it? Please let me know.

Hi, I have the same problem. Did you fix it? Please let me know.

usage: detect_drowsiness.py [-h] -p SHAPE_PREDICTOR [-a ALARM] [-w WEBCAM]

detect_drowsiness.py: error: the following arguments are required: -p/--shape-predictor

I am using Windows 10.

usage: detect_drowsiness.py [-h] -p SHAPE_PREDICTOR [-a ALARM] [-w WEBCAM]

detect_drowsiness.py: error: the following arguments are required: -p/--shape-predictor

How to solve the above error?

Thanks in advance

Please read the comments before posting. I have addressed this question in my reply to “Ömer”.

The playsound library was not working and gave an import error, so I used pygame instead. I redefined sound_alarm with the pygame code inside it and called it in a separate thread, and it’s working fine.
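A minimal sketch of the threading pattern Nitesh describes, assuming nothing about the playback library: `sound_alarm` below is a stand-in that only sleeps, and `start_alarm` is a hypothetical helper name. Replace the stand-in's body with real playback calls (for example, the pygame calls shown elsewhere in this thread).

```python
import threading
import time


def sound_alarm(path):
    # Stand-in for the real playback call (pygame, playsound, ...);
    # it only simulates a 0.1 s sound so the sketch runs anywhere
    time.sleep(0.1)


def start_alarm(path, play=sound_alarm):
    # Run playback in a daemon thread so the video loop keeps processing
    # frames while the sound plays; daemon=True lets the program exit
    # without waiting for the sound to finish
    t = threading.Thread(target=play, args=(path,), daemon=True)
    t.start()
    return t


if __name__ == "__main__":
    alarm_on = False
    for drowsy in [False, True, True, True]:
        if drowsy and not alarm_on:
            # Start the alarm only once per drowsy episode
            alarm_on = True
            start_alarm("alarm.wav")
        elif not drowsy:
            alarm_on = False
```

The `alarm_on` flag matters: without it, a new thread would be started on every drowsy frame.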

Thanks

Thanks for sharing Nitesh!

How can I get mine working? My video stream is very slow and the sound is not working.

Playsound library working in my case. Thanks for the suggestion though 🙂

Hey.

I have tried to run the code on a Raspberry Pi 3.

The code works fine but has a delay of 5-10 seconds.

What would you suggest I do to run it in real time on the Pi?

I will be doing a separate blog post that provides optimizations for running blink detection and drowsiness detection on the Raspberry Pi. There are a number of optimizations that need to be made, too many to detail in a comment.

Hello Adrian

Does it work if the driver is wearing glasses? Especially sunglasses?

Thx

In most cases, no. You need to be able to reliably detect the facial landmarks surrounding the eyes. Sunglasses especially can obscure this and give incorrect results. Remember, if you can’t detect eyes, you can’t detect blinks.

Thanks for writing this article! This is something I’ve been looking for.

I live in South Korea, and deadly traffic accidents caused by drivers (especially overworked bus or truck drivers) falling asleep behind the wheel occur almost regularly.

I’ve been thinking about implementing a system that utilizes dual cameras, one for eye blinking monitoring, the other for monitoring the road.

The front road monitoring camera would be capturing the image of the car in your lane and by analyzing how rapidly you are approaching the vehicle, you could warn the driver. I have a few vague ideas as to how to solve this problem but I am just starting to wet my beak in computer vision so if you write an article about this subject, I’d appreciate it so much!

[INFO] loading facial landmark predictor…

Traceback (most recent call last):

File "/home/pi/Downloads/drowsiness-detection/detect_drowsiness.py", line 64, in <module>

predictor = dlib.shape_predictor(args["shape_predictor"])

RuntimeError: Unable to open shape_predictor_68_face_landmarks.dat

I got this error on my Raspberry Pi 🙁

Make sure you use the “Downloads” section of this blog post to download the source code and shape_predictor_68_face_landmarks.dat file.

How did you solve this error? I have got the same error too.

To solve this error you will need to read up on command line arguments and how they work. Once you read up on them you will understand how to solve this error.

Hi Adrian!

I got the following error and don’t know what to do!

…

(h, w) = image.shape[:2]

AttributeError: 'NoneType' object has no attribute 'shape'

Double-check that OpenCV can access your webcam. I cover the reason for these NoneType errors in this blog post.

I am also having the same error. Did you find out how to solve it ?

If you are using a desktop (not a laptop), you must pass “--webcam 0” as a command line argument. Check the line that sets the webcam index and change “--webcam 1” to “--webcam 0”.

Can we use a laptop webcam?

Yes, you can absolutely use a laptop webcam. I used a laptop webcam to debug this script before I moved to a normal webcam in my car.

Do you think it is a good idea to reuse the detected pose from each frame to implement a tracking algorithm?

The result would be the same as running landmark detection on every frame. I would be glad if you could recommend some papers on tracking.

Best Regards,

Ricardo

Object tracking in video is a huge body of work. My main suggestion would be to start with dlib’s correlation tracker and go from there.

Hey Adrian, nice post! This helps me a lot to understand the science behind this project. I am using a Raspberry Pi 3 and can’t figure out how to skip frames or use a Haar cascade instead of HOG. Any references for that?

And when will you release the Raspberry Pi version of this tutorial? Can’t wait for your next interesting post.

Thanks

I’m not sure when I’ll be releasing the Raspberry Pi version of the tutorial — most of my time lately has been spent writing Deep Learning for Computer Vision with Python.

As for using Haar cascades for face detection, be sure to take a look at Practical Python and OpenCV where I discuss how to perform face detection in video streams using Haar cascades.

Adrian, I have a similar problem: detecting eye deflection. I have a video file of the eye region of a QA person who checks for defective bottles. If the person looks at one point, there is no defect; as soon as their eyes deflect up or sideways, there may be a defect. How can this be implemented?

I’m not familiar with the term “eye deflection”. Can you explain it or provide a link to a page that describes it?

Great Work Adrian….

I have noticed that when two faces come into the frame, it detects both and the EAR values overlap. In real-time driving I don’t want to detect any face other than the first one. Any suggestions for implementing that?

For anyone facing the sound problem: I installed the pygame module and it works fine.

```python
import pygame


def sound_alarm(path):
    # play an alarm sound; music.play() is non-blocking, so the
    # sound keeps playing while the video loop continues
    pygame.mixer.init()
    pygame.mixer.music.load(path)
    pygame.mixer.music.play()
```

Hopefully it helps others 🙂

There are a few methods to do this. The easiest solution is to find the face with the largest bounding box as this face will be the one closest to the camera.
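A sketch of the largest-bounding-box idea, assuming the boxes are already (x, y, w, h) tuples (with dlib rectangles you could convert each one with imutils' `face_utils.rect_to_bb`, or use `rect.width() * rect.height()` directly). The helper name is hypothetical:

```python
def largest_face(rects):
    # rects: list of (x, y, w, h) boxes; returns the one with the largest
    # area, which is usually the face closest to the camera, or None if
    # no faces were detected
    if not rects:
        return None
    return max(rects, key=lambda r: r[2] * r[3])
```

Only the selected box would then be passed to the landmark predictor, so a passenger's face never contributes to the EAR.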

find the face with the largest bounding box

You use the cv2.boundingRect function to compute the bounding box coordinates. If you are new to working with OpenCV that’s okay, but I would recommend you read through Practical Python and OpenCV to help you learn the fundamentals, including face detection and working with bounding boxes.

Thanks a lot, dude, it really worked great!

Hi Adrian. I really love your tutorials; they’ve helped me a lot. I have to do a project using blink detection, but my teacher won’t let me use a PC, so I want to use a Raspberry Pi 3. But as you said, it’s not fast enough. What other development board can I use that is fast enough?

You can make this code fast enough to run on the Raspberry Pi. Swap out the dlib HOG + Linear SVM detector to use Haar cascades and use skip frames.
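A minimal sketch of the skip-frames idea, with hypothetical names and an assumed skip of 3: the expensive detector runs only on every third frame and its boxes are reused in between, while the cheaper landmark predictor can still run on every frame. `detect` stands in for a Haar cascade or dlib detector call.

```python
def should_detect(frame_idx, skip=3):
    # Run the expensive face detector only on every `skip`-th frame
    return frame_idx % skip == 0


def track_faces(frames, detect, skip=3):
    # Reuse the most recent detections on the frames in between
    last_rects = []
    out = []
    for i, frame in enumerate(frames):
        if should_detect(i, skip):
            last_rects = detect(frame)
        out.append(last_rects)
    return out
```

The trade-off is that a reused box lags behind a moving head by up to `skip - 1` frames.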

Hi Adrian, I agree with Itzia about your posts and how much they help us improve at computer vision programming!!

Could you make an example showing how to swap out the dlib HOG + Linear SVM detector for Haar cascades? We would be really grateful!!!

Yes, I will be doing a dedicated Haar cascade + Raspberry Pi blog post in the future (hopefully soon).

please please please 🙂

According to my current schedule, I’ll be releasing the Raspberry Pi + drowsiness detector post in October 2017 (i.e., later this month).

Train engineers (drivers) are afflicted by the same issue. The solution there is much lower tech. They have a pedal that they have to repeatedly press throughout their shift. If they fail to press the pedal in the allotted time an audio warning is sounded. If the warning goes unheeded (presumably because the engineer fell asleep) then the train comes to a stop.

Excellent solution and a great example of how simple engineering can be used instead of more complicated approaches.

Hey... Thanks a lot for your posts, I regularly follow them. Your tutorials are fun and easy to understand. Recently I have developed a keen interest in explainable AI (XAI), but there aren’t many papers or interesting applications available on the computer vision and image processing side of XAI. I was hoping you could come up with some fun applications in this field.

Hi Anne — I think you would benefit greatly from the PyImageSearch Gurus course and my new book, Deep Learning for Computer Vision with Python. Inside both the course and book I include practical, real-world projects.

Hi Adrian, I need to know how to control a Raspberry Pi with voice commands. Like Siri, I want to get the date, time, weather, and meanings of words, or execute any Python code, just through voice commands with a wake word and “What is the time?” style commands.

Hi Kiruthika — I am not familiar with voice command libraries/packages.

Hi Adrian, I am getting a “select timeout” error every time I run this code. Please help me out.

This sounds like an issue with your camera. Double-check that the camera is connected properly to your system and that you can access it via OpenCV.

Hey Adrian, cool stuff. The open source movement is remarkable. That is progress, also thanks to your contributions.

Anyhow, imagine I have exploited your tutorial to make a yawn detector. When people yawn with their mouth wide open it’s straightforward, but different people have different styles of yawning so what could you suggest to allow also people who yawn by placing their hand in front of the mouth to be detected reliably following your approach?

At that point you would need to train a machine learning classifier to recognize various types of yawns. Using simple heuristics like the aspect ratio of facial regions is not going to be robust enough, especially if parts of the face are occluded.

thanks

I am not able to install the dlib library on Windows. Please help.

Hi Darshil — I don’t support Windows here on the PyImageSearch blog, only Linux and macOS. Please take a look at the official dlib install instructions for Windows.

Hi, Dr. Rosebrock

Your posts are very helpful for me. Thanks a lot.

I have a question.

You use the eye aspect ratio (EAR) method.

I think ‘PERCLOS’ is also good method to detect drowsiness.

PERCLOS is the ratio of the full size of the eyes to the size of current eyes.

I want to calculate PERCLOS, but I have a problem.

I can calculate the size of current eyes, but I can’t calculate full size of them (fully opened eyes).

How can I calculate it?

Sorry for poor English skill.

I haven’t used PERCLOS before. Do you have a link I could use to read more about it?

Of course

https://ntl.bts.gov/lib/10000/10100/10114/tb98-006.pdf

https://image.slidesharecdn.com/201410icasmmexicocity-absent-notgiven-150621212630-lva1-app6892/95/detecting-fatigue-lessons-learned-19-638.jpg?cb=1434922163

Thanks for sharing. You mentioned not being able to compute the size of the “fully open” eyes. Can you elaborate on what you mean by that? If the facial landmarks can localize the eyes you can compute the size.
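A sketch of one way around the “fully open” problem, under several assumptions: PERCLOS is taken here as the P80 variant described in the FHWA brief linked above (the fraction of time the eyes are at least 80% closed), EAR is used as a proxy for eye openness, and the “fully open” size is simply the largest EAR seen during a short calibration period at start-up. The class name, window length, and calibration length are placeholders:

```python
from collections import deque


class Perclos:
    """Rolling PERCLOS estimate from per-frame eye openness (EAR) values."""

    def __init__(self, calib_frames=30, window=900, closed_frac=0.2):
        self.calib_frames = calib_frames  # frames used to learn "fully open"
        self.closed_frac = closed_frac    # below 20% of open = 80% closed
        self.calib = []
        self.baseline = None
        self.window = deque(maxlen=window)  # e.g. 900 frames = 30 s at 30 FPS

    def update(self, ear):
        # Calibration: the largest openness seen becomes the "fully open" size
        if self.baseline is None:
            self.calib.append(ear)
            if len(self.calib) >= self.calib_frames:
                self.baseline = max(self.calib)
            return None
        closed = ear < self.closed_frac * self.baseline
        self.window.append(closed)
        # Fraction of frames in the window where the eye counted as closed
        return sum(self.window) / len(self.window)
```

Taking the maximum makes the baseline sensitive to a single noisy frame; a high percentile of the calibration values may be a more robust choice.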

I apologize for not being word-perfect in English.

English is not my mother tongue; please excuse any errors on my part.

Hi Adrian,

Thanks for this wonderful tutorial. Huge fan of yours. I want to know how we can detect yawning and head movement of drivers, in addition to eye blinks, for detecting drowsiness, as this would give a better indication of their condition. Could you explain a little if possible? Thanks in advance.

Head movement can be tracked by monitoring the (x, y)-coordinates of the facial landmarks across successive frames. You could combine this approach with this post to monitor how direction changes. Yawning could potentially be distinguished by monitoring the lips/mouth landmarks and applying a similar aspect ratio test.
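A minimal sketch of tracking head movement from landmark coordinates, assuming `points` is the list of (x, y) landmarks for one face in each frame (for example, the output of `face_utils.shape_to_np`). The function names are hypothetical, and what counts as a “large” movement would need tuning:

```python
import math


def centroid(points):
    # Mean (x, y) of a set of facial landmarks
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def head_motion(prev_points, curr_points):
    # Displacement (dx, dy) of the landmark centroid between two
    # successive frames, plus the straight-line distance moved
    (px, py), (cx, cy) = centroid(prev_points), centroid(curr_points)
    dx, dy = cx - px, cy - py
    return dx, dy, math.hypot(dx, dy)
```

In image coordinates y grows downward, so a positive dy means the face moved down in the frame.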

Hi Adrian,

Thanks for this wonderful tutorial. I’m a fan of yours. I want to know how I can solve this problem:

[INFO] loading facial landmark predictor…

[INFO] starting video stream thread…

Illegal instruction (core dumped)

Hi Saimon — can you insert some “print” statements into your code to debug and determine exactly which line is causing the seg-fault? I would need to know exactly which line is causing the problem to advise.

Hello, Adrian!

My program is terminating in a similar way too!

[INFO] loading facial landmark predictor…

[INFO] starting video stream thread…

here1

here2

here3

here4

here5

here6

Illegal instruction

(I inserted some “here#” statements to debug the code.) It stopped here:

```python
gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
print("here6")  # this was the last "here#" printed

# detect faces in the grayscale frame
rects = detector(gray, 0)
print("here7")
```

The webcam starts and stops in a few seconds and no frame appears.

It sounds like your system is trying to compute the detected faces. Since it’s this line that is throwing the illegal instructions error, I think you should try re-compiling and re-installing dlib.

Do you think this method will work with an infrared camera? That is, do you think the face detector you used will work on such images?

Hi, I’m using a Raspberry Pi 3 and I’m facing a problem with the scipy library. It is installed via pip3 (the latest version), but I get this error:

from scipy import distance as dist

ImportError: cannot import name 'distance'

I want to know the cause of the problem. Thanks.

Which version of SciPy do you have installed?
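On the import error above: `distance` lives in the `scipy.spatial` subpackage, so the import that works is `from scipy.spatial import distance as dist`. If SciPy itself is the obstacle, the eye aspect ratio only needs Euclidean distances, which the standard library provides. A sketch using `math.dist` (Python 3.8+), assuming the six eye landmarks are ordered p1 to p6 as in the post:

```python
import math


def eye_aspect_ratio(eye):
    # eye: six (x, y) landmarks ordered p1..p6 around the eye
    # vertical distances between the upper and lower eyelid landmarks
    a = math.dist(eye[1], eye[5])
    b = math.dist(eye[2], eye[4])
    # horizontal distance between the two eye corners
    c = math.dist(eye[0], eye[3])
    return (a + b) / (2.0 * c)
```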

Hi Adrian,

This project was terrific! I found it really inspiring…now I am installing dlib into my raspberry pi, however it is taking an eternity to end the last step…I did the process of swap memory and the other adjustments and it is still running. Thanks!

Congratulations!!

Hey Juan, it will take a while to compile and install. I would suggest letting it run overnight.

Hey Adrian,

Thanks for this! Not being the greatest coder, I was banging my head trying to figure out the logic to adapt your blink detection blog post to a sleep/drowsiness detector. The addition of the audible alarm makes this so much cooler!

Thanks John 🙂

Hey Adrian,

If I want to run the drowsiness detection on a Raspberry Pi, what changes should I make?

Also, please post your new blog about optimizing the Raspberry Pi for real-time facial landmark detection.

Thank you.

I have already published the optimized drowsiness detection + Raspberry Pi post. You can find it here.

Hi Adrian,

Will this code work for other videos of a drowsy driver (i.e., not real time)?

I tuned some of the parameters, including the EAR threshold and consecutive number of frames based on this video. It should translate reasonably well to other videos but you may have to tune the parameters for your application.
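The two parameters interact with the frame rate: the alarm fires after `consec_frames` consecutive low-EAR frames, which is roughly `consec_frames / FPS` seconds, so a video recorded at a different FPS changes the effective time before the alarm. A minimal sketch of the counter logic; the class name is hypothetical and the default values are assumed starting points to be tuned, not guaranteed to match the post's:

```python
class DrowsinessMonitor:
    """Counts consecutive low-EAR frames and flags drowsiness."""

    def __init__(self, ear_thresh=0.3, consec_frames=48):
        # Assumed starting values; tune both for your camera, FPS and driver
        self.ear_thresh = ear_thresh
        self.consec_frames = consec_frames
        self.counter = 0

    def update(self, ear):
        # Returns True once the eyes have stayed below the threshold for
        # at least `consec_frames` frames in a row; any open-eye frame
        # resets the count
        if ear < self.ear_thresh:
            self.counter += 1
        else:
            self.counter = 0
        return self.counter >= self.consec_frames
```

For example, 48 frames is about 1.6 seconds at 30 FPS but about 3.2 seconds at 15 FPS.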

Thanks. One more question: what is the index of the webcam? I am trying to interface it with my laptop’s webcam.

You’ll want to check this yourself on your own machine. Typically it’s “0” for the first webcam and “1” for the second. Again, you’ll need to check that on your machine.

What do the .xml and .dat files do? How can I use them, and how did you create them? Please explain clearly; I am new to this.

thank you.

Hey Vamshi — this blog post doesn’t have any XML files so I’m not sure what you are referring to?

hi..

When I download the code, three files are downloaded:

shape_predictor_68_face_landmarks.dat

haarcascade_frontalface_default.xml

pi_detect_drowsiness.py

I am confused. How do I use them?

Can I do the same drowsiness detection using neural networks?

The haarcascade_frontalface_default.xml file is the face detector model and shape_predictor_68_face_landmarks.dat is your facial landmark predictor. The pi_detect_drowsiness.py Python script loads the models and uses them to first detect a face and then localize the facial landmarks. To see how to execute the script, please refer to the blog post.

But sir, does this blog post’s code use a CNN? I guess not. I need to know how to implement the same thing with a CNN. Is it possible?

This method does not need a CNN. A CNN would be overkill.

Thanks for sharing your ideas. Your tutorials are amazing!!!

Really helps in understanding the concept.

Thank you Satish, I really appreciate that 🙂

Sorry, which version of Python do you use? I am trying to test it on Windows.

This code will work with both Python 2.7 and Python 3.

The code is not working for me.

Hey Kent — what specifically is not working? Is the code giving you an error? Keep in mind that myself and others can only help if you provide more detail and explain exactly what the problem is.

Can we make an .exe file for this application? If it can be made, could you give me some tips?

We typically do not create .exe applications from Python scripts. For a simple Python script it is technically possible, but since we use OpenCV, which depends on a number of libraries, it’s very complicated and I do not recommend it.

is there any method to deploy this application?

Not easily, but that isn’t my particular area of expertise. I would suggest putting together a VM or Docker image with OpenCV pre-installed along with your application and shipping the VM/Docker image directly.

If someone wears sunglasses, is there any solution?

Wow. This is amazing! I’m currently working on a school project that is focused on drowsy driving. This article is really informative, especially the details on facial landmarks and recognition.

Thanks Lee! And best of luck with the school project 🙂

This is giving an error on Windows:

usage: detect_drowsiness.py [-h] -p SHAPE_PREDICTOR [-a ALARM] [-w WEBCAM]

detect_drowsiness.py: error: the following arguments are required: -p/--shape-predictor

Hey Mukul — you need to execute the script from your terminal and supply the command line arguments. If you are new to command line arguments, that’s okay, but you need to read up on them first before you try to execute the script.

Thank you, sir, it worked.

One thing I want to ask: does shape_predictor_68_face_landmarks.dat come with the dlib library, or did you create it for this project?

I am also getting the same error. Has your error been solved? If so, please share the solution.

For Windows:

All you need to do is first go to the directory where you placed these files in the command prompt using the “cd” command;

then you need to run the following command:

python detect_drowsiness.py -p shape_predictor_68_face_landmarks.dat -a alarm.wav

awesome project

Thanks Mike, I’m glad you enjoyed it!

I want to know: did you create the facial landmark file for this project? Please provide a detailed description of it. Can we use it in other projects too?

I did not create the facial landmark predictor — that was created by Davis King of dlib. You should refer to his example on training custom shape landmark predictors.

What if I want to use the Pi camera instead of a webcam?

See this blog post.

I’m getting an error called “expected an indented block” on line 21. Please help.

Make sure you use the “Downloads” section of this blog post to download the source code and example video. Don’t try to copy and paste the code.

Hello Adrian I’m getting this error running the code

Traceback (most recent call last):

File "detect_drowsiness.py", line 6, in <module>

from scipy.spatial import distance as dist

ImportError: No module named 'scipy'

and when i want to install scipy with “pip install scipy” the terminal gives this message:

Requirement already satisfied: scipy in /usr/lib/python2.7/dist-packages

When I installed OpenCV on Ubuntu, I think I chose Python 3, and the test was OK (the Python 2.7 test result was not OK).

what should I do? How can I install scipy on python 3?

How did you install OpenCV on your system? Did you use a PyImageSearch tutorial? If so, you may have forgotten to install SciPy into the Python virtual environment:

Hi. Wonderful job! I already finished your book about OpenCV and am going to get your deep learning book.

I have a question/problem. I cannot find a shape predictor for 194 points, but there is the dataset you mentioned. How precise could it be if I train it?

And are there any shape predictors or datasets for profile faces?

Davis King, the creator of dlib, includes a number of models and scripts/programs that can be used to train shape predictors. I would suggest starting with the dlib models page.

Hello Adrian.

My webcam doesn’t work in Ubuntu running under VMware.

Can you fix it?

(my laptop is HP Probook 4540s – win 10)

Exactly how (or even if) it’s possible to enable your webcam through virtualization software such as VMware depends on your OS, system, and VM software version. I’m not sure what the process would be for your particular system, so you should spend some time researching it.

Hi… can you please tell me what setup is needed before running the code?

Make sure you follow my instructions on installing OpenCV for your particular operating system. From there you can follow this guide.

Sir,

I am implementing drowsiness detection on a Raspberry Pi, including yawning detection, following your blog. The program works fine, but it runs very slowly on the Raspberry Pi. Can you suggest methods to speed up the processing?

I actually wrote a Raspberry Pi optimized drowsiness detector. You can find it here.

Hi Adrian, I am new to computer vision and Python, but with your blog I have successfully applied this drowsiness detection on an AWS cloud server to a saved video file. I can view the result by logging in to AWS through SSH with the -X flag, but it takes a lot of time, so I am wondering how I can save the EAR value for each frame and the corresponding drowsiness alert message to a text file. Can you point out the code snippet I need to change for this? And will it help speed up detection and improve performance?

Congrats on running the script in AWS, that’s a big step. The reason why you are seeing such a lag is not due to the slowness of the processing, it’s due to the I/O latency of forwarding the video frames from the cloud to your machine. You could write some additional code to “log” the drowsiness events or you could consider writing the video back to disk with the results drawn on it.
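A minimal sketch of the logging idea using the standard `csv` module, writing one row per frame with the EAR and whether the alert is active. The function name and thresholds are assumptions; in the real script you would write each row inside the frame loop rather than collecting EAR values first:

```python
import csv


def log_ear_values(ears, out_file, ear_thresh=0.3, consec_frames=48):
    # Write one row per frame: frame index, EAR, and the alert message
    # if the drowsiness alert is active on that frame
    writer = csv.writer(out_file)
    writer.writerow(["frame", "ear", "alert"])
    counter = 0
    for i, ear in enumerate(ears):
        # Same consecutive-frame rule as the alarm: reset on open eyes
        counter = counter + 1 if ear < ear_thresh else 0
        alert = counter >= consec_frames
        writer.writerow([i, f"{ear:.4f}", "DROWSINESS ALERT!" if alert else ""])
```

Usage: `with open("ear_log.csv", "w", newline="") as f: log_ear_values(ears, f)`. Skipping `cv2.imshow` entirely when running headless on AWS also avoids the X-forwarding latency described above.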

Hi, your work is amazing as usual. I want to detect eye pupils in video using dlib, but I do not have an idea how to do it. Can you give me some advice, please?

I don’t have any tutorials on pupil detection or tracking but I know some PyImageSearch readers have tried this method.

Thank you so much

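I use pygame instead of playsound. The import replaces playsound at line 7, and the function body replaces playsound.playsound(path) at line 16:

```python
import time

import pygame  # instead of playsound at line 7


def sound_alarm(path):
    # instead of playsound.playsound(path) at line 16
    pygame.init()
    pygame.mixer.music.load(path)
    pygame.mixer.music.play()
    # music.play() returns immediately, so wait before stopping playback
    time.sleep(2)
    pygame.mixer.music.stop()
```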

I learned a lot from this.

Thanks Adrian

Thanks for sharing!

You are amazing!

Hi Adrian

Is there any solution to this problem?

I am using Python.

I got this error:

predictor = dlib.shape_predictor(args["shape_predictor"])

RuntimeError: Unable to open shape_predictor_68_face_landmarks.dat

Make sure you use the “Downloads” section of this blog post to download the source code + .dat file used for detecting facial landmarks. Based on your error you are not supplying the correct path to your .dat file.

Hi Adrian.

I’m using Python, and I’m with this problem.

usage: detect_drowsiness.py [-h] -p SHAPE_PREDICTOR [-a ALARM]

detect_drowsiness.py: error: the following arguments are required: -p/--shape_predictor

Do you have any solution?

Make sure you read this tutorial on command line arguments.

Hello Adrian. I am using a Raspberry Pi Zero and a Pi camera, and it shows the error “object has no attribute 'shape'”.

please help

It sounds like OpenCV cannot access your webcam. You can read more about the error and how to solve it here.

Can we similarly use mouth aspect ratio to detect whether mouth is open for yawn detection?

Absolutely! Give it a try 🙂
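A sketch of one possible mouth aspect ratio, by analogy with the EAR: average the three vertical inner-lip distances and divide by the horizontal mouth width. The definition and the function name are assumptions rather than a standard formula; the input is the eight inner-mouth landmarks (indices 60 to 67 of the 68-point model, i.e. `shape[60:68]`), and `math.dist` needs Python 3.8+:

```python
import math


def mouth_aspect_ratio(mouth):
    # mouth: the eight inner-lip landmarks (points 60-67 of the 68-point
    # model), starting at one corner: 61-63 upper lip, 65-67 lower lip
    a = math.dist(mouth[1], mouth[7])
    b = math.dist(mouth[2], mouth[6])
    c = math.dist(mouth[3], mouth[5])
    # horizontal distance between the inner mouth corners (60 and 64)
    d = math.dist(mouth[0], mouth[4])
    return (a + b + c) / (3.0 * d)
```

A yawn would then be a MAR above some tuned threshold for several consecutive frames, using the same counter pattern as the drowsiness alarm.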

How can I detect a person’s smile using facial landmarks, considering that different people have different lip sizes and smile types?

There are a few ways to approach this problem. The first is to not use facial landmarks at all and train a custom model to perform smile detection. In fact, this is the exact approach I took when writing a chapter for smile detection inside Deep Learning for Computer Vision with Python.

Secondly, even though lip sizes may vary from person to person you should be able to compute the aspect ratio of the lips, similar to what we’ve done in this post. Give it a try!

Hi Adrian

I’m new to this, and after reading your post and the paper, I found that you didn’t use the SVM or the Markov model they propose to classify drowsiness, but instead just used a number of consecutive frames.

Can you tell me why?

Refer to my previous post on blink detection for the reasoning (it was just too advanced for an introductory post).

Traceback (most recent call last):

…

ImportError: No module named scipy.spatial

scipy.spatial isn’t automatically imported with scipy. What do I do?

You need to install the SciPy library:

$ pip install scipy

Hi Adrian,

Thanks for the awesome tutorial. I just want to ask something, is there a way we can detect whether the person is in camera frame or not ? Suppose the person fell asleep and his face is not in camera frame, in that case how can we detect that and (maybe) we can trigger another alarm which is for longer duration so that the person wakes up ?

If the person’s face is no longer in field of view of the camera then you cannot apply this technique. You may want to consider a more advanced algorithm that includes some sort of temporal information of the face, such as head bobbing, etc. that indicates sleep. I would do some research on “activity recognition”.

Just one more doubt. You are using detector and predictor in loops to find the face landmarks( and coordinates ) and convert it into a numpy array. Suppose the person’s face is not in camera, in that case what would be the output of detector and predictor and what values will be stored in numpy array.

If the person’s face is not in the view of the camera then the face will not be detected and the facial landmarks will not be computed.

Can we know whether the detector has detected a face or not? I mean, is there any syntax for that, so that we can count for how many frames a face is detected and vice versa?

If the detector reports a face location then by definition the detector has detected a face; that’s how face detectors and object detectors in general work. If you’re trying to count the number of frames a face appears in, you may want to consider performing some super basic face recognition. A great first step would be computing the embeddings for each face via this tutorial and then doing some face clustering as well.
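dlib's frontal face detector returns a (possibly empty) list of rectangles, so `len(rects) == 0` is the check for “no face in this frame”. A minimal sketch of counting consecutive face-less frames to trigger a second, longer alarm; the class name and the 60-frame limit are hypothetical:

```python
class FaceAbsenceMonitor:
    """Flags when no face has been detected for too many frames in a row."""

    def __init__(self, max_missing=60):
        # Assumed limit: about 2 seconds at 30 FPS; tune for your setup
        self.max_missing = max_missing
        self.missing = 0

    def update(self, num_faces):
        # num_faces would be len(rects), where rects = detector(gray, 0)
        if num_faces == 0:
            self.missing += 1
        else:
            self.missing = 0
        return self.missing >= self.max_missing
```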

Can you please provide a video tutorial of the project?

I tend to prefer writing over creating video. I don’t have any plans to do a video tutorial other than the actual video demos that I publish.

Can I make it using Android Studio?

You would need to convert the code from Python to Java + OpenCV, but yes, the general algorithm will work.

Hi,

Great tutorial.

I used this code on a webcam video. What I see is that the EAR stays at almost the same values (as in the case of open eyes) even when the eyes are closed. This probably happens because I am moving my head a little in other directions or back and forth. I believe your code is based on the assumption that the face is always still.

Can you please help me on this?

How do I detect facial landmarks using C++?

You should refer to the dlib docs for a C++ example.

Hi Adrian, can you please help me solve the error below?

File "detect_drowsiness.py", line 68, in <module>

(lStart, lEnd) = face_utils.FACIAL_LANDMARKS_IDXS["left_eye"]

AttributeError: module 'imutils.face_utils' has no attribute 'FACIAL_LANDMARKS_IDXS'

This was caused by the latest release of imutils, v0.5. I’ll be fixing it within the next few days with a release of imutils v0.5.1, but in the meantime just change “FACIAL_LANDMARKS_IDXS” to “FACIAL_LANDMARKS_68_IDXS” and it will work.
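A sketch that keeps working on either side of the rename, assuming the standard 68-point index ranges (end index exclusive) as a fallback when imutils is unavailable. The variable names `FALLBACK_68_IDXS` and `LANDMARK_IDXS` are hypothetical:

```python
# Fallback copy of the 68-point index ranges (start, end), end exclusive,
# used only if imutils cannot be imported or lacks both names
FALLBACK_68_IDXS = {
    "mouth": (48, 68),
    "inner_mouth": (60, 68),
    "right_eyebrow": (17, 22),
    "left_eyebrow": (22, 27),
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "nose": (27, 36),
    "jaw": (0, 17),
}

try:
    from imutils import face_utils
    # v0.5 exposes only the _68_ name; older releases only the plain one
    LANDMARK_IDXS = (getattr(face_utils, "FACIAL_LANDMARKS_68_IDXS", None)
                     or face_utils.FACIAL_LANDMARKS_IDXS)
except (ImportError, AttributeError):
    LANDMARK_IDXS = FALLBACK_68_IDXS

(lStart, lEnd) = LANDMARK_IDXS["left_eye"]
(rStart, rEnd) = LANDMARK_IDXS["right_eye"]
```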

Hello Adrian, I am running this on a Jetson TX1 at 9 FPS. However, I am wondering how I can run this with GPU support, or does it do so automatically? If so, can you point out the code that accesses the GPU or underlying hardware, as I cannot see any CUDA support or GPU programming code? If not, how can I add GPU support? Waiting for your response.

In short, there really isn’t an easy way to add GPU support (yet). You can re-code in C++ and use the OpenCV + GPU bindings, but not yet for strict Python. Python + OpenCV + CUDA support is coming soon though!

I just wanted to make some changes to the code. What I want is: if the driver has been sleepy for 1 minute, it shows “sleepy for 1 min”; if sleepy for 40 seconds, it shows “sleepy for 40 sec”; and if sleepy for more than 1:30 minutes, it shows “sleepy for 1:30 mins”. These are the changes our teacher wants, but I am not able to do it. Can you please tell me how I should do this, where I need to change the code, and what the code will be?

Thank you.

Hey Janhavi, if your teacher wants you to build a project that can detect drowsiness/sleepiness then I suspect your teacher is looking for you to gain a particular skill by doing it. As someone who spent many years in school, and knows the value of an education, I’m not going to give you the answer.

But I will give you a hint:

You should consider using the time library to grab a timestamp when the drowsiness detector starts and ends…

Adrian, is it possible to use this code with an optimized OpenCV?

I’m not sure what you mean by “an optimize OpenCV”? Could you clarify?

Hi, Fantastic article, even for starters. Question. would 720p webcam be sufficient for achieving this? or minimum required is 1080p? How would it make any difference? Thanks.

I would suggest experimenting with different resolution webcams. Exactly which one is ideal for your project really depends on your lighting conditions. Experiment and you will find your answer 🙂

Hi Adrian, your tutorial is awesome. I have a question: you are resizing the frame with imutils to a width of 450. I’m trying to implement this code on a Raspberry Pi, and if I change the width to something like 280 it runs really smoothly; however, I need to stay close to the camera for the algorithm to work, because imutils maintains the aspect ratio and the face becomes smaller. Is there a way to resize the frame without maintaining the aspect ratio, or to zoom/crop the video? Thank you, sir, God bless.

The less data there is to process, the faster your algorithm will run. Furthermore, resizing an image can be seen as a form of “noise reduction”. If you know there is a particular area of the frame you want to monitor you could:

1. Manually extract it via NumPy array slicing

2. Explicitly set the desired dimensions of the frame via your “picamera” library (assuming you’re using the Pi).
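As a rough illustration of option 1, the sketch below crops a fixed region of interest out of a frame with NumPy slicing, so the face occupies a larger share of the pixels you process without shrinking it. The `crop_roi` helper and the box coordinates are hypothetical; in practice you would pick a box that matches where the driver’s face sits in your camera’s view.

```python
import numpy as np


def crop_roi(frame: np.ndarray, x: int, y: int, w: int, h: int) -> np.ndarray:
    """Return the (x, y, w, h) region of a frame via NumPy slicing.

    Hypothetical helper: NumPy images are indexed [row, column], i.e.
    [y, x], so the vertical range comes first. The box is clipped to the
    frame bounds so an oversized box cannot index past the edges.
    """
    frame_h, frame_w = frame.shape[:2]
    x0, y0 = max(0, x), max(0, y)
    x1, y1 = min(frame_w, x + w), min(frame_h, y + h)
    # Slicing returns a view, not a copy; call .copy() before modifying it.
    return frame[y0:y1, x0:x1]


# Example: a dummy 480x640 BGR frame, keeping a 240x320 box in the middle.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
roi = crop_roi(frame, x=160, y=120, w=320, h=240)
print(roi.shape)  # (240, 320, 3)
```

The cropped `roi` would then be handed to the face detector in place of the full frame. If you really do want to ignore the aspect ratio instead, OpenCV’s `cv2.resize(frame, (width, height))` resizes to exactly the given dimensions, at the cost of stretching the face.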

Hi Adrian. I already installed playsound, but somehow I got an error saying import gi not found. How can I fix this? TIA

Can I reach you through your email?

Thanks

You can use the contact form on the PyImageSearch site.

It won’t work, can you please help?

usage: detect_blinks.py [-h] -p SHAPE_PREDICTOR [-v VIDEO]

detect_blinks.py: error: the following arguments are required: -p/--shape-predictor

If you’re new to command line arguments that’s okay, but make sure you read up on them before continuing. From there your error will be resolved 🙂
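For reference, here is a minimal argparse sketch that mirrors the `-p`/`--shape-predictor`, `-a`/`--alarm`, and `-w`/`--webcam` options shown in the usage messages in these comments. The defaults, help text, and script name are assumptions, so check them against the script from the “Downloads” section. It shows three things: `--shape-predictor` is required, argparse stores it under the `shape_predictor` key, and leaving it out produces the usage error above and exits.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Option names mirror the usage messages quoted in these comments;
    # the defaults and help strings are assumptions for this sketch.
    ap = argparse.ArgumentParser(description="drowsiness detector (sketch)")
    ap.add_argument("-p", "--shape-predictor", required=True,
                    help="path to dlib's facial landmark predictor")
    ap.add_argument("-a", "--alarm", type=str, default="",
                    help="path to an alarm .wav file")
    ap.add_argument("-w", "--webcam", type=int, default=0,
                    help="index of the webcam on the system")
    return ap


parser = build_parser()

# From a terminal you would run something like (script name assumed):
#   python detect_drowsiness.py --shape-predictor shape_predictor_68_face_landmarks.dat --webcam 1
# Passing an explicit list does the same thing without a terminal, which is
# handy inside Jupyter, where sys.argv belongs to the notebook kernel.
args = vars(parser.parse_args(
    ["--shape-predictor", "shape_predictor_68_face_landmarks.dat",
     "--webcam", "1"]))
print(args["shape_predictor"], args["webcam"])

# Omitting the required -p option prints the usage error and raises SystemExit.
try:
    parser.parse_args([])
except SystemExit as exc:
    print("argparse exited with status", exc.code)
```

Note that the dash in `--shape-predictor` becomes an underscore in the key, which is why the script reads `args["shape_predictor"]`.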

…

AttributeError: ‘NoneType’ object has no attribute ‘shape’

Hey Adrian, please tell me what the problem is here.

Your path to either the input image or input video is incorrect, depending on which version of the script you are using, which is causing your image to be “None”. You can learn more about NoneType errors, including how to resolve them, inside this post.
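One way to surface a bad path earlier is to check the path and the loaded frame explicitly rather than letting `.shape` fail later. The sketch below uses a hypothetical `load_checked` helper that accepts any loader function, for example `cv2.imread`, which returns `None` instead of raising when it cannot read a file. The stand-in loader is only there so the snippet runs without OpenCV installed.

```python
import os
from typing import Any, Callable


def load_checked(path: str, loader: Callable[[str], Any]) -> Any:
    """Hypothetical helper: fail loudly with a clear message when the
    input path is wrong, instead of a NoneType error further down."""
    if not os.path.isfile(path):
        raise FileNotFoundError(f"input file not found: {path!r}")
    image = loader(path)
    if image is None:
        # cv2.imread, for instance, returns None for unreadable files.
        raise ValueError(f"could not decode {path!r} as an image")
    return image


def fake_loader(path: str) -> Any:
    # Stand-in for cv2.imread that always "fails" to decode.
    return None


try:
    load_checked("does/not/exist.jpg", fake_loader)
except FileNotFoundError as err:
    print(err)
```

For video input, the same idea applies inside the frame loop: check whether the frame you just read is `None` and stop cleanly if it is.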

Hi, I just want to know: if I want to use this project without carrying a laptop with me, what can I do?

You could use other hardware, such as a Raspberry Pi.

Hi Adrian,

Thanks a lot for these valuable blogs.

I ran the above code but I get the following error:

ModuleNotFoundError: No module named ‘gi’

I am using Ubuntu 18.04.

Hey Adrian,

What changes should I make if I’m going to use my laptop webcam?

Thank you.

There are no changes required if you want to run it on your laptop webcam.

Hey…

What needs to be passed to the “-p” argument? A “-p argument required” error is occurring.

You’re not passing in the command line argument. Make sure you read-up on command line arguments before continuing.

Sir, do you have code that works without command line arguments?

I believe in you, Kritika. Read the argparse tutorial I linked you to and you will be able to resolve the problem 🙂

Hi Sir,

Firstly, thanks for the program. There is a problem: my program does not play the .wav alarm file. Can you tell me the possible reason?

It’s hard to say what the exact problem is there. Can you play the .wav file from your command line?

Sir, how can I keep the alarm on while the driver is drowsy? The alarm only plays once.

That is less a computer vision question than a general programming/engineering question. There are a few ways to approach the problem, but I would suggest updating Lines 125-128 to start a thread that loops infinitely, playing the sound. Then have a global variable that the thread checks to see if the driver has woken up.
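Here is a minimal sketch of that suggestion. It uses a `threading.Event` as the shared flag instead of a bare global, since an Event is designed for signalling between threads. `play_sound` is a hypothetical stand-in for whatever actually plays the .wav file (for example the playsound call used in the tutorial); the background thread keeps replaying it until the main loop stops the alarm.

```python
import threading
import time


def play_sound(path: str) -> None:
    # Hypothetical stand-in: replace with your real playback call,
    # e.g. playsound(path). Here it just simulates a short clip.
    time.sleep(0.05)


class Alarm:
    """Loops an alarm clip in a background thread until stop() is called."""

    def __init__(self, path: str) -> None:
        self.path = path
        self.stop_event = threading.Event()
        self.thread = None
        self.plays = 0  # how many times the clip has been played

    def _loop(self) -> None:
        # Keep replaying the clip until the main loop asks us to stop.
        while not self.stop_event.is_set():
            play_sound(self.path)
            self.plays += 1

    def start(self) -> None:
        # Only start a new thread if one is not already running.
        if self.thread is None or not self.thread.is_alive():
            self.stop_event.clear()
            self.thread = threading.Thread(target=self._loop, daemon=True)
            self.thread.start()

    def stop(self) -> None:
        self.stop_event.set()
        if self.thread is not None:
            self.thread.join()  # waits for the current clip to finish


# In the video loop: call alarm.start() once the eyes have been closed for
# enough frames, and alarm.stop() as soon as they are detected as open again.
alarm = Alarm("alarm.wav")
alarm.start()
time.sleep(0.2)  # pretend the driver stays drowsy for a moment
alarm.stop()
print(alarm.thread.is_alive())  # False
```

Because `stop()` lets the current clip finish before the thread exits, a short alarm clip keeps the response to the driver waking up fast.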

I am very grateful to you for the tutorial on detecting drowsiness from the opening of the eye, but how does the detector respond if a user wears sunglasses?

Could you also add detection of the wrinkles that appear when yawning? Please do a tutorial on it to make this article even more wonderful.

If the user is wearing sunglasses you will be unable to correctly detect and localize the eye. You can use the same algorithm for yawning: just monitor the aspect ratio of the mouth landmarks. I’ll be discussing the yawn detector in my upcoming Computer Vision and Raspberry Pi book.
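To make the mouth idea concrete, here is a sketch of a mouth aspect ratio computed in the same spirit as the eye aspect ratio: the average vertical lip opening divided by the mouth width. Using the eight inner-lip points (indices 60–67 of dlib’s 68-point model) and this exact formula are assumptions of the sketch, not the method from the book; a yawn would show up as the ratio staying above a threshold you tune yourself.

```python
import numpy as np


def mouth_aspect_ratio(inner_mouth: np.ndarray) -> float:
    """Mouth aspect ratio from the 8 inner-lip landmarks (assumed formula).

    `inner_mouth` is an (8, 2) array holding points 60-67 of the 68-point
    model, in order: 0 = left corner, 1-3 = upper inner lip (left to right),
    4 = right corner, 5-7 = lower inner lip (right to left).
    """
    p = np.asarray(inner_mouth, dtype=float)
    # Vertical openings between matching upper/lower inner-lip points.
    a = np.linalg.norm(p[1] - p[7])
    b = np.linalg.norm(p[2] - p[6])
    c = np.linalg.norm(p[3] - p[5])
    # Horizontal width between the two mouth corners.
    d = np.linalg.norm(p[0] - p[4])
    return float((a + b + c) / (3.0 * d))


# Synthetic mouth 40 px wide and 20 px open (made-up coordinates).
open_mouth = np.array([[0, 10], [10, 0], [20, 0], [30, 0],
                       [40, 10], [30, 20], [20, 20], [10, 20]])
print(mouth_aspect_ratio(open_mouth))  # 0.5
```

With the landmarks already converted to a NumPy array (the tutorial does this via `face_utils.shape_to_np`), the inner-lip points would be `shape[60:68]`.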

I was able to detect yawning but I could not find a link between the number of yawning and falling asleep.

If people wear sunglasses and yawn, can they still drive??

The program is executing successfully, but the problem is how to close the window. It does not stop even if I click on the close button.

Click on the window opened by OpenCV and press the “q” key on your keyboard.

Can I have documentation for this project?

The blog post/tutorial itself is the documentation.

What are the hardware requirements for the driver drowsiness alert if I want to install it in my car?

Can you list them?

I used a standard laptop for this project. I wrote a separate tutorial that uses a Raspberry Pi as well. Most modern machines can run this code.

First of all, I would like to thank you a lot for sharing your knowledge.

I’ve been following your posts for a while and I gain a lot of skills and comprehension as well.

About the alarm:

Once it starts, it won’t stop for some reason, even though I have my eyes open.

I reset the ALARM_ON flag to False exactly as you showed us, and yet the alarm keeps on buzzing.

That is certainly strange behavior. Are you using the code used from the “Downloads” section of this tutorial? Or did you copy and paste it? Make sure you’re using the code from the “Downloads” section to ensure there are no copy and paste errors.

As always nice work!

I didn’t see anyone else have this problem, but I’m on the Ubuntu GURUs VM and I installed playsound. The code would run great, but no sound came out. Sound is fine in the VM, as I can play the wave file.

When I tried calling the wave file from the Python CLP I would get ‘missing GI library’ error.

I ended up running this

pip install vext

pip install vext.gi

to solve the problem and it all works fine now.

In case that helps anyone.

Thanks so much for sharing, Paul!

I’m trying with the Pi camera module, but it does not run:

vs = VideoStream(usePiCamera=True).start()

IndentationError: unexpected indent

Please tell me why.

Thank you

Make sure you use the “Downloads” section of the tutorial to download the source code (don’t copy and paste). During your copy and paste you introduced an indentation error.

error: the following arguments are required: -p/--shape-predictor

I got an error!

You need to provide the command line arguments to the script.

Thanks for sharing! By any chance, is it possible to make an Android app using this code? I’d like to try running this code on Android. Could you give me any advice?

OpenCV does provide Android/Java bindings. I would suggest researching them (I have never personally used them myself).

I am trying to use yawn detection in a situation where the driver is wearing sunglasses, so the eye region won’t be extracted. Under what condition should I switch to the yawn detection script? I tried using:

if leftEye is None: and if leftEyeHull is None:

Neither of them works.

You’ll want to use this tutorial to extract the mouth region via facial landmarks.

Thanks for the great post, Adrian. I was wondering: is it possible to find the head angle w.r.t. the camera with this approach? If I want to find the head angle and body angle, is it possible?

I don’t have any tutorials on head/body pose estimation but I will certainly consider it for the future. Thank you for the suggestion!

Can anyone suggest how to make the Logitech C920 compatible with this program?

Whenever I run this program, it detects my built-in MacBook camera, not the Logitech C920.

Thanks!

You now have two webcams on your system: the built-in one and the C920. To use the C920, just add the --webcam 1 switch when executing the script.

Do you mean add “--webcam 1” into the script, or add it to the command I execute?

Thanks!

It sounds like you may be new to command line arguments and how they work. That’s okay, but you need to read this post first. From there you’ll be able to understand command line arguments.

Hey,

Can you tell me which dataset you have used in this program?

Kindly share its link also.

There was no dataset used for this project, it’s entirely heuristic-based. You can use the “Downloads” section of this tutorial to download the source code if you would like.

Everything is given, but I doubt it’s practical to take a laptop (or a Raspberry Pi 3) in a car. Is there any other hardware that will support this code, like a smartphone or some other lightweight implementation?

Can you tell me which algorithm you used in this drowsiness detection program?

See this tutorial.
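In short, the detector is heuristic and relies on the eye aspect ratio (EAR). For the six landmarks p1…p6 around each eye, EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖), and drowsiness is flagged when the EAR stays below a threshold for enough consecutive frames. Below is a sketch of both pieces; the threshold and frame-count defaults are placeholders to tune, and the class name is hypothetical.

```python
import numpy as np


def eye_aspect_ratio(eye: np.ndarray) -> float:
    """EAR for six eye landmarks ordered p1..p6 (indices 0..5):
    p1/p4 are the eye corners, p2/p3 the upper lid, p6/p5 the lower lid."""
    p = np.asarray(eye, dtype=float)
    a = np.linalg.norm(p[1] - p[5])  # ||p2 - p6||
    b = np.linalg.norm(p[2] - p[4])  # ||p3 - p5||
    c = np.linalg.norm(p[0] - p[3])  # ||p1 - p4||
    return float((a + b) / (2.0 * c))


class DrowsinessCounter:
    """Flags drowsiness once the EAR stays below `thresh` for
    `consec_frames` frames in a row (placeholder defaults)."""

    def __init__(self, thresh: float = 0.3, consec_frames: int = 48) -> None:
        self.thresh = thresh
        self.consec_frames = consec_frames
        self.counter = 0

    def update(self, ear: float) -> bool:
        if ear < self.thresh:
            self.counter += 1
        else:
            self.counter = 0  # eyes open again: reset the run
        return self.counter >= self.consec_frames


# An open eye (made-up coordinates): width 30, lid opening 10, so EAR = 1/3.
open_eye = np.array([[0, 5], [10, 0], [20, 0], [30, 5], [20, 10], [10, 10]])
print(round(eye_aspect_ratio(open_eye), 3))  # 0.333
```

Counting consecutive frames rather than reacting to a single low reading keeps ordinary blinks from triggering the alarm.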

nice one!!!!

I would like to detect faces from a distance away. Is it possible?

I’m not sure what you mean by “at a distance away” — are you referring to “long range” face detection of some sort?

Hi sir, what if I want to add a buzzer rather than an alarm by using GPIO? What should I put in the main source code? Thank you so much.

You would want to refer to the documentation of whatever buzzer you are using. That’s unfortunately impossible to answer without knowing which buzzer you are using.

Hello there, I am getting an error when I run the code:

ImportError: No module named imutils.video

Help needed fast.

Thank you

You first need to install the “imutils” library:

$ pip install imutils

Hi, I am very grateful for your tutorials. They’re so great.

I want to stream this sleepy detection video onto a website but don’t know how. Do you have any tutorials like that?

I will actually be covering that exact topic in my upcoming Computer Vision + Raspberry Pi book. Stay tuned!

I tried to use the command line argument --alarm alarm.wav, but it doesn’t play when closed eyes are detected. I connected the speaker using the headphone jack, and the sound plays fine when I play the file on its own at the command prompt.

Hello! Very helpful tutorials. I have a case where the detector sometimes detects two faces, which makes it work incorrectly.

How do I handle this case?
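One simple way to handle this, offered as an assumption rather than code from the tutorial, is to keep only the largest detected face, on the reasoning that the driver is usually the face closest to the camera. The hypothetical `largest_face` helper works on plain `(left, top, right, bottom)` tuples; with dlib you would build them from each rectangle’s `left()`, `top()`, `right()`, and `bottom()` methods.

```python
from typing import Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom)


def largest_face(boxes: Sequence[Box]) -> Optional[Box]:
    """Return the box with the largest area, or None if no faces were found."""
    if not boxes:
        return None
    return max(boxes, key=lambda b: (b[2] - b[0]) * (b[3] - b[1]))


# Two detections: the driver up close and a passenger further back.
faces = [(100, 80, 260, 240), (400, 120, 460, 180)]
print(largest_face(faces))  # (100, 80, 260, 240)
```

Only the selected face would then go through landmark prediction and the eye aspect ratio check, so a second face in the frame no longer affects the alarm.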

Sir, may I know how to make the alarm keep looping until an open eye is detected? The alarm is short and it only plays once, even though our eyes are still closed.

You would monitor the eye aspect ratio. Once the eye has been detected as “open” you can turn off the alarm. You will need to update the source code to do this yourself. Give it a try!

Great, brother, thanks! I’ll give it a try.

I have downloaded the ‘shape_predictor_68_face_landmarks.dat’ file, but I am still getting this error:

“usage: ipykernel_launcher.py [-h] -p SHAPE_PREDICTOR [-a ALARM] [-w WEBCAM]

ipykernel_launcher.py: error: argument -p/--shape-predictor is required”

Can you please help me out!

You need to supply the command line argument to the script.

Hey,

I am getting an error, “name ‘args’ is not defined”, on this line: predictor = dlib.shape_predictor(args["shape_predictor"])

What can I do?

Make sure you are using the “Downloads” section of this blog post to download the source code — it sounds like you’re copying and pasting and have introduced an error into the code.

I want to add a mouth opening ratio (MOR) algorithm to increase the efficiency of drowsiness detection. Can you help me with that?

I’ll be covering how to use a mouth opening ratio, including yawn detection, inside my upcoming Computer Vision + Raspberry Pi book. Stay tuned!

Hi Adrian Rosebrock.

Thanks for the great tutorial.

I have a question:

You used a pre-trained facial landmark detector to get the facial landmarks. How do I create that facial landmark detector manually?

Thank you

Make sure you refer to the dlib library and associated documentation. There is an example script that dlib provides for training custom shape prediction models as well.

Thanks, I will check that.

Thank you for your code. Could you please help me do the same with a CNN? This is my college project and I have no idea how to do it with a CNN. My project guide wants me to get it done with a CNN. I have seen the same question posted earlier and the reply. I hope you will help me. Thanks

You would need to gather a dataset of “normal” vs. “tired/drowsy” drivers. From there you could attempt to train a CNN on the faces. If you need help getting up to speed quick with deep learning and training your own CNNs, refer to my book, Deep Learning for Computer Vision with Python.

Thank you for the tutorial. But when I tried this program at night, my webcam could not get any images, just black frames. Can you give me an idea of how to handle this issue?

Have you tried using an infrared camera so the camera can see in the dark? A standard webcam would not work well at night.

I’ve tried searching, and I think CCTV cameras have infrared, but when I use cv2.VideoCapture it doesn’t work, and after searching more I found that CCTV uses an analog signal.

Can you be more specific? By “open” do you mean “execute” the script?

ModuleNotFoundError: No module named ‘scipy’

How do I solve this error? Please help.

You need to install the SciPy library:

$ pip install scipy

Hi Adrian,