Last updated on Mar 15th, 2023.

Today’s tutorial kicks off a new series of blog posts on object tracking, arguably one of the most requested topics here on PyImageSearch.

Object tracking is the process of:

- Taking an initial set of object detections (such as an input set of bounding box coordinates)

- Creating a unique ID for each of the initial detections

- And then tracking each of the objects as they move around frames in a video, maintaining the assignment of unique IDs

Furthermore, object tracking allows us to apply a unique ID to each tracked object, making it possible for us to count unique objects in a video. Object tracking is paramount to building a person counter (which we’ll do later in this series).

An ideal object tracking algorithm will:

- Only require the object detection phase once (i.e., when the object is initially detected)

- Be extremely fast, much faster than running the actual object detector itself

- Be able to handle when the tracked object “disappears” or moves outside the boundaries of the video frame

- Be robust to occlusion

- Be able to pick up objects it has “lost” in between frames

This is a tall order for any computer vision or image processing algorithm, and there are a variety of tricks we can play to help improve our object trackers.

But before we can build such a robust method we first need to study the fundamentals of object tracking.

In today’s blog post, you will learn how to implement centroid tracking with OpenCV, an easy-to-understand yet highly effective tracking algorithm.

In future posts in this object tracking series, I’ll start going into more advanced kernel-based and correlation-based tracking algorithms.

To learn how to get started building your first object tracker with OpenCV, just keep reading!

- Update July 2021: Added section on alternative object trackers, including object trackers built directly into the OpenCV library.

Simple object tracking with OpenCV

In the remainder of this post, we’ll be implementing a simple object tracking algorithm using the OpenCV library.

This object tracking algorithm is called centroid tracking as it relies on the Euclidean distance between (1) existing object centroids (i.e., objects the centroid tracker has already seen before) and (2) new object centroids between subsequent frames in a video.

We’ll review the centroid algorithm in more depth in the following section. From there we’ll implement a Python class to contain our centroid tracking algorithm and then create a Python script to actually run the object tracker and apply it to input videos.

Finally, we’ll run our object tracker and examine the results, noting both the positives and the drawbacks of the algorithm.

The centroid tracking algorithm

The centroid tracking algorithm is a multi-step process. We will review each of the tracking steps in this section.

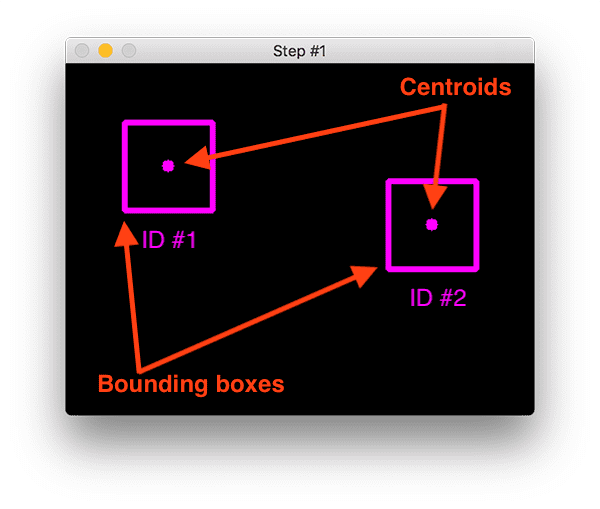

Step #1: Accept bounding box coordinates and compute centroids

The centroid tracking algorithm assumes that we are passing in a set of bounding box (x, y)-coordinates for each detected object in every single frame.

These bounding boxes can be produced by any type of object detector you would like (color thresholding + contour extraction, Haar cascades, HOG + Linear SVM, SSDs, Faster R-CNNs, etc.), provided that they are computed for every frame in the video.

Once we have the bounding box coordinates we must compute the “centroid”, or more simply, the center (x, y)-coordinates of the bounding box. Figure 1 above demonstrates accepting a set of bounding box coordinates and computing the centroid.

Since this is the first set of bounding boxes presented to our algorithm, we will assign each of them a unique ID.
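As a minimal sketch of Step #1, assuming each box arrives as an (startX, startY, endX, endY) tuple (the same format the update method expects later in this post), the centroid is just the midpoint of the box's two corners. The helper name compute_centroids is hypothetical and not part of the downloadable code:

```python
import numpy as np

def compute_centroids(rects):
    """Return an (N, 2) integer array of box centers (hypothetical helper)."""
    # allocate up front so an empty list of boxes yields a (0, 2) array
    centroids = np.zeros((len(rects), 2), dtype="int")
    for i, (startX, startY, endX, endY) in enumerate(rects):
        # midpoint of the top-left and bottom-right corners
        cX = int((startX + endX) / 2.0)
        cY = int((startY + endY) / 2.0)
        centroids[i] = (cX, cY)
    return centroids

boxes = [(10, 20, 50, 60), (100, 40, 140, 120)]
centroids = compute_centroids(boxes)
# centroids.tolist() == [[30, 40], [120, 80]]
```

On the very first frame, each of these rows would simply be handed a fresh ID.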

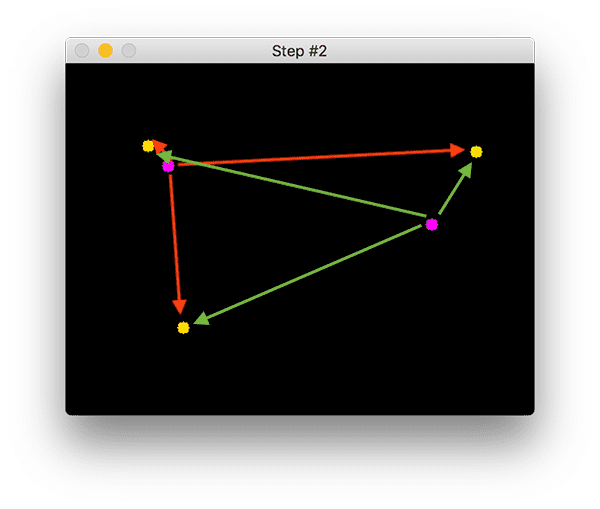

Step #2: Compute Euclidean distance between new bounding boxes and existing objects

For every subsequent frame in our video stream we apply Step #1 of computing object centroids; however, instead of assigning a new unique ID to each detected object (which would defeat the purpose of object tracking), we first need to determine if we can associate the new object centroids (yellow) with the old object centroids (purple). To accomplish this process, we compute the Euclidean distance (highlighted with green arrows) between each pair of existing object centroids and input object centroids.

From Figure 2 you can see that this time we have detected three objects in our image. The two pairs of points that lie close together correspond to the two existing objects and their candidate new positions.

We then compute the Euclidean distances between each pair of existing centroids and new centroids. But how do we use the Euclidean distances between these points to actually match and associate them?

The answer is in Step #3.
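Before moving on, note that Step #2 itself boils down to building a distance matrix with one row per existing object and one column per new detection. The sketch below uses plain NumPy broadcasting, which for the default Euclidean metric should produce the same matrix as the dist.cdist call used later in the implementation. The coordinates and the helper name pairwise_distances are made up for illustration:

```python
import numpy as np

def pairwise_distances(existing, new):
    """Euclidean distance between every existing centroid and every new one."""
    existing = np.asarray(existing, dtype="float")
    new = np.asarray(new, dtype="float")
    # (M, 1, 2) minus (1, N, 2) broadcasts to an (M, N, 2) array of differences;
    # the L2 norm over the last axis gives an (M, N) distance matrix
    diff = existing[:, np.newaxis, :] - new[np.newaxis, :, :]
    return np.linalg.norm(diff, axis=2)

existing = [(30, 40), (120, 80)]          # two objects tracked so far
new = [(33, 44), (200, 10), (118, 83)]    # three detections in the new frame
D = pairwise_distances(existing, new)
# D has shape (2, 3): rows are existing objects, columns are new detections
```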

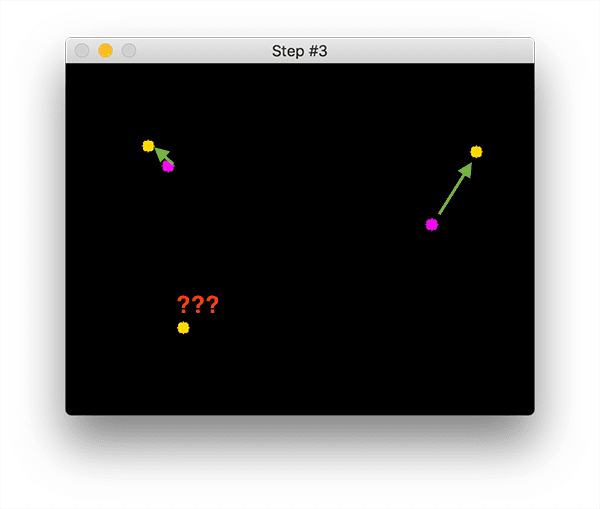

Step #3: Update (x, y)-coordinates of existing objects

The primary assumption of the centroid tracking algorithm is that a given object will potentially move in between subsequent frames, but the distance between the centroids for frames $F_t$ and $F_{t+1}$ will be smaller than all other distances between objects.

Therefore, if we choose to associate centroids with minimum distances between subsequent frames, we can build our object tracker.

In Figure 3 you can see how our centroid tracker algorithm chooses to associate centroids that minimize their respective Euclidean distances.
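One way to express "associate centroids that minimize their distance" is the greedy scheme used by the implementation later in this post: visit existing objects in order of how close their nearest detection is, and let each one claim that detection unless the row or column has already been used. A minimal sketch on a hand-written distance matrix (the numbers and the helper name greedy_match are illustrative assumptions):

```python
import numpy as np

def greedy_match(D):
    """Return (row, col) pairs matching existing objects (rows) to detections (cols)."""
    # visit rows in order of their smallest distance, most confident first
    rows = D.min(axis=1).argsort()
    # for each visited row, the column index of its nearest detection
    cols = D.argmin(axis=1)[rows]
    usedRows, usedCols, matches = set(), set(), []
    for row, col in zip(rows, cols):
        # skip the pair if either side has already been claimed
        if row in usedRows or col in usedCols:
            continue
        matches.append((int(row), int(col)))
        usedRows.add(row)
        usedCols.add(col)
    return matches

# 2 existing objects x 3 new detections
D = np.array([[5.0, 180.5, 95.3],
              [90.1, 110.2, 3.6]])
print(greedy_match(D))  # prints [(1, 2), (0, 0)]
```

Column 1 is never claimed by anyone; that leftover detection plays the role of the unmatched point in Figure 3.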

But what about the lonely point in the bottom-left?

It didn’t get associated with anything — what do we do with it?

Step #4: Register new objects

In the event that there are more input detections than existing objects being tracked, we need to register the new objects. “Registering” simply means that we are adding each new object to our list of tracked objects by:

- Assigning it a new object ID

- Storing the centroid of the bounding box coordinates for that object

We can then go back to Step #2 and repeat the pipeline of steps for every frame in our video stream.

Figure 4 demonstrates the process of using the minimum Euclidean distances to associate existing object IDs and then registering a new object.
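Registration only needs the set of detection indexes that matching left unclaimed. A small sketch, assuming a plain dict for the tracked objects and a hypothetical register_unmatched helper that takes and returns the next free ID (the real class keeps both as attributes):

```python
import numpy as np

def register_unmatched(objects, nextObjectID, newCentroids, usedCols):
    """Give every unmatched detection a fresh ID; return the next free ID."""
    for col in range(len(newCentroids)):
        if col in usedCols:
            continue  # already associated with an existing object ID
        objects[nextObjectID] = newCentroids[col]
        nextObjectID += 1
    return nextObjectID

objects = {0: np.array([30, 40]), 1: np.array([120, 80])}
newCentroids = np.array([[33, 44], [200, 10], [118, 83]])
# suppose matching assigned column 0 to object 0 and column 2 to object 1
objects[0], objects[1] = newCentroids[0], newCentroids[2]
nextObjectID = register_unmatched(objects, 2, newCentroids, usedCols={0, 2})
```

After this call the detection at (200, 10) is tracked as object 2 and the next free ID is 3.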

Step #5: Deregister old objects

Any reasonable object tracking algorithm needs to be able to handle when an object has been lost, disappeared, or left the field of view.

Exactly how you handle these situations is really dependent on where your object tracker is meant to be deployed, but for this implementation, we will deregister old objects when they cannot be matched to any new detections for more than N consecutive frames.
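A minimal sketch of that bookkeeping, assuming a per-object counter that is reset on every successful match. Here maxDisappeared plays the role of N and, as in the implementation later in this post, an object is dropped once its counter exceeds it. The helper mark_missing is hypothetical:

```python
def mark_missing(objects, disappeared, missingIDs, maxDisappeared):
    """Increment counters for unmatched IDs and deregister any over the limit."""
    for objectID in missingIDs:
        disappeared[objectID] += 1
        if disappeared[objectID] > maxDisappeared:
            # deregister: forget both the centroid and its counter
            del objects[objectID]
            del disappeared[objectID]

objects = {0: (30, 40), 1: (120, 80)}
disappeared = {0: 0, 1: 0}
# object 1 goes unmatched for three frames in a row, with N = 2
for _ in range(3):
    mark_missing(objects, disappeared, [1], maxDisappeared=2)
```

Object 1 survives the first two misses and is removed on the third, while object 0 is untouched.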

Object tracking project structure

To see today’s project structure in your terminal, simply use the tree command:

```
$ tree --dirsfirst
.
├── pyimagesearch
│   ├── __init__.py
│   └── centroidtracker.py
├── object_tracker.py
├── deploy.prototxt
└── res10_300x300_ssd_iter_140000.caffemodel

1 directory, 5 files
```

Our pyimagesearch module is not pip-installable — it is included with today’s “Downloads” (which you’ll find at the bottom of this post). Inside you’ll find the centroidtracker.py file which contains the CentroidTracker class.

The CentroidTracker class is an important component used in the object_tracker.py driver script.

The remaining .prototxt and .caffemodel files are part of the OpenCV deep learning face detector. They are necessary for today’s face detection + tracking method, but you could easily use another form of detection (more on that later).

Be sure that you have NumPy, SciPy, and imutils installed before you proceed:

$ pip install numpy scipy imutils

…in addition to having OpenCV 3.3+ installed. If you follow one of my OpenCV install tutorials, be sure to replace the tail end of the wget command to grab at least OpenCV 3.3 (and update the paths in the CMake command). You’ll need 3.3+ to ensure you have the DNN module.

Implementing centroid tracking with OpenCV

Before we can apply object tracking to our input video streams, we first need to implement the centroid tracking algorithm. While you’re digesting this centroid tracker script, just keep in mind Steps 1-5 above and review the steps as necessary.

As you’ll see, the translation of steps to code requires quite a bit of thought, and while we perform all steps, they aren’t linear due to the nature of our various data structures and code constructs.

I would suggest:

- Reading the steps above

- Reading the code explanation for the centroid tracker

- And finally reading the steps above once more

This process will bring everything full circle and allow you to wrap your head around the algorithm.

Once you’re sure you understand the steps in the centroid tracking algorithm, open up centroidtracker.py inside the pyimagesearch module and let’s review the code:

```python
# import the necessary packages
from scipy.spatial import distance as dist
from collections import OrderedDict
import numpy as np

class CentroidTracker():
    def __init__(self, maxDisappeared=50):
        # initialize the next unique object ID along with two ordered
        # dictionaries used to keep track of mapping a given object
        # ID to its centroid and number of consecutive frames it has
        # been marked as "disappeared", respectively
        self.nextObjectID = 0
        self.objects = OrderedDict()
        self.disappeared = OrderedDict()

        # store the number of maximum consecutive frames a given
        # object is allowed to be marked as "disappeared" until we
        # need to deregister the object from tracking
        self.maxDisappeared = maxDisappeared
```

On Lines 2-4 we import our required packages and modules: distance, OrderedDict, and numpy.

Our CentroidTracker class is defined on Line 6. The constructor accepts a single parameter, maxDisappeared: the maximum number of consecutive frames a given object can be lost/disappeared before we remove it from our tracker (Line 7).

Our constructor builds four instance variables:

- nextObjectID: A counter used to assign unique IDs to each object (Line 12). In the case that an object leaves the frame and does not come back for more than maxDisappeared frames, a new (next) object ID would be assigned.

- objects: A dictionary that uses the object ID as the key and the centroid (x, y)-coordinates as the value (Line 13).

- disappeared: Maintains the number of consecutive frames (value) a particular object ID (key) has been marked as “lost” (Line 14).

- maxDisappeared: The number of consecutive frames an object is allowed to be marked as “lost/disappeared” until we deregister the object.

Let’s define the register method which is responsible for adding new objects to our tracker:

```python
    def register(self, centroid):
        # when registering an object we use the next available object
        # ID to store the centroid
        self.objects[self.nextObjectID] = centroid
        self.disappeared[self.nextObjectID] = 0
        self.nextObjectID += 1
```

The register method is defined on Line 21. It accepts a centroid and then adds it to the objects dictionary using the next available object ID.

The number of times an object has disappeared is initialized to 0 in the disappeared dictionary (Line 25).

Finally, we increment the nextObjectID so that if a new object comes into view, it will be associated with a unique ID (Line 26).

Similar to our register method, we also need a deregister method:

```python
    def deregister(self, objectID):
        # to deregister an object ID we delete the object ID from
        # both of our respective dictionaries
        del self.objects[objectID]
        del self.disappeared[objectID]
```

Just like we can add new objects to our tracker, we also need the ability to remove old ones that have been lost or have disappeared from the input frames themselves.

The deregister method is defined on Line 28. It simply deletes the objectID from both the objects and disappeared dictionaries (Lines 31 and 32).

The heart of our centroid tracker implementation lives inside the update method:

```python
    def update(self, rects):
        # check to see if the list of input bounding box rectangles
        # is empty
        if len(rects) == 0:
            # loop over any existing tracked objects and mark them
            # as disappeared
            for objectID in list(self.disappeared.keys()):
                self.disappeared[objectID] += 1

                # if we have reached a maximum number of consecutive
                # frames where a given object has been marked as
                # missing, deregister it
                if self.disappeared[objectID] > self.maxDisappeared:
                    self.deregister(objectID)

            # return early as there are no centroids or tracking info
            # to update
            return self.objects
```

The update method, defined on Line 34, accepts a list of bounding box rectangles, presumably from an object detector (Haar cascade, HOG + Linear SVM, SSD, Faster R-CNN, etc.). Each entry in rects is assumed to be a tuple with this structure: (startX, startY, endX, endY).

If there are no detections, we’ll loop over all object IDs and increment their disappeared count (Lines 37-41). We’ll also check if we have reached the maximum number of consecutive frames a given object has been marked as missing. If that is the case we need to remove it from our tracking systems (Lines 46 and 47). Since there is no tracking info to update, we go ahead and return early on Line 51.

Otherwise, we have quite a bit of work to do over the next seven code blocks in the update method:

```python
        # initialize an array of input centroids for the current frame
        inputCentroids = np.zeros((len(rects), 2), dtype="int")

        # loop over the bounding box rectangles
        for (i, (startX, startY, endX, endY)) in enumerate(rects):
            # use the bounding box coordinates to derive the centroid
            cX = int((startX + endX) / 2.0)
            cY = int((startY + endY) / 2.0)
            inputCentroids[i] = (cX, cY)
```

On Line 54 we’ll initialize a NumPy array to store the centroids for each rect.

Then, we loop over the bounding box rectangles (Line 57), compute each centroid, and store it in the inputCentroids array (Lines 59-61).

If there are currently no objects we are tracking, we’ll register each of the new objects:

```python
        # if we are currently not tracking any objects take the input
        # centroids and register each of them
        if len(self.objects) == 0:
            for i in range(0, len(inputCentroids)):
                self.register(inputCentroids[i])
```

Otherwise, we need to update any existing object (x, y)-coordinates based on the centroid location that minimizes the Euclidean distance between them:

```python
        # otherwise, we are currently tracking objects so we need to
        # try to match the input centroids to existing object
        # centroids
        else:
            # grab the set of object IDs and corresponding centroids
            objectIDs = list(self.objects.keys())
            objectCentroids = list(self.objects.values())

            # compute the distance between each pair of object
            # centroids and input centroids, respectively -- our
            # goal will be to match an input centroid to an existing
            # object centroid
            D = dist.cdist(np.array(objectCentroids), inputCentroids)

            # in order to perform this matching we must (1) find the
            # smallest value in each row and then (2) sort the row
            # indexes based on their minimum values so that the row
            # with the smallest value is at the *front* of the index
            # list
            rows = D.min(axis=1).argsort()

            # next, for each row find the column index holding the
            # smallest value (i.e., the closest input centroid), then
            # reorder those indexes using the sorted row index list
            cols = D.argmin(axis=1)[rows]
```

The updates to existing tracked objects take place beginning at the else on Line 72. The goal is to track the objects and to maintain correct object IDs — this process is accomplished by computing the Euclidean distances between all pairs of objectCentroids and inputCentroids , followed by associating object IDs that minimize the Euclidean distance.

Inside of the else block beginning on Line 72, we will:

- Grab objectIDs and objectCentroids values (Lines 74 and 75).

- Compute the distance between each pair of existing object centroids and new input centroids (Line 81). The output NumPy array shape of our distance map D will be (# of object centroids, # of input centroids).

- To perform the matching we must (1) find the smallest value in each row, and (2) sort the row indexes based on the minimum values (Line 88). For the columns, we find the index of the smallest value in each row (i.e., the closest input centroid for each existing object) and then reorder those indexes based on the sorted rows (Line 93). Our goal is to have the index values with the smallest corresponding distance at the front of the lists.

The next step is to use the distances to see if we can associate object IDs:

```python
            # in order to determine if we need to update, register,
            # or deregister an object we need to keep track of which
            # of the rows and column indexes we have already examined
            usedRows = set()
            usedCols = set()

            # loop over the combination of the (row, column) index
            # tuples
            for (row, col) in zip(rows, cols):
                # if we have already examined either the row or
                # column value before, ignore it
                # (row = existing object, col = input centroid)
                if row in usedRows or col in usedCols:
                    continue

                # otherwise, grab the object ID for the current row,
                # set its new centroid, and reset the disappeared
                # counter
                objectID = objectIDs[row]
                self.objects[objectID] = inputCentroids[col]
                self.disappeared[objectID] = 0

                # indicate that we have examined each of the row and
                # column indexes, respectively
                usedRows.add(row)
                usedCols.add(col)
```

Inside the code block above, we:

- Initialize two sets to determine which row and column indexes we have already used (Lines 98 and 99). Keep in mind that a set is similar to a list but it contains only unique values.

- Then we loop over the combinations of (row, col) index tuples (Line 103) in order to update our object centroids:

  - If we’ve already used either this row or column index, ignore it and continue to loop (Lines 107 and 108).

  - Otherwise, we have found an input centroid that:

    1. Has the smallest Euclidean distance to an existing centroid
    2. And has not been matched with any other object

  - In that case, we update the object centroid and reset its disappeared counter (Lines 113-115), and make sure to add the row and col to their respective usedRows and usedCols sets.

There are likely row and column indexes that are not in our usedRows and usedCols sets, meaning we have NOT examined them yet:

```python
            # compute both the row and column index we have NOT yet
            # examined
            unusedRows = set(range(0, D.shape[0])).difference(usedRows)
            unusedCols = set(range(0, D.shape[1])).difference(usedCols)
```

So we must determine which centroid indexes we haven’t examined yet and store them in two new convenient sets (unusedRows and unusedCols) on Lines 124 and 125.

Our final check handles any objects that have been lost or have potentially disappeared:

```python
            # in the event that the number of object centroids is
            # equal or greater than the number of input centroids
            # we need to check and see if some of these objects have
            # potentially disappeared
            if D.shape[0] >= D.shape[1]:
                # loop over the unused row indexes
                for row in unusedRows:
                    # grab the object ID for the corresponding row
                    # index and increment the disappeared counter
                    objectID = objectIDs[row]
                    self.disappeared[objectID] += 1

                    # check to see if the number of consecutive
                    # frames the object has been marked "disappeared"
                    # for warrants deregistering the object
                    if self.disappeared[objectID] > self.maxDisappeared:
                        self.deregister(objectID)
```

To finish up:

- If the number of object centroids is greater than or equal to the number of input centroids (Line 131):

  - We need to verify if any of these objects are lost or have disappeared by looping over unused row indexes, if any (Line 133).

  - In the loop, we will:

    1. Increment their disappeared count in the dictionary (Line 137).
    2. Check if the disappeared count exceeds the maxDisappeared threshold (Line 142), and, if so, deregister the object (Line 143).

Otherwise, the number of input centroids is greater than the number of existing object centroids, so we have new objects to register and track:

```python
            # otherwise, if the number of input centroids is greater
            # than the number of existing object centroids we need to
            # register each new input centroid as a trackable object
            else:
                for col in unusedCols:
                    self.register(inputCentroids[col])

        # return the set of trackable objects
        return self.objects
```

We loop over the unusedCols indexes (Line 149) and we register each new centroid (Line 150). Finally, we’ll return the set of trackable objects to the calling method (Line 153).
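One caveat worth knowing before you reuse this code: cols is built from each row's argmin, so two existing objects can both pick the same input centroid. When that happens while there are as many (or more) object centroids as input centroids, the leftover input centroid lands in unusedCols but is not registered on that frame, because only the branch on Line 131 runs. The sketch below is a compact, self-contained variant (the class name SimpleCentroidTracker and the markMissing helper are hypothetical, and it swaps dist.cdist for an equivalent NumPy expression to avoid the SciPy dependency) that handles unused rows and unused columns on every frame. Treat it as one possible adjustment, not the original implementation:

```python
from collections import OrderedDict
import numpy as np

class SimpleCentroidTracker:
    """Hypothetical compact variant of CentroidTracker for experimentation."""

    def __init__(self, maxDisappeared=50):
        self.nextObjectID = 0
        self.objects = OrderedDict()      # object ID -> centroid
        self.disappeared = OrderedDict()  # object ID -> consecutive misses
        self.maxDisappeared = maxDisappeared

    def register(self, centroid):
        self.objects[self.nextObjectID] = centroid
        self.disappeared[self.nextObjectID] = 0
        self.nextObjectID += 1

    def deregister(self, objectID):
        del self.objects[objectID]
        del self.disappeared[objectID]

    def markMissing(self, objectID):
        # one more consecutive miss; drop the object once it exceeds the limit
        self.disappeared[objectID] += 1
        if self.disappeared[objectID] > self.maxDisappeared:
            self.deregister(objectID)

    def update(self, rects):
        if len(rects) == 0:
            for objectID in list(self.disappeared.keys()):
                self.markMissing(objectID)
            return self.objects

        # Step 1: (startX, startY, endX, endY) boxes -> integer centroids
        inputCentroids = np.zeros((len(rects), 2), dtype="int")
        for (i, (startX, startY, endX, endY)) in enumerate(rects):
            inputCentroids[i] = (int((startX + endX) / 2.0),
                                 int((startY + endY) / 2.0))

        if len(self.objects) == 0:
            for centroid in inputCentroids:
                self.register(centroid)
            return self.objects

        objectIDs = list(self.objects.keys())
        objectCentroids = np.array(list(self.objects.values()), dtype="float")

        # Step 2: Euclidean distances, rows = objects, cols = input centroids
        D = np.linalg.norm(objectCentroids[:, np.newaxis, :]
                           - inputCentroids[np.newaxis, :, :], axis=2)

        # Step 3: greedy association, most confident rows first
        rows = D.min(axis=1).argsort()
        cols = D.argmin(axis=1)[rows]
        usedRows, usedCols = set(), set()
        for (row, col) in zip(rows, cols):
            if row in usedRows or col in usedCols:
                continue
            objectID = objectIDs[row]
            self.objects[objectID] = inputCentroids[col]
            self.disappeared[objectID] = 0
            usedRows.add(row)
            usedCols.add(col)

        # Step 5: every unmatched existing object counts as missing...
        for row in sorted(set(range(D.shape[0])) - usedRows):
            self.markMissing(objectIDs[row])

        # Step 4: ...and every unmatched input centroid is registered
        for col in sorted(set(range(D.shape[1])) - usedCols):
            self.register(inputCentroids[col])

        return self.objects


tracker = SimpleCentroidTracker(maxDisappeared=1)
tracker.update([(0, 0, 20, 20), (200, 0, 220, 20)])    # IDs 0 and 1 registered
tracker.update([(5, 0, 25, 20), (205, 0, 225, 20)])    # both shift 5 px right
# both objects are closest to the first box; the far box is a new object
tracker.update([(10, 0, 30, 20), (990, 0, 1010, 20)])
```

In the last frame, object 1 is marked as missing once and the far detection becomes object 2 right away; with the if/else version above, that detection would wait until a later frame to be registered.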

Understanding the centroid tracking distance relationship

Our centroid tracking implementation was quite long, and admittedly, the most confusing aspect of the algorithm is Lines 81-93.

If you’re having trouble following along with what that code is doing you should consider opening a Python shell and performing the following experiment:

```
>>> from scipy.spatial import distance as dist
>>> import numpy as np
>>> np.random.seed(42)
>>> objectCentroids = np.random.uniform(size=(2, 2))
>>> centroids = np.random.uniform(size=(3, 2))
>>> D = dist.cdist(objectCentroids, centroids)
>>> D
array([[0.82421549, 0.32755369, 0.33198071],
       [0.72642889, 0.72506609, 0.17058938]])
```

Once you’ve started a Python shell in your terminal with the python command, import distance and numpy as shown on Lines 1 and 2.

Then, set a seed for reproducibility (Line 3) and generate 2 (random) existing objectCentroids (Line 4) and 3 input centroids (Line 5).

From there, compute the Euclidean distance between the pairs (Line 6) and display the results (Lines 7-9). The result is a matrix D of distances with two rows (# of existing object centroids) and three columns (# of new input centroids).

Just like we did earlier in the script, let’s find the minimum distance in each row and sort the indexes based on this value:

```
>>> D.min(axis=1)
array([0.32755369, 0.17058938])
>>> rows = D.min(axis=1).argsort()
>>> rows
array([1, 0])
```

First, we find the minimum value for each row, which tells us how close each existing object is to its nearest input centroid (Lines 10 and 11). By then sorting on these values (Line 12) we can obtain the indexes of these rows (Lines 13 and 14).

In this case, the second row (index 1) has the smallest value and then the first row (index 0) has the next smallest value.

Using a similar process for the columns:

```
>>> D.argmin(axis=1)
array([1, 2])
>>> cols = D.argmin(axis=1)[rows]
>>> cols
array([2, 1])
```

…we first find, for each row, the column index of the smallest value, i.e., the input centroid closest to each existing object (Lines 15 and 16).

We then reorder these column indexes using our sorted rows (Lines 17-19).

Let’s print the results and analyze them:

```
>>> print(list(zip(rows, cols)))
[(1, 2), (0, 1)]
```

The final step is to combine them using zip (Line 20). The resulting list is printed on Line 21.

Analyzing the results, we find that:

- D[1, 2] has the smallest Euclidean distance, implying that the second existing object will be matched against the third input centroid.

- And D[0, 1] has the next smallest Euclidean distance, which implies that the first existing object will be matched against the second input centroid.
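To see the rest of the update logic play out on this same example, the sketch below plugs the printed distance matrix straight into the de-duplication loop and the unused-index bookkeeping from the update method. The values are copied from the shell output above, so no seed or SciPy call is needed; the matches list is an addition for display only:

```python
import numpy as np

# distance matrix printed in the shell session (2 existing x 3 input centroids)
D = np.array([[0.82421549, 0.32755369, 0.33198071],
              [0.72642889, 0.72506609, 0.17058938]])

rows = D.min(axis=1).argsort()
cols = D.argmin(axis=1)[rows]

usedRows, usedCols, matches = set(), set(), []
for (row, col) in zip(rows, cols):
    # skip pairs whose object or input centroid was already claimed
    if row in usedRows or col in usedCols:
        continue
    matches.append((int(row), int(col)))
    usedRows.add(row)
    usedCols.add(col)

unusedRows = set(range(0, D.shape[0])).difference(usedRows)
unusedCols = set(range(0, D.shape[1])).difference(usedCols)
print(matches, unusedRows, unusedCols)  # prints [(1, 2), (0, 1)] set() {0}
```

Because D has fewer rows than columns here, the update method would take its final else branch and register the first input centroid (column 0) as a brand-new object.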

I’d like to reiterate here that now that you’ve reviewed the code, you should go back and review the steps to the algorithm in the previous section. From there you’ll be able to associate the code with the more linear steps outlined here.

Implementing the object tracking driver script

Now that we have implemented our CentroidTracker class, let’s put it to work with an object tracking driver script.

The driver script is where you can use your own preferred object detector, provided that it produces a set of bounding boxes. This could be a Haar Cascade, HOG + Linear SVM, YOLO, SSD, Faster R-CNN, etc. For this example script, I’m making use of OpenCV’s deep learning face detector, but feel free to make your own version of the script which implements a different detector.

Inside this script, we will:

- Work with a live VideoStream object to grab frames from your webcam

- Load and utilize OpenCV’s deep learning face detector

- Instantiate our CentroidTracker and use it to track face objects in the video stream

- And display our results, which include bounding boxes and object ID annotations overlaid on the frames

When you’re ready, open up object_tracker.py from today’s “Downloads” and follow along:

```python
# import the necessary packages
from pyimagesearch.centroidtracker import CentroidTracker
from imutils.video import VideoStream
import numpy as np
import argparse
import imutils
import time
import cv2

# construct the argument parse and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-p", "--prototxt", required=True,
    help="path to Caffe 'deploy' prototxt file")
ap.add_argument("-m", "--model", required=True,
    help="path to Caffe pre-trained model")
ap.add_argument("-c", "--confidence", type=float, default=0.5,
    help="minimum probability to filter weak detections")
args = vars(ap.parse_args())
```

First, we specify our imports. Most notably we’re using the CentroidTracker class that we just reviewed. We’re also going to use VideoStream from imutils and OpenCV.

We have three command line arguments which are all related to our deep learning face detector:

- --prototxt: The path to the Caffe “deploy” prototxt.

- --model: The path to the pre-trained Caffe model.

- --confidence: Our probability threshold to filter weak detections. I found that a default value of 0.5 is sufficient.

The prototxt and model files come from OpenCV’s repository and I’m including them in the “Downloads” for your convenience.

Note: In case you missed it at the start of this section, I’ll repeat that you can use any detector you wish. As an example, we’re using a deep learning face detector which produces bounding boxes. Feel free to experiment with other detectors, just be sure that you have capable hardware to keep up with the more complex ones (some may run best with a GPU, but today’s face detector can easily run on a CPU).

Next, let’s perform our initializations:

```python
# initialize our centroid tracker and frame dimensions
ct = CentroidTracker()
(H, W) = (None, None)

# load our serialized model from disk
print("[INFO] loading model...")
net = cv2.dnn.readNetFromCaffe(args["prototxt"], args["model"])

# initialize the video stream and allow the camera sensor to warmup
print("[INFO] starting video stream...")
vs = VideoStream(src=0).start()
time.sleep(2.0)
```

In the above block, we:

- Instantiate our CentroidTracker, ct (Line 21). Recall from the explanation in the previous section that this object has three methods: (1) register, (2) deregister, and (3) update. We’re only going to use the update method as it will register and deregister objects automatically. We also initialize H and W (our frame dimensions) to None (Line 22).

- Load our serialized deep learning face detector model from disk using OpenCV’s DNN module (Line 26).

- Start our VideoStream, vs (Line 30). With vs handy, we’ll be able to capture frames from our camera in our next while loop. We’ll allow our camera 2.0 seconds to warm up (Line 31).

Now let’s begin our while loop and start tracking face objects:

```python
# loop over the frames from the video stream
while True:
    # read the next frame from the video stream and resize it
    frame = vs.read()
    frame = imutils.resize(frame, width=400)

    # if the frame dimensions are None, grab them
    if W is None or H is None:
        (H, W) = frame.shape[:2]

    # construct a blob from the frame, pass it through the network,
    # obtain our output predictions, and initialize the list of
    # bounding box rectangles
    blob = cv2.dnn.blobFromImage(frame, 1.0, (W, H),
        (104.0, 177.0, 123.0))
    net.setInput(blob)
    detections = net.forward()
    rects = []
```

We loop over frames and resize them to a fixed width (while preserving aspect ratio) on Lines 34-37. Our frame dimensions are grabbed as needed (Lines 40 and 41).

Then we pass the frame through the CNN object detector to obtain predictions and object locations (Lines 46-49).

We initialize rects, our list of bounding box rectangles, on Line 50.

From there, let’s process the detections:

```python
    # loop over the detections
    for i in range(0, detections.shape[2]):
        # filter out weak detections by ensuring the predicted
        # probability is greater than a minimum threshold
        if detections[0, 0, i, 2] > args["confidence"]:
            # compute the (x, y)-coordinates of the bounding box for
            # the object, then update the bounding box rectangles list
            box = detections[0, 0, i, 3:7] * np.array([W, H, W, H])
            rects.append(box.astype("int"))

            # draw a bounding box surrounding the object so we can
            # visualize it
            (startX, startY, endX, endY) = box.astype("int")
            cv2.rectangle(frame, (startX, startY), (endX, endY),
                (0, 255, 0), 2)
```

We loop over the detections beginning on Line 53. If the detection exceeds our confidence threshold, indicating a valid detection, we:

- Compute the bounding box coordinates and append them to the rects list (Lines 59 and 60)

- Draw a bounding box around the object (Lines 64-66)
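If you want to check the box-scaling step without a camera or model file, note that the code above treats the detector output as a 4D array indexed as detections[0, 0, i, ...], where index 2 holds the confidence and indexes 3-6 hold normalized (startX, startY, endX, endY) values. The sketch below fakes such an array with made-up numbers (the helper detections_to_rects is hypothetical) and applies the same filtering and scaling:

```python
import numpy as np

def detections_to_rects(detections, W, H, minConfidence=0.5):
    """Convert a (1, 1, N, 7) detection array into integer pixel boxes."""
    rects = []
    for i in range(0, detections.shape[2]):
        # index 2 is the confidence score
        if detections[0, 0, i, 2] > minConfidence:
            # indexes 3:7 are normalized corners; scale them to pixels
            box = detections[0, 0, i, 3:7] * np.array([W, H, W, H])
            rects.append(box.astype("int"))
    return rects

# two fake detections for a 400x300 frame; only the first passes 0.5
fake = np.array([[[[0, 1, 0.92, 0.125, 0.25, 0.50, 0.75],
                   [0, 1, 0.31, 0.500, 0.50, 0.75, 0.90]]]])
rects = detections_to_rects(fake, W=400, H=300)
```

The surviving box is scaled to (50, 75, 200, 225) in pixel coordinates, which is exactly the (startX, startY, endX, endY) format that ct.update expects.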

Finally, let’s call update on our centroid tracker object, ct:

```python
    # update our centroid tracker using the computed set of bounding
    # box rectangles
    objects = ct.update(rects)

    # loop over the tracked objects
    for (objectID, centroid) in objects.items():
        # draw both the ID of the object and the centroid of the
        # object on the output frame
        text = "ID {}".format(objectID)
        cv2.putText(frame, text, (centroid[0] - 10, centroid[1] - 10),
            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)
        cv2.circle(frame, (centroid[0], centroid[1]), 4, (0, 255, 0), -1)

    # show the output frame
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

# do a bit of cleanup
cv2.destroyAllWindows()
vs.stop()
```

The ct.update call on Line 70 handles the heavy lifting in our simple Python + OpenCV object tracking script.

We would be done here and ready to loop back to the top if we didn’t care about visualization.

But that’s no fun!

On Lines 73-79 we display the centroid as a filled in circle and the unique object ID number text. Now we’ll be able to visualize the results and check to see if our CentroidTracker properly keeps track of our objects by associating the correct IDs with the objects in the video stream.

We’ll display the frame on Line 82 until the quit key (“q”) has been pressed (Lines 83-87). If the quit key is pressed, we simply break and perform cleanup (Lines 87-91).

Centroid object tracking results

To see our centroid tracker in action, use the “Downloads” section of this blog post to download the source code and OpenCV face detector. From there, open up a terminal and execute the following command:

$ python object_tracker.py --prototxt deploy.prototxt --model res10_300x300_ssd_iter_140000.caffemodel

For a more detailed demonstration of our object tracker, including commentary, be sure to refer to the video below:

Limitations and drawbacks

While our centroid tracker worked great in this example, there are two primary drawbacks of this object tracking algorithm.

The first is that it requires the object detection step to be run on every frame of the input video.

- For very fast object detectors (e.g., color thresholding and Haar cascades), having to run the detector on every input frame is likely not an issue.

- But if you are (1) using a significantly more computationally expensive object detector such as HOG + Linear SVM or deep learning-based detectors on (2) a resource-constrained device, your frame processing pipeline will slow down tremendously, as most of each frame’s processing time will be spent running a very slow detector.

The second drawback is related to the underlying assumptions of the centroid tracking algorithm itself — centroids must lie close together between subsequent frames.

- This assumption typically holds, but keep in mind we are representing our 3D world with 2D frames — what happens when an object overlaps with another one?

- The answer is that object ID switching could occur.

- If two or more objects overlap each other to the point where each one’s new centroid is closer to the other object’s previous centroid than to its own, the algorithm may (unknowingly) swap the object IDs.

- It’s important to understand that the overlapping/occluded object problem is not specific to centroid tracking — it happens for many other object trackers as well, including advanced ones.

- However, the problem is more pronounced with centroid tracking, as we rely strictly on the Euclidean distances between centroids and no additional metrics, heuristics, or learned patterns.
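The ID-switching failure is easy to reproduce with the greedy nearest-centroid rule on its own. In this hypothetical example, two objects move toward each other fast enough that, in the next frame, each one's new position is closer to the other's old centroid. The greedy_match helper mirrors the matching loop in the update method but is a standalone sketch:

```python
import numpy as np

def greedy_match(D):
    """Greedy nearest-centroid association; returns {row: col}."""
    rows = D.min(axis=1).argsort()
    cols = D.argmin(axis=1)[rows]
    usedRows, usedCols, matches = set(), set(), {}
    for (row, col) in zip(rows, cols):
        if row in usedRows or col in usedCols:
            continue
        matches[int(row)] = int(col)
        usedRows.add(row)
        usedCols.add(col)
    return matches

# frame t: ID 0 is object A at x=0, ID 1 is object B at x=10
tracked = np.array([[0.0, 0.0], [10.0, 0.0]])
# frame t+1: A has moved to x=6 and B to x=4, so they have crossed
detections = np.array([[6.0, 0.0],    # column 0: object A
                       [4.0, 0.0]])   # column 1: object B
D = np.linalg.norm(tracked[:, np.newaxis] - detections[np.newaxis], axis=2)
matches = greedy_match(D)
# ID 0 now follows B's detection and ID 1 follows A's: the IDs have swapped
```

Nothing in the distances alone can tell the tracker that a swap happened, which is why richer cues (appearance, motion models) are used by more advanced trackers.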

As long as you keep these assumptions and limitations in mind when using centroid tracking, the algorithm will work wonderfully for you.

Alternative object tracking methods

The object tracking method we implemented here today is called centroid tracking.

Provided we can apply our object detector on each and every input frame, we can apply centroid tracking to take the results of the object detector and associate each object with a unique ID (and therefore track the object as it moves throughout the scene).

However, applying a dedicated object detector to each and every frame can be computationally expensive, especially if you are using a resource-constrained device (like the Raspberry Pi, for example), and may prevent your computer vision pipeline from running in real-time.

The following tutorial on OpenCV object tracking covers the eight most popular object trackers built into the OpenCV library:

- BOOSTING tracker

- MIL tracker

- KCF tracker

- CSRT tracker

- MedianFlow tracker

- TLD tracker

- MOSSE tracker

- GOTURN tracker

Once you understand how to use OpenCV and dlib’s object trackers, you can move on to multi-object tracking:

And from there, you can start building actual real-world projects that leverage object tracking:


Summary

In today’s blog post you learned how to perform simple object tracking with OpenCV using an algorithm called centroid tracking.

The centroid tracking algorithm works by:

- Accepting bounding box coordinates for each object in every frame (presumably produced by some object detector).

- Computing the Euclidean distance between the centroids of the input bounding boxes and the centroids of the existing objects we have already been tracking.

- Updating each tracked object's centroid to the new centroid that lies at the smallest Euclidean distance from it.

- And if necessary, marking objects as either “disappeared” or deregistering them completely.
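The four steps above fit in a short class. What follows is a minimal, dependency-free sketch, assuming detections arrive as `(start_x, start_y, end_x, end_y)` boxes. `MiniCentroidTracker` and `max_disappeared` are illustrative names, and the class simplifies a few details (for example, it marks unmatched objects as disappeared and registers unmatched detections in the same pass), so treat it as a study aid rather than the tutorial's own implementation:

```python
import math
from collections import OrderedDict


class MiniCentroidTracker:
    """Sketch of centroid tracking: match by smallest Euclidean distance."""

    def __init__(self, max_disappeared=50):
        self.next_id = 0
        self.objects = OrderedDict()      # object ID -> (x, y) centroid
        self.disappeared = OrderedDict()  # object ID -> consecutive missed frames
        self.max_disappeared = max_disappeared

    def register(self, centroid):
        self.objects[self.next_id] = centroid
        self.disappeared[self.next_id] = 0
        self.next_id += 1

    def deregister(self, object_id):
        del self.objects[object_id]
        del self.disappeared[object_id]

    def update(self, boxes):
        """boxes: list of (start_x, start_y, end_x, end_y) from a detector."""
        # Step 1: convert each bounding box to its (integer) centroid.
        centroids = [((x1 + x2) // 2, (y1 + y2) // 2) for x1, y1, x2, y2 in boxes]
        if not self.objects:
            for c in centroids:
                self.register(c)
            return self.objects

        # Step 2: distance from every existing object to every new centroid.
        ids = list(self.objects)
        dist = [[math.dist(self.objects[i], c) for c in centroids] for i in ids]

        # Step 3: greedily give each object its nearest unclaimed centroid,
        # handling the objects with the smallest distances first.
        used_rows, used_cols = set(), set()
        order = sorted(range(len(ids)), key=lambda row: min(dist[row], default=math.inf))
        for row in order:
            if not centroids:
                break
            col = min(range(len(centroids)), key=lambda c: dist[row][c])
            if col in used_cols:
                continue
            self.objects[ids[row]] = centroids[col]
            self.disappeared[ids[row]] = 0
            used_rows.add(row)
            used_cols.add(col)

        # Step 4: unmatched objects are marked "disappeared" and deregistered
        # once they have been missing for more than max_disappeared frames.
        for row in range(len(ids)):
            if row not in used_rows:
                object_id = ids[row]
                self.disappeared[object_id] += 1
                if self.disappeared[object_id] > self.max_disappeared:
                    self.deregister(object_id)

        # Any centroid nobody claimed becomes a newly registered object.
        for col in range(len(centroids)):
            if col not in used_cols:
                self.register(centroids[col])
        return self.objects
```

For example, with `max_disappeared=1`, an object that goes undetected for two consecutive frames is deregistered, and a detection appearing far from every existing object is registered under the next unused ID rather than stealing an old one.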

Our centroid tracker performed well in this example tutorial but has two primary downsides:

- It requires that we run an object detector for each frame of the video — if your object detector is computationally expensive to run you would not want to utilize this method.

- It does not handle overlapping objects well; due to the nature of the Euclidean distance between centroids, it’s actually possible for our centroids to “swap IDs,” which is far from ideal.

Despite its downsides, centroid tracking can be used in quite a few object tracking applications provided (1) your environment is somewhat controlled and you don’t have to worry about potentially overlapping objects and (2) your object detector itself can be run in real-time.

If you enjoyed today’s blog post, be sure to download the code using the form below. I’ll be back next week with another object tracking tutorial!

Download the Source Code and FREE 17-page Resource Guide

Enter your email address below to get a .zip of the code and a FREE 17-page Resource Guide on Computer Vision, OpenCV, and Deep Learning. Inside you'll find my hand-picked tutorials, books, courses, and libraries to help you master CV and DL!