Last updated on July 8, 2021.

In this tutorial you will learn how to build a “people counter” with OpenCV and Python. Using OpenCV, we’ll count the number of people who are heading “in” or “out” of a department store in real-time.

Building a person counter with OpenCV has been one of the most-requested topics here on PyImageSearch, and I’ve been meaning to write a blog post on people counting for a year now. I’m incredibly thrilled to be publishing it and sharing it with you today.

Enjoy the tutorial and let me know what you think in the comments section at the bottom of the post!

To get started building a people counter with OpenCV, just keep reading!

- Update July 2021: Added section on how to improve the efficiency, speed, and FPS throughput rate of the people counter by using multi-object tracking spread across multiple processes/cores.

OpenCV People Counter with Python

In the first part of today’s blog post, we’ll be discussing the required Python packages you’ll need to build our people counter.

From there I’ll provide a brief discussion on the difference between object detection and object tracking, along with how we can leverage both to create a more accurate people counter.

Afterwards, we’ll review the directory structure for the project and then implement the entire person counting project.

Finally, we’ll examine the results of applying people counting with OpenCV to actual videos.

Required Python libraries for people counting

In order to build our people counting applications, we’ll need a number of different Python libraries, including:

- NumPy

- OpenCV

- dlib

- imutils

Additionally, you’ll also want to access the “Downloads” section of this blog post to retrieve my source code which includes:

- My special pyimagesearch module which we’ll implement and use later in this post

- The Python driver script used to start the people counter

- All example videos used here in the post

I’m going to assume you already have NumPy, OpenCV, and dlib installed on your system.

If you don’t have OpenCV installed, you’ll want to head to my OpenCV install page and follow the relevant tutorial for your particular operating system.

If you need to install dlib, you can use this guide.

Finally, you can install/upgrade your imutils via the following command:

$ pip install --upgrade imutils

Understanding object detection vs. object tracking

There is a fundamental difference between object detection and object tracking that you must understand before we proceed with the rest of this tutorial.

When we apply object detection we are determining where in an image/frame an object is. An object detector is also typically more computationally expensive, and therefore slower, than an object tracking algorithm. Examples of object detection algorithms include Haar cascades, HOG + Linear SVM, and deep learning-based object detectors such as Faster R-CNNs, YOLO, and Single Shot Detectors (SSDs).

An object tracker, on the other hand, will accept the input (x, y)-coordinates of where an object is in an image and will:

- Assign a unique ID to that particular object

- Track the object as it moves around a video stream, predicting the new object location in the next frame based on various attributes of the frame (gradient, optical flow, etc.)

Examples of object tracking algorithms include MedianFlow, MOSSE, GOTURN, kernelized correlation filters, and discriminative correlation filters, to name a few.

If you’re interested in learning more about the object tracking algorithms built into OpenCV, be sure to refer to this blog post.

Combining both object detection and object tracking

Highly accurate object trackers will combine the concept of object detection and object tracking into a single algorithm, typically divided into two phases:

- Phase 1 (Detecting): During the detection phase we are running our computationally more expensive object detector to (1) detect if new objects have entered our view, and (2) see if we can find objects that were “lost” during the tracking phase. For each detected object we create or update an object tracker with the new bounding box coordinates. Since our object detector is more computationally expensive we only run this phase once every N frames.

- Phase 2 (Tracking): When we are not in the “detecting” phase we are in the “tracking” phase. For each of our detected objects, we create an object tracker to track the object as it moves around the frame. Our object tracker should be faster and more efficient than the object detector. We’ll continue tracking until we’ve reached the N-th frame and then re-run our object detector. The entire process then repeats.

The benefit of this hybrid approach is that we can apply highly accurate object detection methods without as much of the computational burden. We will be implementing such a tracking system to build our people counter.
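The alternation between the two phases can be sketched as a simple schedule. The helper name phase_for_frame below is hypothetical (it is not part of the project code); it only illustrates which frames would trigger the detector for a given N.

```python
def phase_for_frame(frame_index, skip_frames):
    """Return which phase a frame falls into (hypothetical helper)."""
    # the detector runs on frame 0 and then on every N-th frame after it
    if frame_index % skip_frames == 0:
        return "detecting"
    # every other frame is handled by the cheaper object trackers
    return "tracking"


# with N = 5, only a handful of the first 12 frames run the detector
schedule = [phase_for_frame(i, skip_frames=5) for i in range(12)]
detector_frames = [i for i, phase in enumerate(schedule) if phase == "detecting"]
print(detector_frames)
```

The larger N is, the less often the expensive detector runs, at the cost of waiting longer before new or lost people are picked up again.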

Project structure

Let’s review the project structure for today’s blog post. Once you’ve grabbed the code from the “Downloads” section, you can inspect the directory structure with the tree command:

$ tree --dirsfirst
.
├── pyimagesearch
│   ├── __init__.py
│   ├── centroidtracker.py
│   └── trackableobject.py
├── mobilenet_ssd
│   ├── MobileNetSSD_deploy.caffemodel
│   └── MobileNetSSD_deploy.prototxt
├── videos
│   ├── example_01.mp4
│   └── example_02.mp4
├── output
│   ├── output_01.avi
│   └── output_02.avi
└── people_counter.py

4 directories, 10 files

Zeroing in on the most-important two directories, we have:

- pyimagesearch/: This module contains the centroid tracking algorithm. The centroid tracking algorithm is covered in the “Combining object tracking algorithms” section, but the code is not. For a review of the centroid tracking code (centroidtracker.py) you should refer to the first post in the series.

- mobilenet_ssd/: Contains the Caffe deep learning model files. We’ll be using a MobileNet Single Shot Detector (SSD) which is covered at the top of this blog post in the section, “Single Shot Detectors for object detection”.

The heart of today’s project is contained within the people_counter.py script — that’s where we’ll spend most of our time. We’ll also review the trackableobject.py script today.

Combining object tracking algorithms

To implement our people counter we’ll be using both OpenCV and dlib. We’ll use OpenCV for standard computer vision/image processing functions, along with the deep learning object detector for people counting.

We’ll then use dlib for its implementation of correlation filters. We could use OpenCV here as well; however, the dlib object tracking implementation was a bit easier to work with for this project.

I’ll be including a deep dive into dlib’s object tracking algorithm in next week’s post.

Along with dlib’s object tracking implementation, we’ll also be using my implementation of centroid tracking from a few weeks ago. Reviewing the entire centroid tracking algorithm is outside the scope of this blog post, but I’ve included a brief overview below.

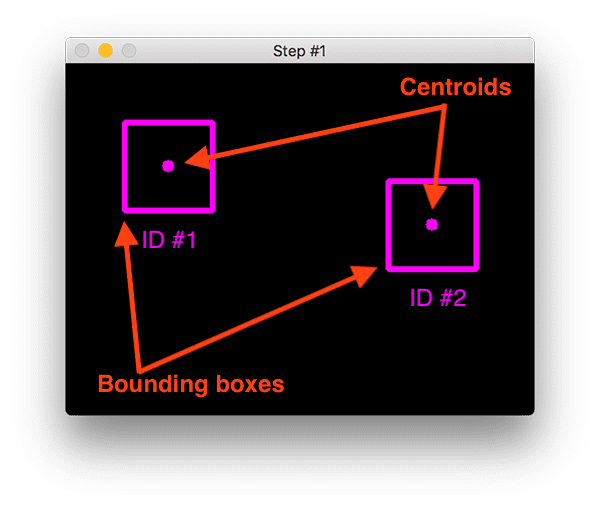

At Step #1 we accept a set of bounding boxes and compute their corresponding centroids (i.e., the center of the bounding boxes):

The bounding boxes themselves can be provided by either:

- An object detector (such as HOG + Linear SVM, Faster R-CNN, SSDs, etc.)

- Or an object tracker (such as correlation filters)

In the above image you can see that we have two objects to track in this initial iteration of the algorithm.
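Step #1 can be sketched in a few lines of plain Python. The function name compute_centroids and the box values are made up for illustration; the boxes use the same (startX, startY, endX, endY) layout as the rest of this post.

```python
def compute_centroids(rects):
    """Compute the integer center of each (startX, startY, endX, endY) box."""
    centroids = []
    for (startX, startY, endX, endY) in rects:
        # the centroid is the midpoint of the box along each axis
        cX = int((startX + endX) / 2.0)
        cY = int((startY + endY) / 2.0)
        centroids.append((cX, cY))
    return centroids


# two illustrative bounding boxes, i.e., two objects to track
rects = [(10, 20, 50, 100), (200, 40, 260, 160)]
centroids = compute_centroids(rects)
print(centroids)
```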

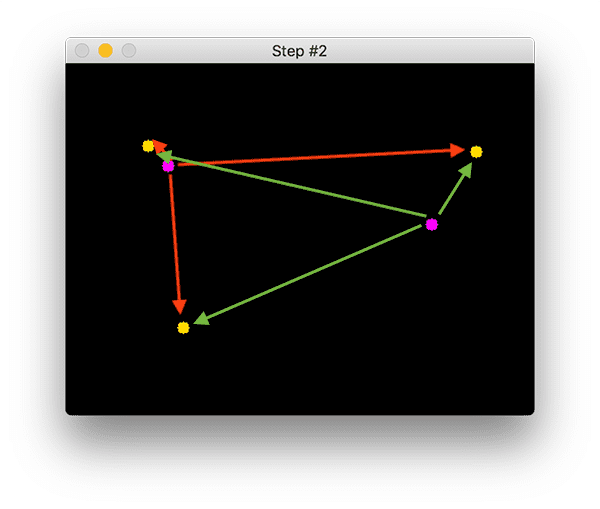

During Step #2 we compute the Euclidean distance between any new centroids (yellow) and existing centroids (purple):

The centroid tracking algorithm makes the assumption that pairs of centroids with minimum Euclidean distance between them must be the same object ID.

In the example image above we have two existing centroids (purple) and three new centroids (yellow), implying that a new object has been detected (since there is one more new centroid vs. old centroid).

The arrows then represent computing the Euclidean distances between all purple centroids and all yellow centroids.
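As a minimal sketch of Step #2, the distances can be arranged in a table with one row per existing centroid and one column per new centroid. The function name and the coordinates below are assumptions made for illustration only.

```python
import math


def pairwise_distances(existing, new):
    """Return D where D[i][j] is the distance from existing[i] to new[j]."""
    return [
        [math.hypot(e[0] - n[0], e[1] - n[1]) for n in new]
        for e in existing
    ]


existing = [(30, 60), (230, 100)]            # the "purple" centroids
new = [(33, 64), (226, 97), (120, 300)]      # the "yellow" centroids
D = pairwise_distances(existing, new)

# the smallest value in each row points at the most likely match
for row in D:
    print([round(d, 1) for d in row])
```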

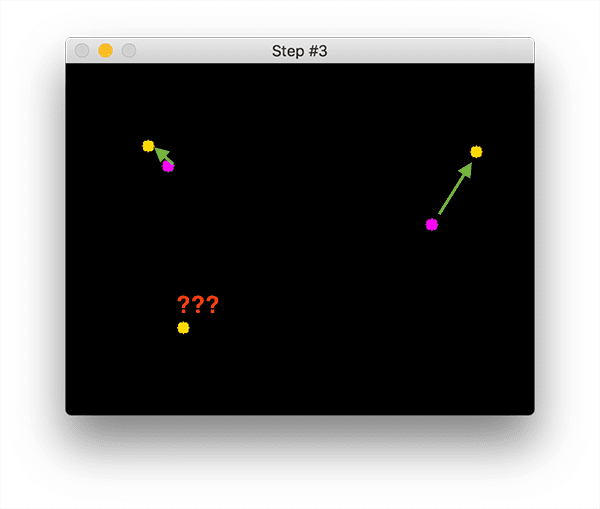

Once we have the Euclidean distances we attempt to associate object IDs in Step #3:

In Figure 3 you can see that our centroid tracker has chosen to associate centroids that minimize their respective Euclidean distances.

But what about the point in the bottom-left?

It didn’t get associated with anything — what do we do?

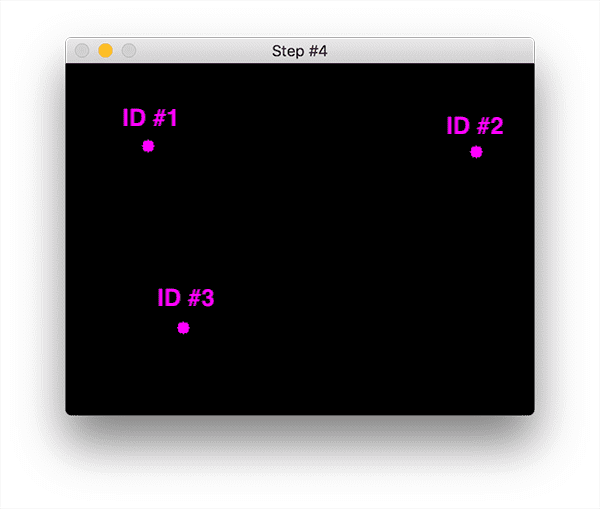

To answer that question we need to perform Step #4, registering new objects:

Registering simply means that we are adding the new object to our list of tracked objects by:

- Assigning it a new object ID

- Storing the centroid of the bounding box coordinates for the new object
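Steps #3 and #4 can be sketched together as a greedy matcher. This is a simplified illustration, not the CentroidTracker implementation from the earlier post: the function name associate is hypothetical, and existing objects that find no match are simply left out of the result here rather than being tracked as “disappeared”.

```python
import math


def associate(existing, new_centroids, next_id):
    """Greedily match existing object IDs to new centroids by distance.

    existing: dict mapping object ID -> (x, y) centroid
    new_centroids: list of (x, y) centroids from the current frame
    Returns the updated dict and the next unused object ID.
    """
    # build every (distance, objectID, new index) candidate pair
    pairs = sorted(
        (math.hypot(c[0] - n[0], c[1] - n[1]), object_id, j)
        for object_id, c in existing.items()
        for j, n in enumerate(new_centroids)
    )

    updated = {}
    used_ids, used_new = set(), set()
    # take the closest pairs first, using each ID and centroid at most once
    for _, object_id, j in pairs:
        if object_id in used_ids or j in used_new:
            continue
        updated[object_id] = new_centroids[j]
        used_ids.add(object_id)
        used_new.add(j)

    # any new centroid left unmatched is registered under a fresh ID
    for j, n in enumerate(new_centroids):
        if j not in used_new:
            updated[next_id] = n
            next_id += 1
    return updated, next_id


existing = {0: (30, 60), 1: (230, 100)}
new_centroids = [(226, 97), (120, 300), (33, 64)]
objects, next_id = associate(existing, new_centroids, next_id=2)
print(objects)
```

In this made-up example the two existing IDs follow their nearest new centroid, and the leftover point is registered as a new object.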

In the event that an object has been lost or has left the field of view, we can simply deregister the object (Step #5).

Exactly how you handle when an object is “lost” or is “no longer visible” really depends on your exact application, but for our people counter, we will deregister people IDs when they cannot be matched to any existing person objects for 40 consecutive frames.
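The deregistration rule can be sketched with a per-object counter of consecutive missed frames. The names below are hypothetical, and whether the real tracker removes an object when the counter reaches the limit or only once it exceeds it is an assumption on my part; here the object is removed as soon as the counter reaches 40.

```python
MAX_DISAPPEARED = 40  # the 40-frame limit described above


def update_disappeared(disappeared, matched_ids):
    """Bump missed-frame counters and return the IDs to deregister.

    disappeared: dict mapping object ID -> consecutive frames without a match
    matched_ids: set of object IDs that were matched in the current frame
    """
    to_deregister = []
    for object_id in list(disappeared):
        if object_id in matched_ids:
            # seen again, so the streak of missed frames resets
            disappeared[object_id] = 0
            continue
        disappeared[object_id] += 1
        # assumption: deregister once the streak reaches the limit
        if disappeared[object_id] >= MAX_DISAPPEARED:
            del disappeared[object_id]
            to_deregister.append(object_id)
    return to_deregister


# person 7 is never matched again, person 8 is matched on every frame
disappeared = {7: 0, 8: 0}
removed_on_frame = None
for frame_number in range(1, 61):
    if 7 in update_disappeared(disappeared, matched_ids={8}):
        removed_on_frame = frame_number
        break
print(removed_on_frame, disappeared)
```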

Again, this is only a brief overview of the centroid tracking algorithm.

Note: For a more detailed review, including an explanation of the source code used to implement centroid tracking, be sure to refer to this post.

Creating a “trackable object”

In order to track and count an object in a video stream, we need an easy way to store information regarding the object itself, including:

- Its object ID

- Its previous centroids (so we can easily compute the direction the object is moving)

- Whether or not the object has already been counted

To accomplish all of these goals we can define an instance of TrackableObject — open up the trackableobject.py file and insert the following code:

class TrackableObject:
    def __init__(self, objectID, centroid):
        # store the object ID, then initialize a list of centroids
        # using the current centroid
        self.objectID = objectID
        self.centroids = [centroid]

        # initialize a boolean used to indicate if the object has
        # already been counted or not
        self.counted = False

The TrackableObject constructor accepts an objectID + centroid and stores them. The centroids variable is a list because it will contain an object’s centroid location history.

The constructor also initializes counted as False, indicating that the object has not been counted yet.
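Here is a short usage sketch of how the object is meant to evolve over a few frames. The class is repeated so the snippet runs on its own, and the centroid values are made up.

```python
class TrackableObject:
    def __init__(self, objectID, centroid):
        self.objectID = objectID
        self.centroids = [centroid]
        self.counted = False


# a new person is first seen at (250, 120)
to = TrackableObject(objectID=0, centroid=(250, 120))

# each new frame appends the latest centroid to the history
to.centroids.append((251, 131))
to.centroids.append((249, 144))

# once the person has been counted, the flag prevents counting them again
to.counted = True
print(to.objectID, len(to.centroids), to.counted)
```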

Implementing our people counter with OpenCV + Python

With all of our supporting Python helper tools and classes in place, we are now ready to build our OpenCV people counter.

Open up your people_counter.py file and insert the following code:

# import the necessary packages
from pyimagesearch.centroidtracker import CentroidTracker
from pyimagesearch.trackableobject import TrackableObject
from imutils.video import VideoStream
from imutils.video import FPS
import numpy as np
import argparse
import imutils
import time
import dlib
import cv2

We begin by importing our necessary packages:

- From the pyimagesearch module, we import our custom CentroidTracker and TrackableObject classes.

- The VideoStream and FPS modules from imutils.video will help us to work with a webcam and to calculate the estimated Frames Per Second (FPS) throughput rate.

- We need imutils for its OpenCV convenience functions.

- The dlib library will be used for its correlation tracker implementation.

- OpenCV will be used for deep neural network inference, opening video files, writing video files, and displaying output frames to our screen.

Now that all of the tools are at our fingertips, let’s parse command line arguments:

# construct the argument parse and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-p", "--prototxt", required=True,
    help="path to Caffe 'deploy' prototxt file")
ap.add_argument("-m", "--model", required=True,
    help="path to Caffe pre-trained model")
ap.add_argument("-i", "--input", type=str,
    help="path to optional input video file")
ap.add_argument("-o", "--output", type=str,
    help="path to optional output video file")
ap.add_argument("-c", "--confidence", type=float, default=0.4,
    help="minimum probability to filter weak detections")
ap.add_argument("-s", "--skip-frames", type=int, default=30,
    help="# of skip frames between detections")
args = vars(ap.parse_args())

We have six command line arguments which allow us to pass information to our people counter script from the terminal at runtime:

- --prototxt: Path to the Caffe “deploy” prototxt file.

- --model: The path to the Caffe pre-trained CNN model.

- --input: Optional input video file path. If no path is specified, your webcam will be utilized.

- --output: Optional output video path. If no path is specified, a video will not be recorded.

- --confidence: With a default value of 0.4, this is the minimum probability threshold which helps to filter out weak detections.

- --skip-frames: The number of frames to skip before running our DNN detector again on the tracked object. Remember, object detection is computationally expensive, but it does help our tracker to reassess objects in the frame. By default we skip 30 frames between detecting objects with the OpenCV DNN module and our CNN single shot detector model.
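One detail worth seeing in isolation is how argparse names the parsed values. The sketch below uses a reduced set of the arguments and passes an explicit argument list (rather than reading the terminal) purely for demonstration: the dash in --skip-frames becomes an underscore in the dictionary key, and an optional argument that is omitted comes back as None.

```python
import argparse

# a reduced version of the parser, for illustration only
ap = argparse.ArgumentParser()
ap.add_argument("-i", "--input", type=str,
    help="path to optional input video file")
ap.add_argument("-c", "--confidence", type=float, default=0.4,
    help="minimum probability to filter weak detections")
ap.add_argument("-s", "--skip-frames", type=int, default=30,
    help="# of skip frames between detections")

# parse an explicit list instead of sys.argv so the snippet is repeatable
args = vars(ap.parse_args(["--skip-frames", "15"]))
print(args)
```

This is why the script reads args["skip_frames"] later on, and why a missing --input can be treated as “use the webcam”.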

Now that our script can dynamically handle command line arguments at runtime, let’s prepare our SSD:

# initialize the list of class labels MobileNet SSD was trained to
# detect
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
    "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
    "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
    "sofa", "train", "tvmonitor"]

# load our serialized model from disk
print("[INFO] loading model...")
net = cv2.dnn.readNetFromCaffe(args["prototxt"], args["model"])

First, we’ll initialize CLASSES, the list of classes that our SSD supports. This list should not be changed if you’re using the model provided in the “Downloads”. We’re only interested in the “person” class, but you could count other moving objects as well (however, if your “pottedplant”, “sofa”, or “tvmonitor” grows legs and starts moving, you should probably run out of your house screaming rather than worrying about counting them!).

On Line 38 we load our pre-trained MobileNet SSD used to detect objects (but again, we’re just interested in detecting and tracking people, not any other class). To learn more about MobileNet and SSDs, please refer to my previous blog post.

From there we can initialize our video stream:

# if a video path was not supplied, grab a reference to the webcam
if not args.get("input", False):
    print("[INFO] starting video stream...")
    vs = VideoStream(src=0).start()
    time.sleep(2.0)

# otherwise, grab a reference to the video file
else:
    print("[INFO] opening video file...")
    vs = cv2.VideoCapture(args["input"])

First we handle the case where we’re using a webcam video stream (Lines 41-44). Otherwise, we’ll be capturing frames from a video file (Lines 47-49).

We still have a handful of initializations to perform before we begin looping over frames:

# initialize the video writer (we'll instantiate later if need be)
writer = None

# initialize the frame dimensions (we'll set them as soon as we read
# the first frame from the video)
W = None
H = None

# instantiate our centroid tracker, then initialize a list to store
# each of our dlib correlation trackers, followed by a dictionary to
# map each unique object ID to a TrackableObject
ct = CentroidTracker(maxDisappeared=40, maxDistance=50)
trackers = []
trackableObjects = {}

# initialize the total number of frames processed thus far, along
# with the total number of objects that have moved either up or down
totalFrames = 0
totalDown = 0
totalUp = 0

# start the frames per second throughput estimator
fps = FPS().start()

The remaining initializations include:

- writer: Our video writer. We’ll instantiate this object later if we are writing to video.

- W and H: Our frame dimensions. We’ll need to plug these into cv2.VideoWriter.

- ct: Our CentroidTracker. For details on the implementation of CentroidTracker, be sure to refer to my blog post from a few weeks ago.

- trackers: A list to store the dlib correlation trackers. To learn about dlib correlation tracking stay tuned for next week’s post.

- trackableObjects: A dictionary which maps an objectID to a TrackableObject.

- totalFrames: The total number of frames processed.

- totalDown and totalUp: The total number of objects/people that have moved either down or up. These variables measure the actual “people counting” results of the script.

- fps: Our frames per second estimator for benchmarking.

Note: If you get lost in the while loop below, you should refer back to this bulleted listing of important variables.

Now that all of our initializations are taken care of, let’s loop over incoming frames:

# loop over frames from the video stream
while True:
    # grab the next frame and handle if we are reading from either
    # VideoCapture or VideoStream
    frame = vs.read()
    frame = frame[1] if args.get("input", False) else frame

    # if we are viewing a video and we did not grab a frame then we
    # have reached the end of the video
    if args["input"] is not None and frame is None:
        break

    # resize the frame to have a maximum width of 500 pixels (the
    # less data we have, the faster we can process it), then convert
    # the frame from BGR to RGB for dlib
    frame = imutils.resize(frame, width=500)
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

    # if the frame dimensions are empty, set them
    if W is None or H is None:
        (H, W) = frame.shape[:2]

    # if we are supposed to be writing a video to disk, initialize
    # the writer
    if args["output"] is not None and writer is None:
        fourcc = cv2.VideoWriter_fourcc(*"MJPG")
        writer = cv2.VideoWriter(args["output"], fourcc, 30,
            (W, H), True)

We begin looping on Line 76. At the top of the loop we grab the next frame (Lines 79 and 80). In the event that we’ve reached the end of the video, we’ll break out of the loop (Lines 84 and 85).

Preprocessing the frame takes place on Lines 90 and 91. This includes resizing and swapping color channels as dlib requires an rgb image.

We grab the dimensions of the frame for the video writer (Lines 94 and 95).

From there we’ll instantiate the video writer if an output path was provided via command line argument (Lines 99-102). To learn more about writing video to disk, be sure to refer to this post.

Now let’s detect people using the SSD:

    # initialize the current status along with our list of bounding
    # box rectangles returned by either (1) our object detector or
    # (2) the correlation trackers
    status = "Waiting"
    rects = []

    # check to see if we should run a more computationally expensive
    # object detection method to aid our tracker
    if totalFrames % args["skip_frames"] == 0:
        # set the status and initialize our new set of object trackers
        status = "Detecting"
        trackers = []

        # convert the frame to a blob and pass the blob through the
        # network and obtain the detections
        blob = cv2.dnn.blobFromImage(frame, 0.007843, (W, H), 127.5)
        net.setInput(blob)
        detections = net.forward()

We initialize a status as “Waiting” on Line 107. Possible status states include:

- Waiting: In this state, we’re waiting on people to be detected and tracked.

- Detecting: We’re actively in the process of detecting people using the MobileNet SSD.

- Tracking: People are being tracked in the frame and we’re counting the totalUp and totalDown.

Our rects list will be populated either via detection or tracking. We go ahead and initialize rects on Line 108.

It’s important to understand that deep learning object detectors are very computationally expensive, especially if you are running them on your CPU.

To avoid running our object detector on every frame, and to speed up our tracking pipeline, we’ll be skipping every N frames (set by command line argument --skip-frames where 30 is the default). Only every N frames will we exercise our SSD for object detection. Otherwise, we’ll simply be tracking moving objects in-between.

Using the modulo operator on Line 112 we ensure that we’ll only execute the code in the if-statement every N frames.

Assuming we’ve landed on a multiple of skip_frames, we’ll update the status to “Detecting” (Line 114).

Then we initialize our new list of trackers (Line 115).

Next, we’ll perform inference via object detection. We begin by creating a blob from the image, followed by passing the blob through the net to obtain detections (Lines 119-121).
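The two numeric arguments in the blobFromImage call are easier to read once the arithmetic is written out. The sketch below assumes, as OpenCV documents it, that the mean (127.5) is subtracted first and the result is then multiplied by the scale factor (0.007843, roughly 1 / 127.5). The helper name normalize_pixel is hypothetical and works on a single intensity value rather than a whole image.

```python
SCALE = 0.007843  # approximately 1 / 127.5
MEAN = 127.5


def normalize_pixel(value):
    """Apply mean subtraction followed by scaling to one 8-bit intensity."""
    return (value - MEAN) * SCALE


# the darkest, middle, and brightest intensities of an 8-bit image
for value in (0, 127.5, 255):
    print(value, round(normalize_pixel(value), 3))
```

In other words, the network sees pixel values in roughly the range [-1, 1] instead of [0, 255].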

Now we’ll loop over each of the detections in hopes of finding objects belonging to the “person” class:

        # loop over the detections
        for i in np.arange(0, detections.shape[2]):
            # extract the confidence (i.e., probability) associated
            # with the prediction
            confidence = detections[0, 0, i, 2]

            # filter out weak detections by requiring a minimum
            # confidence
            if confidence > args["confidence"]:
                # extract the index of the class label from the
                # detections list
                idx = int(detections[0, 0, i, 1])

                # if the class label is not a person, ignore it
                if CLASSES[idx] != "person":
                    continue

Looping over detections on Line 124, we proceed to grab the confidence (Line 127) and filter out weak results + those that don’t belong to the “person” class (Lines 131-138).
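To make the two filters concrete, here is a plain-Python sketch that mimics the layout of a single detection as it is indexed above: position 1 holds the class index, position 2 the confidence, and positions 3 to 6 the normalized box. The rows and the function name keep_people are made up for illustration; the real detections array comes from net.forward().

```python
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
    "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
    "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
    "sofa", "train", "tvmonitor"]


def keep_people(rows, min_confidence):
    """Return the rows that are confident 'person' detections."""
    kept = []
    for row in rows:
        # filter out weak detections first
        if row[2] <= min_confidence:
            continue
        # then ignore every class other than 'person'
        if CLASSES[int(row[1])] != "person":
            continue
        kept.append(row)
    return kept


rows = [
    [0, 15, 0.92, 0.10, 0.20, 0.30, 0.80],  # confident person: kept
    [0, 15, 0.25, 0.50, 0.20, 0.60, 0.70],  # weak person: dropped
    [0, 7, 0.88, 0.40, 0.50, 0.90, 0.95],   # confident car: dropped
]
people = keep_people(rows, min_confidence=0.4)
print(len(people))
```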

Now we can compute a bounding box for each person and begin correlation tracking:

                # compute the (x, y)-coordinates of the bounding box
                # for the object
                box = detections[0, 0, i, 3:7] * np.array([W, H, W, H])
                (startX, startY, endX, endY) = box.astype("int")

                # construct a dlib rectangle object from the bounding
                # box coordinates and then start the dlib correlation
                # tracker
                tracker = dlib.correlation_tracker()
                rect = dlib.rectangle(startX, startY, endX, endY)
                tracker.start_track(rgb, rect)

                # add the tracker to our list of trackers so we can
                # utilize it during skip frames
                trackers.append(tracker)

Computing our bounding box takes place on Lines 142 and 143.
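The box computation is just a rescaling from normalized coordinates to pixels. A plain-Python equivalent of the NumPy expression might look like the following; scale_box is a hypothetical name and the input values are made up.

```python
def scale_box(normalized_box, W, H):
    """Convert a normalized (x1, y1, x2, y2) box to integer pixel coordinates."""
    (x1, y1, x2, y2) = normalized_box
    # x-values scale with the frame width, y-values with the frame height;
    # int() truncates, much like astype("int") does in the NumPy version
    return (int(x1 * W), int(y1 * H), int(x2 * W), int(y2 * H))


box = scale_box((0.125, 0.25, 0.5, 0.75), W=500, H=280)
print(box)
```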

Then we instantiate our dlib correlation tracker on Line 148, followed by passing in the object’s bounding box coordinates to dlib.rectangle, storing the result as rect (Line 149).

Subsequently, we start tracking on Line 150 and append the tracker to the trackers list on Line 154.

That’s a wrap for all operations we do every N skip-frames!

Let’s take care of the typical operations where tracking is taking place in the else block:

    # otherwise, we should utilize our object *trackers* rather than
    # object *detectors* to obtain a higher frame processing throughput
    else:
        # loop over the trackers
        for tracker in trackers:
            # set the status of our system to be 'tracking' rather
            # than 'waiting' or 'detecting'
            status = "Tracking"

            # update the tracker and grab the updated position
            tracker.update(rgb)
            pos = tracker.get_position()

            # unpack the position object
            startX = int(pos.left())
            startY = int(pos.top())
            endX = int(pos.right())
            endY = int(pos.bottom())

            # add the bounding box coordinates to the rectangles list
            rects.append((startX, startY, endX, endY))

Most of the time, we aren’t landing on a skip-frame multiple. During this time, we’ll utilize our trackers to track our object rather than applying detection.

We begin looping over the available trackers on Line 160.

We proceed to update the status to “Tracking” (Line 163) and grab the object position (Lines 166 and 167).

From there we extract the position coordinates (Lines 170-173) followed by populating the information in our rects list.

Now let’s draw a horizontal visualization line (that people must cross in order to be tracked) and use the centroid tracker to update our object centroids:

    # draw a horizontal line in the center of the frame -- once an
    # object crosses this line we will determine whether they were
    # moving 'up' or 'down'
    cv2.line(frame, (0, H // 2), (W, H // 2), (0, 255, 255), 2)

    # use the centroid tracker to associate the (1) old object
    # centroids with (2) the newly computed object centroids
    objects = ct.update(rects)

On Line 181 we draw the horizontal line which we’ll be using to visualize people “crossing”. Once people cross this line we’ll increment our respective counters.

Then on Line 185, we utilize our CentroidTracker instantiation to accept the list of rects, regardless of whether they were generated via object detection or object tracking. Our centroid tracker will associate object IDs with object locations.

In this next block, we’ll review the logic which counts if a person has moved up or down through the frame:

    # loop over the tracked objects
    for (objectID, centroid) in objects.items():
        # check to see if a trackable object exists for the current
        # object ID
        to = trackableObjects.get(objectID, None)

        # if there is no existing trackable object, create one
        if to is None:
            to = TrackableObject(objectID, centroid)

        # otherwise, there is a trackable object so we can utilize it
        # to determine direction
        else:
            # the difference between the y-coordinate of the *current*
            # centroid and the mean of *previous* centroids will tell
            # us in which direction the object is moving (negative for
            # 'up' and positive for 'down')
            y = [c[1] for c in to.centroids]
            direction = centroid[1] - np.mean(y)
            to.centroids.append(centroid)

            # check to see if the object has been counted or not
            if not to.counted:
                # if the direction is negative (indicating the object
                # is moving up) AND the centroid is above the center
                # line, count the object
                if direction < 0 and centroid[1] < H // 2:
                    totalUp += 1
                    to.counted = True

                # if the direction is positive (indicating the object
                # is moving down) AND the centroid is below the
                # center line, count the object
                elif direction > 0 and centroid[1] > H // 2:
                    totalDown += 1
                    to.counted = True

        # store the trackable object in our dictionary
        trackableObjects[objectID] = to

We begin by looping over the object IDs and their updated centroids returned by the centroid tracker (Line 188).

On Line 191 we attempt to fetch a TrackableObject for the current objectID.

If the TrackableObject doesn’t exist for the objectID, we create one (Lines 194 and 195).

Otherwise, there is already an existing TrackableObject, so we need to figure out if the object (person) is moving up or down.

To do so, we grab the y-coordinate value for all previous centroid locations for the given object (Line 204). Then we compute the direction by taking the difference between the current centroid location and the mean of all previous centroid locations (Line 205).

The reason we take the mean is to ensure our direction tracking is more stable. If we stored just the previous centroid location for the person we leave ourselves open to the possibility of false direction counting. Keep in mind that object detection and object tracking algorithms are not “magic” — sometimes they will predict bounding boxes that may be slightly off what you may expect; therefore, by taking the mean, we can make our people counter more accurate.
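A small made-up example shows why the mean helps. Suppose a person is walking steadily down the frame, but on the latest frame the predicted box jitters upward by a few pixels. The helper name direction_from_history below is hypothetical.

```python
from statistics import mean


def direction_from_history(previous_ys, current_y):
    """Negative means moving up, positive means moving down."""
    return current_y - mean(previous_ys)


# y-coordinates of a person walking down the frame
previous_ys = [100, 110, 120, 135]
current_y = 132  # the box jittered up by 3 pixels on this frame

# comparing against only the last centroid gives the wrong sign ("up")
versus_last = current_y - previous_ys[-1]

# comparing against the mean of the history still says "down"
versus_mean = direction_from_history(previous_ys, current_y)
print(versus_last, versus_mean)
```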

If the TrackableObject has not been counted (Line 209), we need to determine if it’s ready to be counted yet (Lines 213-222), by:

- Checking if the direction is negative (indicating the object is moving Up) AND the centroid is Above the centerline. In this case we increment totalUp.

- Or checking if the direction is positive (indicating the object is moving Down) AND the centroid is Below the centerline. If this is true, we increment totalDown.

Finally, we store the TrackableObject in our trackableObjects dictionary (Line 225) so we can grab and update it when the next frame is captured.
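The counting rule itself can be isolated into a small function for experimenting. The name classify_crossing and the sample values are hypothetical; the conditions mirror the ones described above, with the centerline at H // 2.

```python
def classify_crossing(direction, centroid_y, frame_height):
    """Return 'up', 'down', or None if the object should not be counted yet."""
    center = frame_height // 2
    # moving up AND already above the centerline
    if direction < 0 and centroid_y < center:
        return "up"
    # moving down AND already below the centerline
    if direction > 0 and centroid_y > center:
        return "down"
    # otherwise the object has not crossed in its direction of travel
    return None


H = 280  # an illustrative frame height, so the centerline sits at y = 140
print(classify_crossing(-12.0, 120, H))
print(classify_crossing(9.5, 160, H))
print(classify_crossing(9.5, 120, H))
```

The last call is the interesting one: the person is moving down but is still above the line, so nothing is counted until a later frame.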

We’re on the home-stretch!

The next three code blocks handle:

- Display (drawing and writing text to the frame)

- Writing frames to a video file on disk (if the --output command line argument is present)

- Capturing keypresses

- Cleanup

First we’ll draw some information on the frame for visualization:

        # draw both the ID of the object and the centroid of the
        # object on the output frame
        text = "ID {}".format(objectID)
        cv2.putText(frame, text, (centroid[0] - 10, centroid[1] - 10),
            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)
        cv2.circle(frame, (centroid[0], centroid[1]), 4, (0, 255, 0), -1)

    # construct a tuple of information we will be displaying on the
    # frame
    info = [
        ("Up", totalUp),
        ("Down", totalDown),
        ("Status", status),
    ]

    # loop over the info tuples and draw them on our frame
    for (i, (k, v)) in enumerate(info):
        text = "{}: {}".format(k, v)
        cv2.putText(frame, text, (10, H - ((i * 20) + 20)),
            cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2)

Here we overlay the following data on the frame:

- ObjectID: Each object’s numerical identifier.

- centroid: The center of the object will be represented by a “dot” which is created by filling in a circle.

- info: Includes totalUp, totalDown, and status

For a review of drawing operations, be sure to refer to this blog post.

Then we’ll write the frame to a video file (if necessary) and handle keypresses:

    # check to see if we should write the frame to disk
    if writer is not None:
        writer.write(frame)

    # show the output frame
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

    # increment the total number of frames processed thus far and
    # then update the FPS counter
    totalFrames += 1
    fps.update()

In this block we:

- Write the frame, if necessary, to the output video file (Lines 249 and 250)

- Display the frame and handle keypresses (Lines 253-258). If “q” is pressed, we break out of the frame processing loop.

- Update our fps counter (Line 263)

We didn’t make too much of a mess, but now it’s time to clean up:

# stop the timer and display FPS information
fps.stop()
print("[INFO] elapsed time: {:.2f}".format(fps.elapsed()))
print("[INFO] approx. FPS: {:.2f}".format(fps.fps()))

# check to see if we need to release the video writer pointer
if writer is not None:
    writer.release()

# if we are not using a video file, stop the camera video stream
if not args.get("input", False):
    vs.stop()

# otherwise, release the video file pointer
else:
    vs.release()

# close any open windows
cv2.destroyAllWindows()

To finish out the script, we display the FPS info to the terminal, release all pointers, and close any open windows.

Just 283 lines of code later, we are now done.

People counting results

To see our OpenCV people counter in action, make sure you use the “Downloads” section of this blog post to download the source code and example videos.

From there, open up a terminal and execute the following command:

$ python people_counter.py --prototxt mobilenet_ssd/MobileNetSSD_deploy.prototxt \
    --model mobilenet_ssd/MobileNetSSD_deploy.caffemodel \
    --input videos/example_01.mp4 --output output/output_01.avi
[INFO] loading model...
[INFO] opening video file...
[INFO] elapsed time: 37.27
[INFO] approx. FPS: 34.42

Here you can see that our person counter is counting the number of people who:

- Are entering the department store (down)

- And the number of people who are leaving (up)

At the end of the first video you’ll see there have been 7 people who entered and 3 people who have left.

Furthermore, examining the terminal output you’ll see that our person counter is capable of running in real-time, obtaining 34 FPS throughout. This is despite the fact that we are using a deep learning object detector for more accurate person detections.

Our 34 FPS throughput rate is made possible through our two-phase process of:

- Detecting people once every 30 frames

- And then applying a faster, more efficient object tracking algorithm in all frames in between.
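The two-phase schedule above can be sketched in a few lines. The detector and tracker here are stubs that only count how often they are called; `run_two_phase`, `stub_detect`, and `stub_track` are hypothetical names used for illustration, and the default of 30 skipped frames mirrors the value quoted above.

```python
def run_two_phase(frames, detect, track, skip_frames=30):
    """Run the expensive detector every skip_frames frames and the
    cheaper tracker on every frame in between."""
    phases = []
    boxes = []
    for index, frame in enumerate(frames):
        if index % skip_frames == 0:
            boxes = detect(frame)        # detection phase: (re)seed trackers
            phases.append("detect")
        else:
            boxes = track(frame, boxes)  # tracking phase: update known boxes
            phases.append("track")
    return phases


calls = {"detect": 0, "track": 0}


def stub_detect(frame):
    # stand-in for a deep learning detector
    calls["detect"] += 1
    return [(0, 0, 10, 10)]


def stub_track(frame, boxes):
    # stand-in for a lightweight tracker update
    calls["track"] += 1
    return boxes


phases = run_two_phase(range(90), stub_detect, stub_track, skip_frames=30)
print(calls)
```

With 90 frames and a skip of 30, the detector runs on only 3 frames while the tracker handles the other 87, which is where the speedup comes from.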

Another example of people counting with OpenCV can be seen below:

$ python people_counter.py --prototxt mobilenet_ssd/MobileNetSSD_deploy.prototxt \
    --model mobilenet_ssd/MobileNetSSD_deploy.caffemodel \
    --input videos/example_02.mp4 --output output/output_02.avi
[INFO] loading model...
[INFO] opening video file...
[INFO] elapsed time: 36.88
[INFO] approx. FPS: 34.79

Here is a full video of the demo:

This time there have been 2 people who have entered the department store and 14 people who have left.

You can see how useful this system would be to a store owner interested in foot traffic analytics.

The same type of system we used to count foot traffic can also be used to count automobile traffic with OpenCV, and I hope to cover that topic in a future blog post.

Additionally, a big thank you to David McDuffee for recording the example videos used here today! David works here with me at PyImageSearch and if you’ve ever emailed PyImageSearch before, you have very likely interacted with him. Thank you for making this post possible, David! Also a thank you to BenSound for providing the music for the video demos included in this post.

Improving our people counter application

In order to build our OpenCV people counter we utilized dlib’s correlation tracker. This method is easy to use and requires very little code.

However, our implementation is a bit inefficient — in order to track multiple objects we need to create multiple instances of the correlation tracker object. And then when we need to compute the location of the object in subsequent frames, we need to loop over all N object trackers and grab the updated position.

All of this computation would take place in the main execution thread of our script which thereby slows down our FPS rate.
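To see where the cost comes from, here is a minimal sketch of the sequential update loop. `StubTracker` is a hypothetical stand-in that only shifts a box vertically; its `update` and `get_position` method names are chosen to resemble a per-object tracker, and the "frame" is reduced to a single integer shift for illustration.

```python
class StubTracker:
    """Hypothetical per-object tracker (illustration only)."""

    def __init__(self, box):
        self.box = box  # (start_x, start_y, end_x, end_y)

    def update(self, frame_shift):
        # pretend the object moved frame_shift pixels vertically
        x1, y1, x2, y2 = self.box
        self.box = (x1, y1 + frame_shift, x2, y2 + frame_shift)

    def get_position(self):
        return self.box


def update_all(trackers, frame_shift):
    """Update every tracker one after another in the calling thread."""
    positions = []
    for tracker in trackers:  # N sequential updates per frame
        tracker.update(frame_shift)
        positions.append(tracker.get_position())
    return positions


trackers = [StubTracker((0, 0, 10, 10)), StubTracker((5, 5, 15, 15))]
positions = update_all(trackers, 2)
print(positions)
```

The time spent per frame grows with the number of tracked objects, since every update waits for the previous one to finish.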

An easy way to improve performance would therefore be to use multi-object tracking with dlib. My tutorial on that topic covers how to use multiprocessing and queues such that our FPS rate improves by 45%!

Note: OpenCV also implements multi-object tracking, but not with multiple processes (at least at the time of this writing). OpenCV’s multi-object method is certainly far easier to use, but without the multiprocessing capability, it doesn’t help much in this instance.
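The multiprocessing-and-queues idea mentioned above can be sketched with the standard library alone. This is an illustration under stated assumptions, not the code from the multi-object tracking tutorial: each tracked object gets its own worker process with an input and an output queue, the "tracker" is a stub that shifts a box vertically, and `tracker_worker` and `track_in_processes` are hypothetical names.

```python
import multiprocessing as mp


def tracker_worker(label, box, input_queue, output_queue):
    """Own one object's state and update it for every frame received."""
    x1, y1, x2, y2 = box
    while True:
        frame_shift = input_queue.get()
        if frame_shift is None:  # sentinel: no more frames
            break
        # stub update: shift the box vertically by frame_shift pixels
        y1 += frame_shift
        y2 += frame_shift
        output_queue.put((label, (x1, y1, x2, y2)))


def track_in_processes(boxes, frames, start_method=None):
    """Spawn one worker per object and fan each frame out to all of them."""
    ctx = mp.get_context(start_method)
    workers = []
    for label, box in boxes.items():
        input_queue, output_queue = ctx.Queue(), ctx.Queue()
        process = ctx.Process(
            target=tracker_worker,
            args=(label, box, input_queue, output_queue),
            daemon=True,
        )
        process.start()
        workers.append((process, input_queue, output_queue))

    history = []
    for frame in frames:
        # hand the frame to every worker so they can update concurrently
        for _, input_queue, _ in workers:
            input_queue.put(frame)
        # then collect one updated position from each worker
        history.append(
            dict(output_queue.get(timeout=10) for _, _, output_queue in workers)
        )

    # tell every worker to exit and wait for it
    for process, input_queue, _ in workers:
        input_queue.put(None)
        process.join()
    return history


if __name__ == "__main__":
    start_boxes = {"person-1": (0, 0, 10, 10), "person-2": (5, 5, 15, 15)}
    for positions in track_in_processes(start_boxes, frames=[1, 2]):
        print(positions)
```

The main process only distributes frames and gathers results, so the per-object work can run on separate cores. Real frames are much larger than the integers used here, so the cost of passing them through queues is something to measure before relying on this pattern.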

Finally, for even higher tracking accuracy (but at the expense of speed without a fast GPU), you can look into deep learning-based object trackers, such as Deep SORT, introduced by Wojke et al. in their paper, Simple Online and Realtime Tracking with a Deep Association Metric.

This method is very popular for deep learning-based object tracking and has been implemented in multiple Python libraries. I would suggest starting with this implementation.

Summary

In today’s blog post we learned how to build a people counter using OpenCV and Python.

Our implementation:

- Is capable of running in real-time on a standard CPU

- Utilizes deep learning object detectors for improved person detection accuracy

- Leverages two separate object tracking algorithms, including both centroid tracking and correlation filters for improved tracking accuracy

- Applies both a “detection” and “tracking” phase, making it capable of (1) detecting new people and (2) picking up people that may have been “lost” during the tracking phase

I hope you enjoyed today’s post on people counting with OpenCV!

To download the code to this blog post (and apply people counting to your own projects), just enter your email address in the form below!

Download the Source Code and FREE 17-page Resource Guide

Enter your email address below to get a .zip of the code and a FREE 17-page Resource Guide on Computer Vision, OpenCV, and Deep Learning. Inside you'll find my hand-picked tutorials, books, courses, and libraries to help you master CV and DL!