In this tutorial, you will learn how to build a Raspberry Pi security camera using OpenCV and computer vision. The Pi security camera will be IoT capable, making it possible for our Raspberry Pi to send TXT/MMS message notifications, images, and video clips when the security camera is triggered.

Back in my undergrad years, I had an obsession with hummus. Hummus and pita/vegetables were my lunch of choice.

I loved it.

I lived on it.

And I was very protective of my hummus — college kids are notorious for raiding each other’s fridges and stealing each other’s food. No one was to touch my hummus.

But — I was a victim of such hummus theft on more than one occasion…and I never forgot it!

I never figured out who stole my hummus, and even though my wife and I are the only ones who live in our house, I often hide the hummus in the back of the fridge (where no one will look) or under fruits and vegetables (which most people wouldn’t want to eat).

Of course, back then I wasn’t as familiar with computer vision and OpenCV as I am now. Had I known what I do at present, I would have built a Raspberry Pi security camera to capture the hummus heist in action!

Today I’m channeling my inner undergrad self and laying the chickpea bandit to rest. And if he ever returns, beware: my fridge is monitored!

To learn how to build a security camera with a Raspberry Pi and OpenCV, just keep reading!

Building a Raspberry Pi security camera with OpenCV

In the first part of this tutorial, we’ll briefly review how we are going to build an IoT-capable security camera with the Raspberry Pi.

Next, we’ll review our project/directory structure and install the libraries/packages to successfully build the project.

We’ll also briefly review both Amazon AWS/S3 and Twilio, two services that when used together will enable us to:

- Upload an image/video clip when the security camera is triggered.

- Send the image/video clip directly to our smartphone via text message.

From there we’ll implement the source code for the project.

And finally, we’ll put all the pieces together and put our Raspberry Pi security camera into action!

An IoT security camera with the Raspberry Pi

We’ll be building a very simple IoT security camera with the Raspberry Pi and OpenCV.

The security camera will be capable of recording a video clip when the camera is triggered, uploading the video clip to the cloud, and then sending a TXT/MMS message which includes the video itself.

We’ll be building this project specifically with the goal of detecting when a refrigerator is opened and when the fridge is closed — everything in between will be captured and recorded.

Therefore, this security camera will work best in the same “open” and “closed” environment where there is a large difference in light. For example, you could also deploy this inside a mailbox that opens/closes.

You can easily extend this method to work with other forms of detection, including simple motion detection and home surveillance, object detection, and more. I’ll leave that as an exercise for you, the reader, to implement — in that case, you can use this project as a “template” for implementing any additional computer vision functionality.

Project structure

Go ahead and grab the “Downloads” for today’s blog post.

Once you’ve unzipped the files, you’ll be presented with the following directory structure:

    $ tree --dirsfirst
    .
    ├── config
    │   └── config.json
    ├── pyimagesearch
    │   ├── notifications
    │   │   ├── __init__.py
    │   │   └── twilionotifier.py
    │   ├── utils
    │   │   ├── __init__.py
    │   │   └── conf.py
    │   └── __init__.py
    └── detect.py

    4 directories, 7 files

Today we’ll be reviewing four files:

- config/config.json: This commented JSON file holds our configuration. I’m providing you with this file, but you’ll need to insert your API keys for both Twilio and S3.

- pyimagesearch/notifications/twilionotifier.py: Contains the TwilioNotifier class for sending SMS/MMS messages. This is the same exact class I use for sending text, picture, and video messages with Python inside my upcoming Raspberry Pi book.

- pyimagesearch/utils/conf.py: The Conf class is responsible for loading the commented JSON configuration.

- detect.py: The heart of today’s project is contained in this driver script. It watches for significant light change, starts recording video, and alerts me when someone steals my hummus or anything else I’m hiding in the fridge.

Now that we understand the directory structure and files therein, let’s move on to configuring our machine and learning about S3 + Twilio. From there, we’ll begin reviewing the four key files in today’s project.

Installing package/library prerequisites

Today’s project requires that you install a handful of Python libraries on your Raspberry Pi.

In my upcoming book, all of these packages will be preinstalled in a custom Raspbian image. All you’ll have to do is download the Raspbian .img file, flash it to your micro-SD card, and boot! From there you’ll have a pre-configured dev environment with all the computer vision + deep learning libraries you need!

Note: If you want my custom Raspbian images right now (with both OpenCV 3 and OpenCV 4), you should grab a copy of either the Quickstart Bundle or Hardcopy Bundle of Practical Python and OpenCV + Case Studies which includes the Raspbian .img file.

This introductory book will also teach you OpenCV fundamentals so that you can learn how to confidently build your own projects. These fundamentals and concepts will go a long way if you’re planning to grab my upcoming Raspberry Pi for Computer Vision book.

In the meantime, you can get by with this minimal installation of packages to replicate today’s project:

- opencv-contrib-python: The OpenCV library.

- imutils: My package of convenience functions and classes.

- twilio: The Twilio package allows you to send text/picture/video messages.

- boto3: The boto3 package will communicate with the Amazon S3 file storage service. Our videos will be stored in S3.

- json-minify: Allows for commented JSON files (because we all love documentation!)

To install these packages, I recommend that you follow my pip install opencv guide to set up a Python virtual environment.

You can then pip install all required packages:

    $ workon <env_name> # insert your environment name such as cv or py3cv4
    $ pip install opencv-contrib-python
    $ pip install imutils
    $ pip install twilio
    $ pip install boto3
    $ pip install json-minify

Now that our environment is configured, each time you want to activate it, simply use the workon command.

Let’s review S3, boto3, and Twilio!

What is Amazon AWS and S3?

Amazon Web Services (AWS) has a service called Simple Storage Service, commonly known as S3.

S3 is a highly popular service used for storing files. I actually use it to host some larger files such as GIFs on this blog.

Today we’ll be using S3 to host our video files generated by the Raspberry Pi Security camera.

S3 is organized by “buckets”. A bucket contains files and folders. It also can be set up with custom permissions and security settings.

A package called boto3 will help us to transfer the files from our Internet of Things Raspberry Pi to AWS S3.

Before we dive into boto3 , we need to set up an S3 bucket.

Let’s go ahead and create a bucket, resource group, and user. We’ll give the resource group permissions to access the bucket and then we’ll add the user to the resource group.

Step #1: Create a bucket

Amazon has great documentation on how to create an S3 bucket here.

Step #2: Create a resource group + user. Add the user to the resource group.

After you create your bucket, you’ll need to create an IAM user + resource group and define permissions.

- Visit the resource groups page to create a group. I named my example “s3pi”.

- Visit the users page to create a user. I named my example “raspberrypisecurity”.

Step #3: Grab your access keys. You’ll need to paste them into today’s config file.

Watch these slides to walk you through Steps 1-3, but refer to the documentation as well because slides become out of date rapidly:

Obtaining your Twilio API keys

Twilio, a phone number service with an API, allows for voice, SMS, MMS, and more.

Twilio will serve as the bridge between our Raspberry Pi and our cell phone. I want to know exactly when the chickpea bandit is opening my fridge so that I can take countermeasures.

Let’s set up Twilio now.

Step #1: Create an account and get a free number.

Go ahead and sign up for Twilio and you’ll be assigned a temporary trial number. You can purchase a number + quota later if you choose to do so.

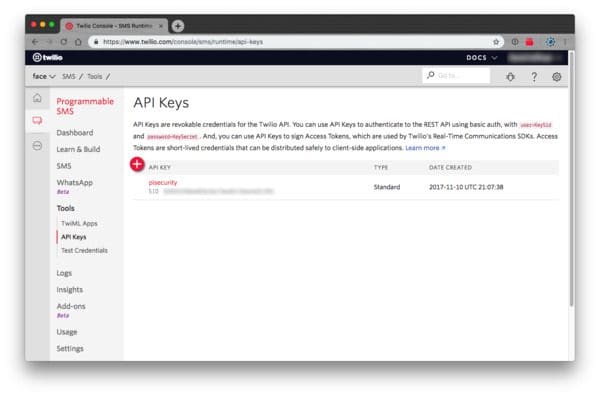

Step #2: Grab your API keys.

Now we need to obtain our API keys. Here’s a screenshot showing where to create one and copy it:

A final note about Twilio is that it does support the popular WhatsApp messaging platform. Support for WhatsApp is welcomed by the international community, but it is currently in Beta. Today we’ll be demonstrating standard SMS/MMS only. I’ll leave it up to you to explore Twilio in conjunction with WhatsApp.

Our JSON configuration file

There are a number of variables that need to be specified for this project, and instead of hardcoding them, I decided to keep our code more modular and organized by putting them in a dedicated JSON configuration file.

Since JSON doesn’t natively support comments, our Conf class will take advantage of JSON-minify to parse out the comments. If JSON isn’t your config file of choice, you can try YAML or XML as well.

Let’s take a look at the commented JSON file now:

    {
        // two constants, first threshold for detecting if the
        // refrigerator is open, and a second threshold for the number of
        // seconds the refrigerator is open
        "thresh": 50,
        "open_threshold_seconds": 60,

Lines 5 and 6 contain two settings. The first is the light threshold for determining when the refrigerator is open. The second is a threshold for the number of seconds until it is determined that someone left the door open.

Now let’s handle AWS + S3 configs:

        // variables to store your aws account credentials
        "aws_access_key_id": "YOUR_AWS_ACCESS_KEY_ID",
        "aws_secret_access_key": "YOUR_AWS_SECRET_ACCESS_KEY",
        "s3_bucket": "YOUR_AWS_S3_BUCKET",

Each of the values on Lines 9-11 is available in your AWS console (we just generated them in the “What is Amazon AWS and S3?” section above).

And finally our Twilio configs:

        // variables to store your twilio account credentials
        "twilio_sid": "YOUR_TWILIO_SID",
        "twilio_auth": "YOUR_TWILIO_AUTH_ID",
        "twilio_to": "YOUR_PHONE_NUMBER",
        "twilio_from": "YOUR_TWILIO_PHONE_NUMBER"
    }

Twilio security settings are on Lines 14 and 15. The "twilio_from" value must match one of your Twilio phone numbers. If you’re using the trial, you only have one number. If you use the wrong number, are out of quota, etc., Twilio will likely send an error message to your email address.

Phone numbers can be formatted like this in the U.S.: "+1-555-555-5555" .

Loading the JSON configuration file

Our configuration file includes comments (for documentation purposes). Unfortunately, that means we cannot load it directly with Python’s built-in json package, which rejects files that contain comments.

Instead, we’ll use a combination of JSON-minify and a custom Conf class to load our JSON file as a Python dictionary.
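To see why the built-in parser alone is not enough, here is a minimal sketch that uses only the standard library. The strip_line_comments helper is hypothetical and far cruder than JSON-minify (it only drops whole lines that start with //); it is here purely to illustrate the idea of removing comments before parsing.

```python
import json

commented = """{
    // light threshold for deciding the fridge is open
    "thresh": 50,
    "open_threshold_seconds": 60
}"""

# the standard json module rejects the // comment outright
try:
    json.loads(commented)
    parsed_with_comments = True
except json.JSONDecodeError:
    parsed_with_comments = False

def strip_line_comments(text):
    # hypothetical helper: drop whole lines that start with //
    # (a real minifier must also cope with trailing and block comments)
    kept = [ln for ln in text.splitlines()
            if not ln.strip().startswith("//")]
    return "\n".join(kept)

conf = json.loads(strip_line_comments(commented))
print(parsed_with_comments, conf["thresh"])
```

Once the comment lines are gone, the remaining text is ordinary JSON and parses into a regular Python dictionary.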

Let’s take a look at how to implement the Conf class now:

    # import the necessary packages
    from json_minify import json_minify
    import json

    class Conf:
        def __init__(self, confPath):
            # load and store the configuration and update the object's
            # dictionary
            conf = json.loads(json_minify(open(confPath).read()))
            self.__dict__.update(conf)

        def __getitem__(self, k):
            # return the value associated with the supplied key
            return self.__dict__.get(k, None)

This class is relatively straightforward. Notice that in the constructor, we use json_minify (Line 9) to parse out the comments prior to passing the file contents to json.loads .

The __getitem__ method will grab any value from the configuration with dictionary syntax. In other words, we won’t call this method directly — rather, we’ll simply use dictionary syntax in Python to grab a value associated with a given key.
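As a quick illustration of that dictionary syntax, the sketch below uses DictConf, a hypothetical stand-in that mirrors Conf but accepts an already-parsed dictionary, so it runs without a config file on disk.

```python
class DictConf:
    # hypothetical stand-in for Conf: same lookup logic, but built
    # from a dict instead of a commented JSON file
    def __init__(self, values):
        self.__dict__.update(values)

    def __getitem__(self, k):
        # return the value for the key, or None if it is absent
        return self.__dict__.get(k, None)

conf = DictConf({"thresh": 50, "open_threshold_seconds": 60})

thresh = conf["thresh"]          # dictionary syntax calls __getitem__
also_thresh = conf.thresh        # keys are plain attributes as well
missing = conf["no_such_key"]    # None rather than a KeyError
```

Note the last line: a misspelled key silently yields None, so a typo in a key name will not raise an error at lookup time.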

Uploading key video clips and sending them via text message

Once our security camera is triggered we’ll need methods to:

- Upload the images/video to the cloud (since the Twilio API cannot directly serve “attachments”).

- Utilize the Twilio API to actually send the text message.

To keep our code neat and organized we’ll be encapsulating this functionality inside a class named TwilioNotifier — let’s review this class now:

    # import the necessary packages
    from twilio.rest import Client
    import boto3
    from threading import Thread

    class TwilioNotifier:
        def __init__(self, conf):
            # store the configuration object
            self.conf = conf

        def send(self, msg, tempVideo):
            # start a thread to upload the file and send it
            t = Thread(target=self._send, args=(msg, tempVideo,))
            t.start()

On Lines 2-4, we import the Twilio Client , Amazon’s boto3 , and Python’s built-in Thread .

From there, our TwilioNotifier class and constructor are defined on Lines 6-9. Our constructor accepts a single parameter, the configuration, which we presume has been loaded from disk via the Conf class.

This project only demonstrates sending messages. We’ll be demonstrating receiving messages with Twilio in an upcoming blog post as well as in the Raspberry Pi Computer Vision book.

The send method is defined on Lines 11-14. This method accepts two key parameters:

- The string text, msg

- The video file, tempVideo. Once the video is successfully stored in S3, it will be removed from the Pi to save space. Hence it is a temporary video.

The send method kicks off a Thread to actually send the message, ensuring the main thread of execution is not blocked.
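The effect of handing the work to a thread can be sketched with a stand-in task. Here slow_upload is a hypothetical placeholder for the S3 upload and Twilio request; it simply sleeps for half a second.

```python
import time
from threading import Thread

results = []

def slow_upload(msg):
    # hypothetical stand-in for the upload + text message request
    time.sleep(0.5)
    results.append(msg)

start = time.time()
t = Thread(target=slow_upload, args=("fridge opened",))
t.start()
# start() returns right away, well before the 0.5 s task finishes
returned_after = time.time() - start

# join() is only here so the demo can inspect the result; the
# send method described above never waits on its thread
t.join()
```

Because start() returns immediately, a frame loop calling it would keep reading frames while the upload runs in the background.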

Thus, the core text message sending logic is in the next method, _send :

        def _send(self, msg, tempVideo):
            # create a s3 client object
            s3 = boto3.client("s3",
                aws_access_key_id=self.conf["aws_access_key_id"],
                aws_secret_access_key=self.conf["aws_secret_access_key"],
            )

            # get the filename and upload the video in public read mode
            filename = tempVideo.path[tempVideo.path.rfind("/") + 1:]
            s3.upload_file(tempVideo.path, self.conf["s3_bucket"],
                filename, ExtraArgs={"ACL": "public-read",
                "ContentType": "video/mp4"})

The _send method is defined on Line 16. It operates as an independent thread so as not to impact the driver script flow.

Parameters (msg and tempVideo ) are passed in when the thread is launched.

The _send method first will upload the video to AWS S3 via:

- Initializing the s3 client with the access key and secret access key (Lines 18-21).

- Uploading the file (Lines 25-27).

Line 24 simply extracts the filename from the video path since we’ll need it later.
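The slicing on Line 24 can be tried in isolation. The path below is made up for illustration, and os.path.basename is shown only as an equivalent standard-library alternative, not as what the project uses.

```python
import os

path = "/tmp/2019-03-25_140500.mp4"   # hypothetical temp file path

# same slicing as in _send: everything after the last "/"
by_rfind = path[path.rfind("/") + 1:]

# standard-library alternative that gives the same result here
by_basename = os.path.basename(path)

# if there is no "/", rfind returns -1, so the slice starts at 0
# and the whole string is kept
no_slash = "clip.mp4"[("clip.mp4").rfind("/") + 1:]
```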

Let’s go ahead and send the message:

            # get the bucket location and build the url
            location = s3.get_bucket_location(
                Bucket=self.conf["s3_bucket"])["LocationConstraint"]
            url = "https://s3-{}.amazonaws.com/{}/{}".format(location,
                self.conf["s3_bucket"], filename)

            # initialize the twilio client and send the message
            client = Client(self.conf["twilio_sid"],
                self.conf["twilio_auth"])
            client.messages.create(to=self.conf["twilio_to"],
                from_=self.conf["twilio_from"], body=msg, media_url=url)

            # delete the temporary file
            tempVideo.cleanup()

To send the message and have the video show up in a cell phone messaging app, we need to send the actual text string along with a URL to the video file in S3.

Note: This must be a publicly accessible URL, so ensure that your S3 settings are correct.

The URL is generated on Lines 30-33.

From there, we’ll create a Twilio client (not to be confused with our boto3 s3 client) on Lines 36 and 37.

Lines 38 and 39 actually send the message. Notice the to , from_ , body , and media_url parameters.

Finally, we’ll remove the temporary video file to save some precious space (Line 42). If we skip this step, the clips will accumulate and your Pi may eventually run out of disk space, especially if space is already low.
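One detail of the URL built in _send is worth guarding against. For buckets in the us-east-1 region, get_bucket_location reports a LocationConstraint of None, which the format string would turn into "s3-None". The sketch below shows one possible guard; build_s3_url is a hypothetical helper, and the region-less https://s3.amazonaws.com/... form used for that case is an assumption you should verify against your own bucket.

```python
def build_s3_url(location, bucket, filename):
    # hypothetical helper mirroring the URL format used in _send
    if location is None:
        # assumed fallback when no region is reported for the bucket
        return "https://s3.amazonaws.com/{}/{}".format(bucket, filename)
    return "https://s3-{}.amazonaws.com/{}/{}".format(location,
        bucket, filename)

# made-up bucket and file names, for illustration only
regional = build_s3_url("us-west-2", "my-fridge-clips", "clip.mp4")
default = build_s3_url(None, "my-fridge-clips", "clip.mp4")
```

Whichever form you use, open the resulting URL in a browser once to confirm the clip is publicly readable before relying on it for MMS delivery.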

The Raspberry Pi security camera driver script

Now that we have (1) our configuration file, (2) a method to load the config, and (3) a class to interact with the S3 and Twilio APIs, let’s create the main driver script for the Raspberry Pi security camera.

The way this script works is relatively simple:

- It monitors the average amount of light seen by the camera.

- When the refrigerator door opens, the light comes on, the Pi detects the light, and the Pi starts recording.

- When the refrigerator door is closed, the light turns off, the Pi detects the absence of light, and the Pi stops recording + sends me or you a video message.

- If someone leaves the refrigerator open for longer than the specified seconds in the config file, I’ll receive a separate text message indicating that the door was left open.

Let’s go ahead and implement these features.

Open up the detect.py file and insert the following code:

    # import the necessary packages
    from __future__ import print_function
    from pyimagesearch.notifications import TwilioNotifier
    from pyimagesearch.utils import Conf
    from imutils.video import VideoStream
    from imutils.io import TempFile
    from datetime import datetime
    from datetime import date
    import numpy as np
    import argparse
    import imutils
    import signal
    import time
    import cv2
    import sys

Lines 2-15 import our necessary packages. Notably, we’ll be using our TwilioNotifier , Conf class, VideoStream , imutils , and OpenCV.

Let’s define an interrupt signal handler and parse for our config file path argument:

    # function to handle keyboard interrupt
    def signal_handler(sig, frame):
        print("[INFO] You pressed `ctrl + c`! Closing refrigerator monitor" \
            " application...")
        sys.exit(0)

    # construct the argument parser and parse the arguments
    ap = argparse.ArgumentParser()
    ap.add_argument("-c", "--conf", required=True,
        help="Path to the input configuration file")
    args = vars(ap.parse_args())

Our script will run headless because we don’t need an HDMI screen inside the fridge.

On Lines 18-21, we define a signal_handler function to capture “ctrl + c” events from the keyboard gracefully. It isn’t always necessary to do this, but if you need anything to execute before the script exits (for example, when someone disables your security camera!), you can put it in this function.

We have a single command line argument to parse. The --conf flag (the path to the config file) can be provided directly in the terminal or in a launch-on-reboot script. You may learn more about command line arguments here.
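If you would like to see what the parser produces without launching the whole script, a small sketch is to hand parse_args an explicit list. The list stands in for what you would type in the terminal.

```python
import argparse

ap = argparse.ArgumentParser()
ap.add_argument("-c", "--conf", required=True,
    help="Path to the input configuration file")

# passing a list simulates running:
#   python detect.py --conf config/config.json
args = vars(ap.parse_args(["--conf", "config/config.json"]))
print(args)
```

The vars call turns the parsed namespace into a plain dictionary, which is why the driver script reads the path as args["conf"].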

Let’s perform our initializations:

    # load the configuration file and initialize the Twilio notifier
    conf = Conf(args["conf"])
    tn = TwilioNotifier(conf)

    # initialize the flags for fridge open and notification sent
    fridgeOpen = False
    notifSent = False

    # initialize the video stream and allow the camera sensor to warmup
    print("[INFO] warming up camera...")
    # vs = VideoStream(src=0).start()
    vs = VideoStream(usePiCamera=True).start()
    time.sleep(2.0)

    # signal trap to handle keyboard interrupt
    signal.signal(signal.SIGINT, signal_handler)
    print("[INFO] Press `ctrl + c` to exit, or 'q' to quit if you have" \
        " the display option on...")

    # initialize the video writer and the frame dimensions (we'll set
    # them as soon as we read the first frame from the video)
    writer = None
    W = None
    H = None

Our initializations take place on Lines 30-52. Let’s review them:

- Lines 30 and 31 instantiate our Conf and TwilioNotifier objects.

- Two status variables are initialized to determine when the fridge is open and when a notification has been sent (Lines 34 and 35).

- We’ll start our VideoStream on Lines 39-41. I’ve elected to use a PiCamera, so Line 39 (USB webcam) is commented out. You can easily swap these if you are using a USB webcam.

- Line 44 registers our signal_handler function so that it is called when a “ctrl + c” (SIGINT) arrives.

- Our video writer and frame dimensions are initialized on Lines 50-52.

It’s time to begin looping over frames:

    # loop over the frames of the stream
    while True:
        # grab both the next frame from the stream and the previous
        # refrigerator status
        frame = vs.read()
        fridgePrevOpen = fridgeOpen

        # quit if there was a problem grabbing a frame
        if frame is None:
            break

        # resize the frame and convert the frame to grayscale
        frame = imutils.resize(frame, width=200)
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

        # if the frame dimensions are empty, set them
        if W is None or H is None:
            (H, W) = frame.shape[:2]

Our while loop begins on Line 55. We proceed to read a frame from our video stream (Line 58). The frame undergoes a sanity check on Lines 62 and 63 to determine if we have a legitimate image from our camera.

Line 59 sets our fridgePrevOpen flag. The previous value must always be set at the beginning of the loop and it is based on the current value which will be determined later.

Our frame is resized to a dimension that will look reasonable on a smartphone and also make for a smaller filesize for our MMS video (Line 66).

On Line 67, we create a grayscale image from frame — we’ll need this soon to determine the average amount of light in the frame.

Our dimensions are set via Lines 70 and 71 during the first iteration of the loop.

Now let’s determine if the refrigerator is open:

        # calculate the average of all pixels where a higher mean
        # indicates that there is more light coming into the refrigerator
        mean = np.mean(gray)

        # determine if the refrigerator is currently open
        fridgeOpen = mean > conf["thresh"]

Determining if the refrigerator is open is a dead-simple, two-step process:

- Average all pixel intensities of our grayscale image (Line 75).

- Compare the average to the threshold value in our configuration (Line 78). I’m confident that a value of 50 (in the config.json file) will be an appropriate threshold for most refrigerators with a light that turns on and off as the door is opened and closed. That said, you may want to experiment with tweaking that value yourself.

The fridgeOpen variable is simply a boolean indicating if the refrigerator is open or not.
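To get a feel for the threshold without a camera attached, here is a minimal sketch of the same comparison on two tiny made-up "frames". It uses plain Python lists instead of NumPy so it runs anywhere; is_open is a hypothetical helper, and in the actual script the average comes from np.mean(gray).

```python
THRESH = 50  # same value as "thresh" in config.json

def is_open(gray_rows, thresh=THRESH):
    # gray_rows: rows of 0-255 intensities, standing in for the
    # grayscale frame used by the driver script
    pixels = [p for row in gray_rows for p in row]
    mean = sum(pixels) / len(pixels)
    return mean > thresh

# made-up pixel values for illustration
dark = [[3, 5, 4], [2, 6, 4]]            # door closed, light off
lit = [[120, 140, 90], [110, 135, 95]]   # door open, light on

print(is_open(dark), is_open(lit))
```

Printing the mean from your own fridge with the door open and closed is a quick way to pick a threshold that sits comfortably between the two.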

Let’s now determine if we need to start capturing a video:

        # if the fridge is open and previously it was closed, it means
        # the fridge has been just opened
        if fridgeOpen and not fridgePrevOpen:
            # record the start time
            startTime = datetime.now()

            # create a temporary video file and initialize the video
            # writer object
            tempVideo = TempFile(ext=".mp4")
            writer = cv2.VideoWriter(tempVideo.path, 0x21, 30, (W, H),
                True)

As shown by the conditional on Line 82, so long as the refrigerator was just opened (i.e. it was not previously opened), we will initialize our video writer .

We’ll go ahead and grab the startTime , create a tempVideo , and initialize our video writer with the temporary file path (Lines 84-90). The constant 0x21 is for H264 video encoding.

Now we’ll handle the case where the refrigerator was previously open:

        # if the fridge is open then there are 2 possibilities,
        # 1) it's left open for more than the *threshold* seconds.
        # 2) it's closed in less than or equal to the *threshold* seconds.
        elif fridgePrevOpen:
            # calculate the time difference between the current time and
            # start time
            timeDiff = (datetime.now() - startTime).seconds

            # if the fridge is open and the time difference is greater
            # than threshold, then send a notification
            if fridgeOpen and timeDiff > conf["open_threshold_seconds"]:
                # if a notification has not been sent yet, then send a
                # notification
                if not notifSent:
                    # build the message and send a notification
                    msg = "Intruder has left your fridge open!!!"

                    # release the video writer pointer and reset the
                    # writer object
                    writer.release()
                    writer = None

                    # send the message and the video to the owner and
                    # set the notification sent flag
                    tn.send(msg, tempVideo)
                    notifSent = True

If the refrigerator was previously open, let’s check to ensure it wasn’t left open long enough to trigger an “Intruder has left your fridge open!” alert.

Kids can leave the refrigerator open by accident, or maybe after a holiday, you have a lot of food preventing the refrigerator door from closing all the way. You don’t want your food to spoil, so you may want these alerts!

For this message to be sent, the timeDiff must be greater than the threshold set in the config (Lines 98-102).

The alert includes both a msg and the video, as shown on Lines 107-117. The msg is defined, the writer is released, and the notification is sent.
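A small aside on the timeDiff calculation: the .seconds attribute of a timedelta holds only the seconds component and leaves out whole days. That is fine for a fridge door, but if you adapt the script to something that can stay open for more than a day, total_seconds() may be the safer choice. A minimal sketch of the difference:

```python
from datetime import timedelta

short = timedelta(seconds=75)
long = timedelta(days=1, seconds=5)

short_secs = short.seconds          # 75, same as total_seconds() here
long_secs = long.seconds            # 5, the whole day is not included
long_total = long.total_seconds()   # 86405.0, includes the day
```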

Let’s now take care of the most common scenario where the refrigerator was previously open, but now it is closed (i.e. some thief stole your food, or maybe it was you when you became hungry):

            # check to see if the fridge is closed
            elif not fridgeOpen:
                # if a notification has already been sent, then just set
                # the notifSent to false for the next iteration
                if notifSent:
                    notifSent = False

                # if a notification has not been sent, then send a
                # notification
                else:
                    # record the end time and calculate the total time in
                    # seconds
                    endTime = datetime.now()
                    totalSeconds = (endTime - startTime).seconds
                    dateOpened = date.today().strftime("%A, %B %d %Y")

                    # build the message and send a notification
                    msg = "Your fridge was opened on {} at {} " \
                        "for {} seconds.".format(dateOpened,
                        startTime.strftime("%I:%M%p"), totalSeconds)

                    # release the video writer pointer and reset the
                    # writer object
                    writer.release()
                    writer = None

                    # send the message and the video to the owner
                    tn.send(msg, tempVideo)

The case beginning on Line 120 will send a video message indicating, “Your fridge was opened on {{ day }} at {{ time }} for {{ seconds }}.”

On Lines 123 and 124, our notifSent flag is reset if needed. If the notification was already sent, we set this value to False , effectively resetting it for the next iteration of the loop.

Otherwise, if the notification has not been sent, we’ll calculate the totalSeconds the refrigerator was open (Lines 131 and 132). We’ll also record the date the door was opened (Line 133).

Our msg string is populated with these values (Lines 136-138).
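To preview what the text message will look like, the sketch below builds the same string from fixed, made-up timestamps. Unlike the driver script, it derives the date from startTime rather than date.today() so that the output is the same every time you run it.

```python
from datetime import datetime

# made-up open and close times, for illustration only
startTime = datetime(2019, 3, 25, 14, 5, 0)
endTime = datetime(2019, 3, 25, 14, 5, 42)

totalSeconds = (endTime - startTime).seconds
dateOpened = startTime.date().strftime("%A, %B %d %Y")

# adjacent string literals are joined first, then formatted as one
msg = "Your fridge was opened on {} at {} " \
    "for {} seconds.".format(dateOpened,
    startTime.strftime("%I:%M%p"), totalSeconds)
print(msg)
```

With the default locale this prints: Your fridge was opened on Monday, March 25 2019 at 02:05PM for 42 seconds.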

Then the video writer is released and the message and video are sent (Lines 142-147).

Our final block finishes out the loop and performs cleanup:

        # check to see if we should write the frame to disk
        if writer is not None:
            writer.write(frame)

    # check to see if we need to release the video writer pointer
    if writer is not None:
        writer.release()

    # cleanup the camera and close any open windows
    cv2.destroyAllWindows()
    vs.stop()

To finish the loop, we’ll write the frame to the video writer object and then go back to the top to grab the next frame.

When the loop exits, the writer is released, and the video stream is stopped.
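The open/close logic of the loop boils down to comparing each frame's state with the previous one. Here is a simplified sketch of just that transition detection, run on a made-up list of per-frame brightness averages. The fridge_events function is hypothetical, and it leaves out the left-open timer, video writing, and notifications.

```python
def fridge_events(means, thresh=50):
    # means: one average-brightness reading per frame
    events = []
    is_open = False
    for i, mean in enumerate(means):
        was_open = is_open          # previous state, like fridgePrevOpen
        is_open = mean > thresh     # current state, like fridgeOpen
        if is_open and not was_open:
            events.append((i, "opened"))   # where recording would start
        elif was_open and not is_open:
            events.append((i, "closed"))   # where the clip would be sent
    return events

readings = [4, 5, 130, 128, 131, 6, 5]   # made-up brightness values
print(fridge_events(readings))
```

For these readings the door is detected as opened at frame 2 and closed at frame 5, so the printed list is [(2, 'opened'), (5, 'closed')].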

Great job! You made it through a simple IoT project using a Raspberry Pi and camera.

It’s now time to place the bait. I know my thief likes hummus as much as I do, so I ran to the store and came back to put it in the fridge.

RPi security camera results

When deploying the Raspberry Pi security camera in your refrigerator to catch the hummus bandit, you’ll need to ensure that it will continue to run without a wireless connection to your laptop.

There are two great options for deployment:

- Run the computer vision Python script on reboot.

- Leave a screen session running with the Python computer vision script executing within.

Be sure to visit the first link if you just want your Pi to run the script when you plug in power.

While this blog post isn’t the right place for a full screen demo, here are the basics:

- Install screen via: sudo apt-get install screen

- Open an SSH connection to your Pi and run it: screen

- If the connection from your laptop to your Pi ever dies or is closed, don’t panic! The screen session is still running. You can reconnect by SSH’ing into the Pi again and then running screen -r. You’ll be back in your virtual window.

- Keyboard shortcuts for screen:

  - “ctrl + a, c”: Creates a new “window”.

  - “ctrl + a, p” and “ctrl + a, n”: Cycles through “previous” and “next” windows, respectively.

- For a more in-depth review of screen, see the documentation. Here’s a screen keyboard shortcut cheat sheet.

Once you’re comfortable with starting a script on reboot or working with screen, grab a USB battery pack that can source enough current. Shown in Figure 4, we’re using a RavPower 2200mAh battery pack connected to the Pi power input. The product specs claim it can charge an iPhone six or more times, and it seems to run a Raspberry Pi for roughly 10 hours (depending on the algorithm) as well.

Go ahead and plug in the battery pack, connect, and deploy the script (if you didn’t set it up to start on boot).

The commands are:

    $ screen
    # wait for screen to start
    $ source ~/.profile
    $ workon <env_name> # insert the name of your virtual environment
    $ python detect.py --conf config/config.json

If you aren’t familiar with command line arguments, please read this tutorial. The command line argument is also required if you are deploying the script upon reboot.

Let’s see it in action!

I’ve included a full demo of the Raspberry Pi security camera below:


Summary

In this tutorial, you learned how to build a Raspberry Pi security camera from scratch using OpenCV and computer vision.

Specifically, you learned how to:

- Access the Raspberry Pi camera module or USB webcam.

- Set up your Amazon AWS/S3 account so you can upload images/video when your security camera is triggered (other services such as Dropbox, Box, Google Drive, etc. will work as well, provided you can obtain a public-facing URL of the media).

- Obtain Twilio API keys used to send text messages with the uploaded images/video.

- Create a Raspberry Pi security camera using OpenCV and computer vision.

Finally, we put all the pieces together and deployed the security camera to monitor a refrigerator:

- Each time the door was opened we started recording

- After the door was closed the recording stopped

- The recording was then uploaded to the cloud

- And finally, a text message was sent to our phone showing the activity

You can extend the security camera to include other components as well. My first suggestion would be to take a look at how to build a home surveillance system using a Raspberry Pi where we use a more advanced motion detection technique. It would be fun to implement Twilio SMS/MMS notifications into the home surveillance project as well.

I hope you enjoyed this tutorial!
