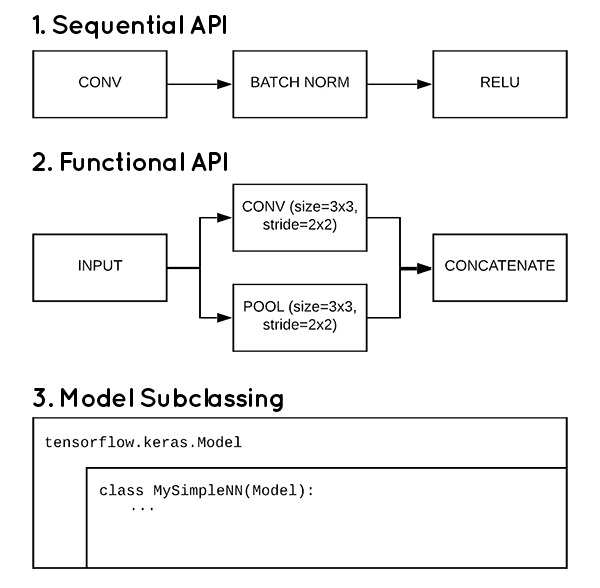

Keras and TensorFlow 2.0 provide you with three methods to implement your own neural network architectures:

- Sequential API

- Functional API

- Model subclassing

Inside of this tutorial you’ll learn how to utilize each of these methods, including how to choose the right API for the job.


To learn more about Sequential, Functional, and Model subclassing with Keras and TensorFlow 2.0, just keep reading!

3 ways to create a Keras model with TensorFlow 2.0 (Sequential, Functional, and Model subclassing)

In the first half of this tutorial, you will learn how to implement sequential, functional, and model subclassing architectures using Keras and TensorFlow 2.0. I’ll then show you how to train each of these model architectures.

Once our training script is implemented we’ll then train each of the sequential, functional, and subclassing models, and review the results.

Furthermore, all code examples covered here will be compatible with Keras and TensorFlow 2.0.

Project structure

Go ahead and grab the source code to this post by using the “Downloads” section of this tutorial. Then extract the files and inspect the directory contents with the tree command:

```
$ tree --dirsfirst
.
├── output
│   ├── class.png
│   ├── functional.png
│   └── sequential.png
├── pyimagesearch
│   ├── __init__.py
│   └── models.py
└── train.py

2 directories, 6 files
```

Our models.py contains three model builders, one for each of the Sequential, Functional, and Model subclassing APIs: two functions (shallownet_sequential and minigooglenet_functional) and one class (MiniVGGNetModel).

The training script, train.py, will load a model depending on the provided command line arguments. The model will be trained on the CIFAR-10 dataset, and an accuracy/loss curve plot will be written to a .png file in the output directory.

Implementing a Sequential model with Keras and TensorFlow 2.0

A sequential model, as the name suggests, allows you to create models layer-by-layer in a step-by-step fashion.

Keras Sequential API is by far the easiest way to get up and running with Keras, but it’s also the most limited — you cannot create models that:

- Share layers

- Have branches (at least not easily)

- Have multiple inputs

- Have multiple outputs

Examples of seminal sequential architectures that you may have already used or implemented include:

- LeNet

- AlexNet

- VGGNet

Let’s go ahead and implement a basic Convolutional Neural Network using TensorFlow 2.0 and Keras’ Sequential API.

Open up the models.py file in your project structure and insert the following code:

```python
# import the necessary packages
from tensorflow.keras.models import Model
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import BatchNormalization
from tensorflow.keras.layers import AveragePooling2D
from tensorflow.keras.layers import MaxPooling2D
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import Activation
from tensorflow.keras.layers import Dropout
from tensorflow.keras.layers import Flatten
from tensorflow.keras.layers import Input
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import concatenate
```

Notice how all of our Keras imports come from tensorflow.keras (also known as tf.keras).

To implement our sequential model, we need the Sequential import. Let’s go ahead and create the sequential model now:

```python
def shallownet_sequential(width, height, depth, classes):
    # initialize the model along with the input shape to be
    # "channels last" ordering
    model = Sequential()
    inputShape = (height, width, depth)

    # define the first (and only) CONV => RELU layer
    model.add(Conv2D(32, (3, 3), padding="same",
        input_shape=inputShape))
    model.add(Activation("relu"))

    # softmax classifier
    model.add(Flatten())
    model.add(Dense(classes))
    model.add(Activation("softmax"))

    # return the constructed network architecture
    return model
```

The shallownet_sequential function is our model builder.

Notice how we first initialize model as an instance of the Sequential class. We then add each layer to the model, one at a time.

ShallowNet contains one CONV => RELU layer followed by a softmax classifier. Notice that each of these lines calls model.add to assemble our CNN with the appropriate building blocks. Order matters: you must call model.add in the order in which you want to insert layers, normalization methods, softmax classifiers, etc.

Once your model has all of the components you desire, you can return the object so that it can be compiled later. Here, shallownet_sequential returns our sequential model, which we will use in our training script.
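To make ShallowNet concrete, here is a hand count of its trainable parameters, written in plain Python rather than Keras. It assumes the CIFAR-10 setup used later in train.py (32×32×3 inputs, 10 classes). conv2d_params and dense_params are hypothetical helpers for illustration, not Keras functions.

```python
# Hand count of ShallowNet's trainable parameters for CIFAR-10-sized input.
# conv2d_params and dense_params are illustrative helpers, not Keras APIs.

def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # each filter has kernel_h * kernel_w * in_channels weights plus one bias
    return (kernel_h * kernel_w * in_channels + 1) * filters


def dense_params(in_features, units):
    # one weight per (input, unit) pair plus one bias per unit
    return (in_features + 1) * units


height, width, depth, classes = 32, 32, 3, 10

conv = conv2d_params(3, 3, depth, 32)

# padding="same" with the default stride of 1 keeps the 32x32 spatial size,
# so Flatten sees height * width * 32 values
flat_features = height * width * 32
dense = dense_params(flat_features, classes)

# Activation and Flatten layers have no parameters
print(conv, flat_features, dense, conv + dense)  # 896 32768 327690 328586
```

Almost all of the parameters sit in the final Dense layer. Because padding="same" preserves the full 32×32×32 volume, Flatten hands that layer 32,768 inputs. The total should match what model.summary() reports for shallownet_sequential(32, 32, 3, 10).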

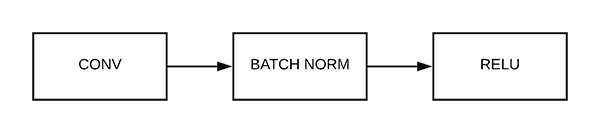

Creating a Functional model with Keras and TensorFlow 2.0

Once you’ve had some practice implementing a few basic neural network architectures using Keras’ Sequential API, you’ll then want to gain experience working with the Functional API.

Keras’ Functional API is easy to use and is typically favored by most deep learning practitioners who use the Keras deep learning library.

Using the Functional API you can:

- Create more complex models.

- Have multiple inputs and multiple outputs.

- Easily define branches in your architectures (e.g., an Inception block, ResNet block, etc.).

- Design directed acyclic graphs (DAGs).

- Easily share layers inside the architecture.

Furthermore, any Sequential model can be implemented using Keras’ Functional API.

Examples of models that have Functional characteristics (such as layer branching) include:

- ResNet

- GoogLeNet/Inception

- Xception

- SqueezeNet

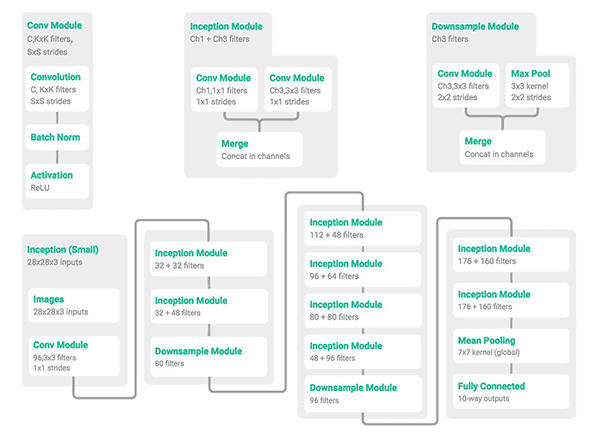

To gain experience using TensorFlow 2.0 and Keras’ Functional API, let’s implement MiniGoogLeNet, which includes a simplified version of the Inception module from Szegedy et al.’s seminal Going Deeper with Convolutions paper.

As you can see, there are three modules inside the MiniGoogLeNet architecture:

- conv_module: Performs convolution on an input volume, applies batch normalization, and then applies a ReLU activation. We define this module for simplicity and reusability. It lets us apply a “convolutional block” inside our architecture using as few lines of code as possible, which keeps our implementation tidy, organized, and easier to debug.

- inception_module: Calls conv_module twice to create two branches. The first CONV block applies 1×1 convolution, while the second performs 3×3 convolution with “same” padding. This ensures the output volume sizes of the 1×1 and 3×3 convolutions are identical. The output volumes are then concatenated along the channel dimension.

- downsample_module: Reduces the spatial size of an input volume. Like the inception_module, it uses two branches. The first branch performs 3×3 convolution with (1) a 2×2 stride and (2) “valid” padding, thereby reducing the volume size. The second branch applies 3×3 max-pooling with a 2×2 stride. Both branches produce outputs with identical spatial dimensions, so they can be concatenated along the channel axis.
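You can check that both branches of each module produce identically sized outputs using standard output-size arithmetic. The sketch below assumes a 32×32 CIFAR-10 input and Keras’ padding rules: “same” gives ceil(size / stride), and “valid” gives floor((size - kernel) / stride) + 1. conv_output_size is a hypothetical helper, and the same formula applies to pooling windows.

```python
def conv_output_size(size, kernel, stride, padding):
    """Output size along one spatial axis under Keras-style padding rules.

    Applies to Conv2D and to pooling windows alike (dilation assumed to be 1).
    """
    if padding == "same":
        # "same" zero-pads so the output is ceil(size / stride)
        return -(-size // stride)
    if padding == "valid":
        # "valid" uses no padding: floor((size - kernel) / stride) + 1
        return (size - kernel) // stride + 1
    raise ValueError(f"unknown padding mode: {padding}")


# inception_module: 1x1 and 3x3 convolutions, stride 1, "same" padding
print(conv_output_size(32, 1, 1, "same"), conv_output_size(32, 3, 1, "same"))  # 32 32

# downsample_module: 3x3 conv with stride 2 and "valid" padding, plus
# 3x3 max-pooling with stride 2 (MaxPooling2D defaults to "valid")
conv_branch = conv_output_size(32, 3, 2, "valid")
pool_branch = conv_output_size(32, 3, 2, "valid")
print(conv_branch, pool_branch)  # 15 15
```

MaxPooling2D defaults to “valid” padding. That is why the pooling branch of downsample_module shrinks in lockstep with the strided convolution.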

Think of each of these modules as Legos — we implement each type of Lego and then stack them in a particular manner to define our model architecture.

Legos can be organized and fit together in a near-infinite number of possibilities; however, since form defines function, we need to take care and consider how these Legos should fit together.

Note: If you would like a detailed review of each of the modules inside the MiniGoogLeNet architecture, be sure to refer to Deep Learning for Computer Vision with Python where I cover them in detail.

As an example of piecing our “Lego modules” together, let’s go ahead and implement MiniGoogLeNet now:

```python
def minigooglenet_functional(width, height, depth, classes):
    def conv_module(x, K, kX, kY, stride, chanDim, padding="same"):
        # define a CONV => BN => RELU pattern
        x = Conv2D(K, (kX, kY), strides=stride, padding=padding)(x)
        x = BatchNormalization(axis=chanDim)(x)
        x = Activation("relu")(x)

        # return the block
        return x
```

We begin by defining the minigooglenet_functional model builder function.

We’re going to define three reusable modules which are part of the GoogLeNet architecture:

- conv_module

- inception_module

- downsample_module

Be sure to refer to the detailed descriptions of each above.

Defining the modules as sub-functions like this allows us to reuse the structure and save on lines of code, not to mention making it easier to read and make modifications.

conv_module takes several parameters. The most important is x, the input to this module. The other parameters pass through to Conv2D and BatchNormalization.

The body of conv_module builds a set of CONV => BN => RELU layers.

Notice that each of these lines begins with x = and ends with (x). This style is representative of the Keras Functional API: each layer is instantiated and then immediately called on the output of the previous layer, so x acts as the input to subsequent layers. This functional style will be present throughout the minigooglenet_functional function.

Finally, conv_module returns its output x to the caller.

Let’s create our inception_module which consists of two convolution modules:

```python
    def inception_module(x, numK1x1, numK3x3, chanDim):
        # define two CONV modules, then concatenate across the
        # channel dimension
        conv_1x1 = conv_module(x, numK1x1, 1, 1, (1, 1), chanDim)
        conv_3x3 = conv_module(x, numK3x3, 3, 3, (1, 1), chanDim)
        x = concatenate([conv_1x1, conv_3x3], axis=chanDim)

        # return the block
        return x
```

inception_module accepts the input x, the number of 1×1 filters (numK1x1), the number of 3×3 filters (numK3x3), and the channel dimension (chanDim).

The inception module contains two branches of the conv_module that are concatenated together:

- In the first branch, we perform 1×1 convolutions.

- In the second branch, we perform 3×3 convolutions.

The call to concatenate then joins the module branches along the channel dimension. Both branches use “same” padding and a 1×1 stride, so their output volumes have the same spatial dimensions. That is what allows them to be concatenated along the channel dimension.

Finally, inception_module returns the concatenated output to the caller.
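Concatenation only works when every dimension except the concatenation axis matches. The result’s channel count is then the sum of the inputs’ channel counts. The shape-only sketch below illustrates that rule. concat_shapes is a hypothetical helper that mimics the check, not a Keras function.

```python
def concat_shapes(shapes, axis=-1):
    """Shape produced by concatenating (H, W, C) shapes along `axis`.

    Illustrative only: every dimension except `axis` must match, and the
    sizes along `axis` are summed.
    """
    ndim = len(shapes[0])
    axis = axis % ndim  # map -1 (chanDim) to the last index
    for shape in shapes[1:]:
        if len(shape) != ndim:
            raise ValueError("all inputs must have the same rank")
        for i, (a, b) in enumerate(zip(shapes[0], shape)):
            if i != axis and a != b:
                raise ValueError(f"dimension {i} differs: {a} vs {b}")
    out = list(shapes[0])
    out[axis] = sum(shape[axis] for shape in shapes)
    return tuple(out)


# inception_module(x, 32, 48, chanDim) on a 32x32 input:
# 32 channels from the 1x1 branch + 48 from the 3x3 branch
print(concat_shapes([(32, 32, 32), (32, 32, 48)]))  # (32, 32, 80)

# if the 3x3 branch used "valid" padding it would be 30x30 and could not
# be concatenated with the 32x32 output of the 1x1 branch
try:
    concat_shapes([(32, 32, 32), (30, 30, 48)])
except ValueError as err:
    print("cannot concatenate:", err)  # cannot concatenate: dimension 0 differs: 32 vs 30
```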

Finally, we’ll implement our downsample_module :

```python
    def downsample_module(x, K, chanDim):
        # define the CONV module and POOL, then concatenate
        # across the channel dimensions
        conv_3x3 = conv_module(x, K, 3, 3, (2, 2), chanDim,
            padding="valid")
        pool = MaxPooling2D((3, 3), strides=(2, 2))(x)
        x = concatenate([conv_3x3, pool], axis=chanDim)

        # return the block
        return x
```

downsample_module accepts the input x, the number of convolution filters K, and the channel dimension (chanDim). The downsample module is responsible for reducing the input volume size, and it also uses two branches:

- The first branch performs 3×3 convolution with a 2×2 stride and “valid” padding.

- The second branch performs 3×3 max-pooling with a 2×2 stride.

The outputs of the branches are then stacked along the channel dimension via a call to concatenate.

Finally, downsample_module returns the concatenated output to the caller.

With each of our modules defined, we can now use them to build the entire MiniGoogLeNet architecture using the Functional API:

```python
    # initialize the input shape to be "channels last" and the
    # channels dimension itself
    inputShape = (height, width, depth)
    chanDim = -1

    # define the model input and first CONV module
    inputs = Input(shape=inputShape)
    x = conv_module(inputs, 96, 3, 3, (1, 1), chanDim)

    # two Inception modules followed by a downsample module
    x = inception_module(x, 32, 32, chanDim)
    x = inception_module(x, 32, 48, chanDim)
    x = downsample_module(x, 80, chanDim)

    # four Inception modules followed by a downsample module
    x = inception_module(x, 112, 48, chanDim)
    x = inception_module(x, 96, 64, chanDim)
    x = inception_module(x, 80, 80, chanDim)
    x = inception_module(x, 48, 96, chanDim)
    x = downsample_module(x, 96, chanDim)

    # two Inception modules followed by global POOL and dropout
    x = inception_module(x, 176, 160, chanDim)
    x = inception_module(x, 176, 160, chanDim)
    x = AveragePooling2D((7, 7))(x)
    x = Dropout(0.5)(x)

    # softmax classifier
    x = Flatten()(x)
    x = Dense(classes)(x)
    x = Activation("softmax")(x)

    # create the model
    model = Model(inputs, x, name="minigooglenet")

    # return the constructed network architecture
    return model
```

We begin by setting up the inputs to our CNN: the “channels last” input shape, the channel dimension (chanDim = -1), and the Input layer.

From there, we use the Functional API to assemble our model:

- First, we apply a single conv_module.

- Two inception_module blocks are then stacked on top of each other before the downsample_module reduces the volume size.

- We then deepen the network by applying four inception_module blocks before reducing the volume size via the downsample_module.

- We then stack two more inception_module blocks before applying average pooling and dropout.

- A softmax classifier (Flatten, Dense, and a softmax activation) is then applied.

- Finally, the fully constructed Model is created from the inputs and outputs and returned to the calling function.
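Combining the padding and concatenation rules, we can trace the volume shape through minigooglenet_functional by hand. This sketch assumes a 32×32×3 CIFAR-10 input. The helper functions are hypothetical and only compute (height, width, channels) tuples. Note that the max-pooling branch of downsample_module carries the input’s channels through, so the module outputs K plus the input channel count.

```python
# Shape-only trace of minigooglenet_functional(32, 32, 3, 10).
# These helpers are illustrative and compute (height, width, channels) tuples.

def valid_size(size, kernel, stride):
    # output size for "valid" padding
    return (size - kernel) // stride + 1


def conv_block(shape, K):
    # conv_module with a 1x1 stride and "same" padding keeps H and W
    h, w, _ = shape
    return (h, w, K)


def inception(shape, numK1x1, numK3x3):
    # both branches keep H and W; concatenation sums their channels
    h, w, _ = shape
    return (h, w, numK1x1 + numK3x3)


def downsample(shape, K):
    # 3x3/2 "valid" conv branch gives K channels; the 3x3/2 max-pool
    # branch keeps the input's c channels; concatenation sums them
    h, w, c = shape
    return (valid_size(h, 3, 2), valid_size(w, 3, 2), K + c)


shape = conv_block((32, 32, 3), 96)
for k1, k3 in [(32, 32), (32, 48)]:
    shape = inception(shape, k1, k3)
shape = downsample(shape, 80)          # (15, 15, 160)
for k1, k3 in [(112, 48), (96, 64), (80, 80), (48, 96)]:
    shape = inception(shape, k1, k3)
shape = downsample(shape, 96)          # (7, 7, 240)
for k1, k3 in [(176, 160), (176, 160)]:
    shape = inception(shape, k1, k3)

print(shape)  # (7, 7, 336)
```

The final 7×7 spatial size explains the choice of AveragePooling2D((7, 7)): it reduces the volume to 1×1×336 before Flatten. This holds for CIFAR-10-sized inputs; a different input resolution would need a different pool size.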

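Again, notice how the Functional API differs from the Sequential API discussed in the previous section. Rather than calling model.add, we pass each output to the next layer ourselves, and then build a Model from the inputs and the final output.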

For a more detailed discussion of how to utilize Keras’ Functional API to implement your own custom model architectures, be sure to refer to my book, Deep Learning for Computer Vision with Python, where I discuss the Functional API in more detail.

Additionally, I want to give credit to Zhang et al., who originally proposed the MiniGoogLeNet architecture (along with a beautiful visualization of it) in their paper, Understanding deep learning requires rethinking generalization.

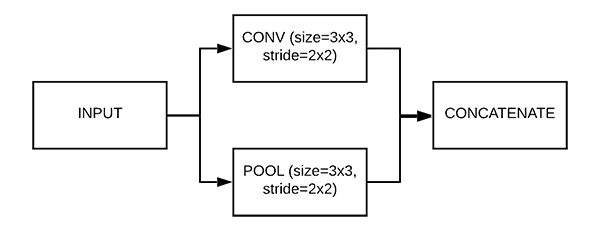

Model subclassing with Keras and TensorFlow 2.0

The third and final method to implement a model architecture using Keras and TensorFlow 2.0 is called model subclassing.

Inside of Keras the Model class is the root class used to define a model architecture. Since Keras utilizes object-oriented programming, we can actually subclass the Model class and then insert our architecture definition.

Model subclassing is fully-customizable and enables you to implement your own custom forward-pass of the model.

However, this flexibility and customization comes at a cost — model subclassing is way harder to utilize than the Sequential API or Functional API.

So, if the model subclassing method is so hard to use, why bother using it at all?

Exotic architectures or custom layer/model implementations, especially those utilized by researchers, can be extremely challenging, if not impossible, to implement using the standard Sequential or Functional APIs.

Instead, researchers wish to have control over every nuance of the network and training process — and that’s exactly what model subclassing provides them.

Let’s look at a simple example implementing MiniVGGNet, an otherwise sequential model, but converted to a model subclass:

```python
class MiniVGGNetModel(Model):
    def __init__(self, classes, chanDim=-1):
        # call the parent constructor
        super(MiniVGGNetModel, self).__init__()

        # initialize the layers in the first (CONV => RELU) * 2 => POOL
        # layer set
        self.conv1A = Conv2D(32, (3, 3), padding="same")
        self.act1A = Activation("relu")
        self.bn1A = BatchNormalization(axis=chanDim)
        self.conv1B = Conv2D(32, (3, 3), padding="same")
        self.act1B = Activation("relu")
        self.bn1B = BatchNormalization(axis=chanDim)
        self.pool1 = MaxPooling2D(pool_size=(2, 2))

        # initialize the layers in the second (CONV => RELU) * 2 => POOL
        # layer set
        self.conv2A = Conv2D(32, (3, 3), padding="same")
        self.act2A = Activation("relu")
        self.bn2A = BatchNormalization(axis=chanDim)
        self.conv2B = Conv2D(32, (3, 3), padding="same")
        self.act2B = Activation("relu")
        self.bn2B = BatchNormalization(axis=chanDim)
        self.pool2 = MaxPooling2D(pool_size=(2, 2))

        # initialize the layers in our fully-connected layer set
        self.flatten = Flatten()
        self.dense3 = Dense(512)
        self.act3 = Activation("relu")
        self.bn3 = BatchNormalization()
        self.do3 = Dropout(0.5)

        # initialize the layers in the softmax classifier layer set
        self.dense4 = Dense(classes)
        self.softmax = Activation("softmax")
```

We begin by defining our MiniVGGNetModel class, which subclasses Model, along with its constructor.

The constructor first calls the parent constructor using the super keyword.

From there, our layers are defined as instance attributes, each with its own name. Attributes in Python use the self keyword and are typically (but not always) defined in a constructor. Let’s review them now:

- The first (CONV => RELU) * 2 => POOL layer set.

- The second (CONV => RELU) * 2 => POOL layer set.

- Our fully-connected network head (Dense) with a "softmax" classifier.

Notice how each layer is defined inside the constructor — this is on purpose!

Let’s say we had our own custom layer implementation that performed an exotic type of convolution or pooling. That layer could be defined elsewhere (for example, as its own class) and then instantiated inside the MiniVGGNetModel constructor.

Once our Keras layers and custom layers are defined, we can then define the network topology/graph inside the call method, which is used to perform a forward pass:

```python
    def call(self, inputs):
        # build the first (CONV => RELU) * 2 => POOL layer set
        x = self.conv1A(inputs)
        x = self.act1A(x)
        x = self.bn1A(x)
        x = self.conv1B(x)
        x = self.act1B(x)
        x = self.bn1B(x)
        x = self.pool1(x)

        # build the second (CONV => RELU) * 2 => POOL layer set
        x = self.conv2A(x)
        x = self.act2A(x)
        x = self.bn2A(x)
        x = self.conv2B(x)
        x = self.act2B(x)
        x = self.bn2B(x)
        x = self.pool2(x)

        # build our FC layer set
        x = self.flatten(x)
        x = self.dense3(x)
        x = self.act3(x)
        x = self.bn3(x)
        x = self.do3(x)

        # build the softmax classifier
        x = self.dense4(x)
        x = self.softmax(x)

        # return the constructed model
        return x
```
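Even though MiniVGGNetModel is written as a subclass, its shapes follow the same arithmetic as any sequential CNN. The plain-Python sketch below uses hypothetical helpers to trace call() for a 32×32×3 input with 10 classes. It counts the weights in the convolutional and Dense layers; BatchNormalization parameters are left out for brevity.

```python
# Shape and weight trace of MiniVGGNetModel.call() for a 32x32x3 input and
# 10 classes. Illustrative helpers only; BatchNormalization is not counted.

def pool_2x2(size):
    # MaxPooling2D(pool_size=(2, 2)): stride defaults to the pool size,
    # padding defaults to "valid"
    return (size - 2) // 2 + 1


def conv3x3_params(in_channels, filters):
    # 3x3 kernel weights per input channel per filter, plus one bias per filter
    return (3 * 3 * in_channels + 1) * filters


size = 32
size = pool_2x2(size)  # the convs use "same" padding, so only pool1 shrinks: 16
size = pool_2x2(size)  # pool2: 8

flat = size * size * 32        # conv2B outputs 32 channels
conv_total = conv3x3_params(3, 32) + 3 * conv3x3_params(32, 32)
dense3 = (flat + 1) * 512      # Dense(512)
dense4 = (512 + 1) * 10        # Dense(classes)

print(size, flat, conv_total, dense3, dense4)  # 8 2048 28640 1049088 5130
```

The Dense(512) layer alone holds over a million weights, far more than all four convolutions combined.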

Notice how this model is essentially a Sequential model; however, we could just as easily define a model with multiple inputs/outputs, branches, etc.

The majority of deep learning practitioners won’t have to use the model subclassing method, but just know that it’s available to you if you need it!

Implementing the training script

Our three model architectures are implemented, but how are we going to train them?

The answer lies inside train.py — let’s take a look:

```python
# set the matplotlib backend so figures can be saved in the background
import matplotlib
matplotlib.use("Agg")

# there seems to be an issue with TensorFlow 2.0 throwing non-critical
# warnings regarding gradients when using the model sub-classing
# feature -- I found that by setting the logging level I can suppress
# the warnings from showing up (likely won't be required in future
# releases of TensorFlow)
import logging
logging.getLogger("tensorflow").setLevel(logging.CRITICAL)

# import the necessary packages
from pyimagesearch.models import MiniVGGNetModel
from pyimagesearch.models import minigooglenet_functional
from pyimagesearch.models import shallownet_sequential
from sklearn.preprocessing import LabelBinarizer
from sklearn.metrics import classification_report
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.datasets import cifar10
import matplotlib.pyplot as plt
import numpy as np
import argparse
```

The first block of train.py imports our packages:

- For matplotlib, we set the backend to "Agg" so that we can export our plots to disk as .png files.

- We import logging and set the log level to ignore anything but critical errors. TensorFlow was reporting (irrelevant) warning messages when training a model using Keras’ model subclassing feature, so I updated the logging to only report critical messages. I believe that the warnings themselves are a bug inside TensorFlow 2.0 that will likely be removed in the next release.

- Our three CNN models are imported: (1) MiniVGGNetModel, (2) minigooglenet_functional, and (3) shallownet_sequential.

- We import our CIFAR-10 dataset.

From here we’ll go ahead and parse command line arguments:

```python
# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-m", "--model", type=str, default="sequential",
    choices=["sequential", "functional", "class"],
    help="type of model architecture")
ap.add_argument("-p", "--plot", type=str, required=True,
    help="path to output plot file")
args = vars(ap.parse_args())
```

Our two command line arguments include:

- --model: The model architecture to build, which must be one of choices=["sequential", "functional", "class"] (it defaults to "sequential"). This selects which of Keras’ APIs is used to construct the model.

- --plot: The path to the output plot image file. You may store your plots in the output/ directory as I have done.
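One way to see what train.py receives from these two flags is to give the same parser an explicit argument list instead of the real command line. The argument lists below are illustrative.

```python
import argparse

# same parser definition as train.py
ap = argparse.ArgumentParser()
ap.add_argument("-m", "--model", type=str, default="sequential",
    choices=["sequential", "functional", "class"],
    help="type of model architecture")
ap.add_argument("-p", "--plot", type=str, required=True,
    help="path to output plot file")

# parse an explicit list instead of sys.argv
args = vars(ap.parse_args(["--model", "functional", "--plot", "output/functional.png"]))
print(args["model"], args["plot"])  # functional output/functional.png

# --model can be omitted; it falls back to the "sequential" default
defaults = vars(ap.parse_args(["-p", "output/sequential.png"]))
print(defaults["model"])  # sequential
```

Because of the choices list, an unsupported value such as --model resnet makes argparse print an error and exit before any model is built.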

From here we’ll (1) initialize a number of hyperparameters, (2) prepare our data, and (3) construct our data augmentation object:

```python
# initialize the initial learning rate, batch size, and number of
# epochs to train for
INIT_LR = 1e-2
BATCH_SIZE = 128
NUM_EPOCHS = 60

# initialize the label names for the CIFAR-10 dataset
labelNames = ["airplane", "automobile", "bird", "cat", "deer", "dog",
    "frog", "horse", "ship", "truck"]

# load the CIFAR-10 dataset
print("[INFO] loading CIFAR-10 dataset...")
((trainX, trainY), (testX, testY)) = cifar10.load_data()

# scale the data to the range [0, 1]
trainX = trainX.astype("float32") / 255.0
testX = testX.astype("float32") / 255.0

# convert the labels from integers to vectors
lb = LabelBinarizer()
trainY = lb.fit_transform(trainY)
testY = lb.transform(testY)

# construct the image generator for data augmentation
aug = ImageDataGenerator(rotation_range=18, zoom_range=0.15,
    width_shift_range=0.2, height_shift_range=0.2, shear_range=0.15,
    horizontal_flip=True, fill_mode="nearest")
```

In this code block, we:

- Initialize the (1) learning rate, (2) batch size, and (3) number of training epochs.

- Set the CIFAR-10 dataset labelNames, load the dataset, and scale the pixel intensities to the range [0, 1].

- Binarize our labels (i.e., convert the integer class labels to one-hot vectors).

- Instantiate our data augmentation object with settings for random rotations, zooms, shifts, shears, and flips.
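To show what the label binarization and pixel scaling steps produce, here they are sketched without NumPy or scikit-learn. This is a toy, stdlib-only stand-in, not the LabelBinarizer implementation; one_hot and argmax are hypothetical helpers. The class indices follow the order of labelNames.

```python
# Toy, stdlib-only stand-ins for two preprocessing steps in train.py.
# one_hot and argmax are illustrative helpers, not library functions.

NUM_CLASSES = 10  # len(labelNames) for CIFAR-10


def one_hot(labels, num_classes=NUM_CLASSES):
    # integer class index -> vector with a single 1 at that index
    return [[1 if i == label else 0 for i in range(num_classes)]
            for label in labels]


def argmax(vector):
    # index of the largest entry; recovers the class index from a one-hot vector
    return max(range(len(vector)), key=vector.__getitem__)


# scale 8-bit pixel values to [0, 1], as train.py does with / 255.0
pixels = [0, 128, 255]
scaled = [p / 255.0 for p in pixels]

# 3 = "cat", 8 = "ship", 0 = "airplane" in labelNames order
labels = [3, 8, 0]
encoded = one_hot(labels)
print(encoded[0])                     # [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]
print([argmax(v) for v in encoded])   # [3, 8, 0]
```

The argmax step corresponds to what train.py later does with .argmax(axis=1). That call turns one-hot labels and softmax outputs back into class indices for classification_report.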

The heart of the script lies in this next code block where we instantiate our model:

```python
# check to see if we are using a Keras Sequential model
if args["model"] == "sequential":
    # instantiate a Keras Sequential model
    print("[INFO] using sequential model...")
    model = shallownet_sequential(32, 32, 3, len(labelNames))

# check to see if we are using a Keras Functional model
elif args["model"] == "functional":
    # instantiate a Keras Functional model
    print("[INFO] using functional model...")
    model = minigooglenet_functional(32, 32, 3, len(labelNames))

# check to see if we are using a Keras Model class
elif args["model"] == "class":
    # instantiate a Keras Model sub-class model
    print("[INFO] using model sub-classing...")
    model = MiniVGGNetModel(len(labelNames))
```

Here we check whether our Sequential, Functional, or Model Subclassing architecture should be instantiated. Based on the --model command line argument, the if/elif chain initializes the appropriate model.

From there, we are ready to compile the model and fit to our data:

```python
# initialize the optimizer and compile the model
opt = SGD(lr=INIT_LR, momentum=0.9, decay=INIT_LR / NUM_EPOCHS)
print("[INFO] training network...")
model.compile(loss="categorical_crossentropy", optimizer=opt,
    metrics=["accuracy"])

# train the network
H = model.fit_generator(
    aug.flow(trainX, trainY, batch_size=BATCH_SIZE),
    validation_data=(testX, testY),
    steps_per_epoch=trainX.shape[0] // BATCH_SIZE,
    epochs=NUM_EPOCHS,
    verbose=1)
```

All of our models are compiled with Stochastic Gradient Descent (SGD) using momentum and learning rate decay.

We then kick off training using Keras’ .fit_generator method, which pulls augmented batches from aug.flow. You may read about the .fit_generator method in depth in this article.
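The hyperparameters above translate into a few concrete numbers. This sketch assumes the time-based decay rule that the TF 2.x Keras SGD optimizer applies when decay is set: lr / (1 + decay * iterations), where iterations counts batch updates rather than epochs. decayed_lr is a hypothetical helper.

```python
# Worked numbers for the train.py hyperparameters.
# Assumes the TF 2.x Keras SGD time-based decay: lr / (1 + decay * iterations),
# where iterations counts batch updates. decayed_lr is an illustrative helper.

INIT_LR = 1e-2
BATCH_SIZE = 128
NUM_EPOCHS = 60
NUM_TRAIN = 50000  # size of the CIFAR-10 training split

steps_per_epoch = NUM_TRAIN // BATCH_SIZE  # 390 batches per epoch
decay = INIT_LR / NUM_EPOCHS


def decayed_lr(iterations):
    return INIT_LR / (1.0 + decay * iterations)


for epoch in (1, 30, 60):
    # learning rate after `epoch` full epochs of updates
    print(epoch, round(decayed_lr(epoch * steps_per_epoch), 5))
```

With 390 updates per epoch, the learning rate at the end of training is 0.01 / (1 + (0.01 / 60) × 23400) = 0.01 / 4.9 ≈ 0.0020, roughly a fifth of INIT_LR. The same 390 steps account for the “390/390” progress bars in the training logs.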

We’ll wrap up by evaluating our model and plotting the training history:

```python
# evaluate the network
print("[INFO] evaluating network...")
predictions = model.predict(testX, batch_size=BATCH_SIZE)
print(classification_report(testY.argmax(axis=1),
    predictions.argmax(axis=1), target_names=labelNames))

# determine the number of epochs and then construct the plot title
N = np.arange(0, NUM_EPOCHS)
title = "Training Loss and Accuracy on CIFAR-10 ({})".format(
    args["model"])

# plot the training loss and accuracy
plt.style.use("ggplot")
plt.figure()
plt.plot(N, H.history["loss"], label="train_loss")
plt.plot(N, H.history["val_loss"], label="val_loss")
plt.plot(N, H.history["accuracy"], label="train_acc")
plt.plot(N, H.history["val_accuracy"], label="val_acc")
plt.title(title)
plt.xlabel("Epoch #")
plt.ylabel("Loss/Accuracy")
plt.legend()
plt.savefig(args["plot"])
```

First, we make predictions on the test set and evaluate the network, printing a classification report to the terminal.

Finally, we plot the training accuracy/loss curves and save the plot to disk.

Keras Sequential model results

We are now ready to use Keras and TensorFlow 2.0 to train our Sequential model!

Take a second now to use the “Downloads” section of this tutorial to download the source code to this guide.

From there, open up a terminal and execute the following command to train and evaluate a Sequential model:

```
$ python train.py --model sequential --plot output/sequential.png
[INFO] loading CIFAR-10 dataset...
[INFO] using sequential model...
[INFO] training network...
Epoch 1/60
390/390 [==============================] - 25s 63ms/step - loss: 1.9162 - accuracy: 0.3165 - val_loss: 1.6599 - val_accuracy: 0.4163
Epoch 2/60
390/390 [==============================] - 24s 61ms/step - loss: 1.7170 - accuracy: 0.3849 - val_loss: 1.5639 - val_accuracy: 0.4471
Epoch 3/60
390/390 [==============================] - 23s 59ms/step - loss: 1.6499 - accuracy: 0.4093 - val_loss: 1.5228 - val_accuracy: 0.4668
...
Epoch 58/60
390/390 [==============================] - 24s 61ms/step - loss: 1.3343 - accuracy: 0.5299 - val_loss: 1.2767 - val_accuracy: 0.5655
Epoch 59/60
390/390 [==============================] - 24s 61ms/step - loss: 1.3276 - accuracy: 0.5334 - val_loss: 1.2461 - val_accuracy: 0.5755
Epoch 60/60
390/390 [==============================] - 24s 61ms/step - loss: 1.3280 - accuracy: 0.5342 - val_loss: 1.2555 - val_accuracy: 0.5715
[INFO] evaluating network...
              precision    recall  f1-score   support

    airplane       0.73      0.52      0.60      1000
  automobile       0.62      0.80      0.70      1000
        bird       0.58      0.30      0.40      1000
         cat       0.51      0.24      0.32      1000
        deer       0.69      0.32      0.43      1000
         dog       0.53      0.51      0.52      1000
        frog       0.47      0.84      0.60      1000
       horse       0.55      0.73      0.62      1000
        ship       0.69      0.69      0.69      1000
       truck       0.52      0.77      0.62      1000

    accuracy                           0.57     10000
   macro avg       0.59      0.57      0.55     10000
weighted avg       0.59      0.57      0.55     10000
```

Here we are obtaining 57% accuracy on the CIFAR-10 dataset.

Looking at our training history plot in Figure 5, we notice that our validation loss is lower than our training loss for nearly the entire training process, which suggests this shallow model is underfitting. We can improve our accuracy by increasing the model complexity, which is exactly what we’ll do in the next section.
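The report’s accuracy row (0.57) and its macro-averaged precision (0.59) are different quantities. Every CIFAR-10 test class has the same support (1,000 images), so accuracy equals the mean of the per-class recalls. The short sketch below checks this using the rounded values from the report above, so agreement is only up to rounding.

```python
# Rounded per-class values from the Sequential model's classification report
recall = [0.52, 0.80, 0.30, 0.24, 0.32, 0.51, 0.84, 0.73, 0.69, 0.77]
precision = [0.73, 0.62, 0.58, 0.51, 0.69, 0.53, 0.47, 0.55, 0.69, 0.52]
support = 1000  # test images per class

# correct predictions per class = recall * support
correct = sum(r * support for r in recall)
accuracy = correct / (support * len(recall))  # equals the mean recall here
macro_precision = sum(precision) / len(precision)

print(round(accuracy, 2), round(macro_precision, 2))  # 0.57 0.59
```

The same pattern holds for the other two models’ reports. Their accuracy rows (0.86 and 0.70) match their macro-averaged recall, while their macro-averaged precision is higher (0.87 and 0.73).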

Keras Functional model results

Our Functional model implementation is far deeper and more complex than our Sequential example.

Again, make sure you’ve used the “Downloads” section of this guide to download the source code.

Once you have the source code, execute the following command to train our Functional model:

```
$ python train.py --model functional --plot output/functional.png
[INFO] loading CIFAR-10 dataset...
[INFO] using functional model...
[INFO] training network...
Epoch 1/60
390/390 [==============================] - 69s 178ms/step - loss: 1.6112 - accuracy: 0.4091 - val_loss: 2.2448 - val_accuracy: 0.2866
Epoch 2/60
390/390 [==============================] - 60s 153ms/step - loss: 1.2376 - accuracy: 0.5550 - val_loss: 1.3850 - val_accuracy: 0.5259
Epoch 3/60
390/390 [==============================] - 59s 151ms/step - loss: 1.0665 - accuracy: 0.6203 - val_loss: 1.4964 - val_accuracy: 0.5370
...
Epoch 58/60
390/390 [==============================] - 59s 151ms/step - loss: 0.2498 - accuracy: 0.9141 - val_loss: 0.4282 - val_accuracy: 0.8756
Epoch 59/60
390/390 [==============================] - 58s 149ms/step - loss: 0.2398 - accuracy: 0.9184 - val_loss: 0.4874 - val_accuracy: 0.8643
Epoch 60/60
390/390 [==============================] - 61s 156ms/step - loss: 0.2442 - accuracy: 0.9155 - val_loss: 0.4981 - val_accuracy: 0.8649
[INFO] evaluating network...
              precision    recall  f1-score   support

    airplane       0.94      0.84      0.89      1000
  automobile       0.95      0.94      0.94      1000
        bird       0.70      0.92      0.80      1000
         cat       0.85      0.64      0.73      1000
        deer       0.77      0.92      0.84      1000
         dog       0.91      0.70      0.79      1000
        frog       0.88      0.94      0.91      1000
       horse       0.95      0.85      0.90      1000
        ship       0.89      0.96      0.92      1000
       truck       0.89      0.95      0.92      1000

    accuracy                           0.86     10000
   macro avg       0.87      0.86      0.86     10000
weighted avg       0.87      0.86      0.86     10000
```

This time we’ve been able to boost our accuracy all the way up to 86%!

Keras Model subclassing results

Our final experiment evaluates our implementation of model subclassing using Keras.

The model we’re using here is a variation of VGGNet — an essentially sequential model consisting of 3×3 CONVs and 2×2 max-pooling for volume dimension reduction.

We used Keras model subclassing here (rather than the Sequential API) as a simple example of how you may take an existing model and convert it to a subclassed architecture.

Note: Implementing your own custom layer types and training procedures for the model subclassing API is outside the scope of this post but I will cover it in a future guide.

To see Keras model subclassing in action make sure you’ve used the “Downloads” section of this guide to grab the code — from there you can execute the following command:

```
$ python train.py --model class --plot output/class.png
[INFO] loading CIFAR-10 dataset...
[INFO] using model sub-classing...
[INFO] training network...
Epoch 1/60
...
Epoch 58/60
390/390 [==============================] - 30s 77ms/step - loss: 0.9100 - accuracy: 0.6799 - val_loss: 0.8620 - val_accuracy: 0.7057
Epoch 59/60
390/390 [==============================] - 30s 77ms/step - loss: 0.9100 - accuracy: 0.6792 - val_loss: 0.8783 - val_accuracy: 0.6995
Epoch 60/60
390/390 [==============================] - 30s 77ms/step - loss: 0.9036 - accuracy: 0.6785 - val_loss: 0.8960 - val_accuracy: 0.6955
[INFO] evaluating network...
              precision    recall  f1-score   support

    airplane       0.76      0.77      0.77      1000
  automobile       0.80      0.90      0.85      1000
        bird       0.81      0.46      0.59      1000
         cat       0.63      0.36      0.46      1000
        deer       0.68      0.57      0.62      1000
         dog       0.78      0.45      0.57      1000
        frog       0.45      0.96      0.62      1000
       horse       0.74      0.81      0.77      1000
        ship       0.90      0.79      0.84      1000
       truck       0.73      0.89      0.80      1000

    accuracy                           0.70     10000
   macro avg       0.73      0.70      0.69     10000
weighted avg       0.73      0.70      0.69     10000
```

Here we obtain 70% accuracy. That is not quite as good as our MiniGoogLeNet implementation, but it still serves as an example of how to implement an architecture using Keras’ model subclassing feature.

In general, I do not recommend using Keras’ model subclassing:

- It’s harder to use.

- It adds more code complexity.

- It’s harder to debug.

…but it does give you full control over the model.

Typically I would only recommend you use Keras’ model subclassing if you are a:

- Deep learning researcher implementing custom layers, models, and training procedures.

- Deep learning practitioner trying to replicate the results of a researcher/paper.

The majority of deep learning practitioners are not going to need Keras’ model subclassing feature.


Summary

In this tutorial you learned the three ways to implement a neural network architecture using Keras and TensorFlow 2.0:

- Sequential: Used for implementing simple layer-by-layer architectures without multiple inputs, multiple outputs, or layer branches. Typically the first model API you use when getting started with Keras.

- Functional: The most popular Keras model implementation API. Allows everything inside the Sequential API, but also facilitates substantially more complex architectures which include multiple inputs and outputs, branching, etc. Best of all, the syntax for Keras’ Functional API is clean and easy to use.

- Model subclassing: Utilized when a deep learning researcher/practitioner needs full control over model, layer, and training procedure implementation. Code is verbose, harder to write, and even harder to debug. Most deep learning practitioners won’t need to subclass models using Keras, but if you’re doing research or custom implementation, model subclassing is there if you need it!

If you’re interested in learning more about the Sequential, Functional, and Model Subclassing APIs, be sure to refer to my book, Deep Learning for Computer Vision with Python, where I cover them in more detail.

I hope you enjoyed today’s tutorial!
